As I write this, my apartment smells like turpentine and tea. The turpentine is for cleaning the brushes and stencils I’m using to paint on recent prints. I bought the tea in Istanbul and have just a little stash left. It feels like a place where things are made and life is lived. It’s a good time to look back over the highlights of 2024.

- Turner's watercolors in Edinburgh

- AI landscapes for a problematic project

- New prints

- 100 posts in 100 days: an Instagram experiment

- Layer Cake in Berlin

- Superbooth24

- Norwegian heavy metal

- Skulls: a more successful AI project

- Experimental Photography Festival in Barcelona

- Phasenpunkte

- Programming glass robots

- Skateboarding at SFMOMA

- Synthesizer brain transplants

- Modern Istanbul

- Making prints at Kunstquartier Bethanien

- Glide path

Turner’s watercolors in Edinburgh

William Turner was an English painter who lived from 1775 to 1851. He was eccentric but influential, and he is a pivotal figure in English art history. A group of his watercolors is housed at the Royal Scottish Academy in Edinburgh, Scotland, and it can only be seen once a year.

This collection of Turner watercolours was left to the nation in 1900 by the art collector Henry Vaughan. Since then, following Vaughan’s strict guidelines, they have only ever been displayed during the month of January, when natural light levels are at their lowest. Because of this, these watercolours still possess a freshness and an intensity of colour, almost 200 years since they were originally created.

I planned a trip to see these paintings in person and spend a few days in Edinburgh as well. January weather there is heavy, and Storm Isha made my trip wet and windy. I still got to see these amazing watercolors, though. They have an intensity that doesn’t translate to any book or digital image. Seeing them in person was worth it.

Running at the same venue was a large exhibit by the Royal Scottish Society of Painters in Watercolour. I wasn’t expecting such a massive collection of contemporary watercolors. That show taught me there is a whole tradition of Scottish watercolor painters that is still very active. The society has an online gallery of the exhibit that is worth a look.

Turner’s watercolors

Photos I made in Edinburgh

AI landscapes for a problematic project

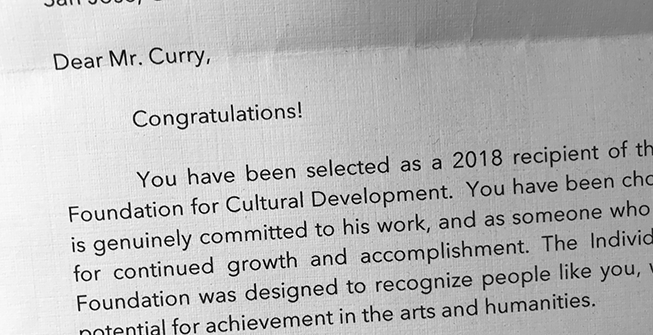

An AI-related art project I started back in 2020 came to fruition in January. It didn’t go smoothly.

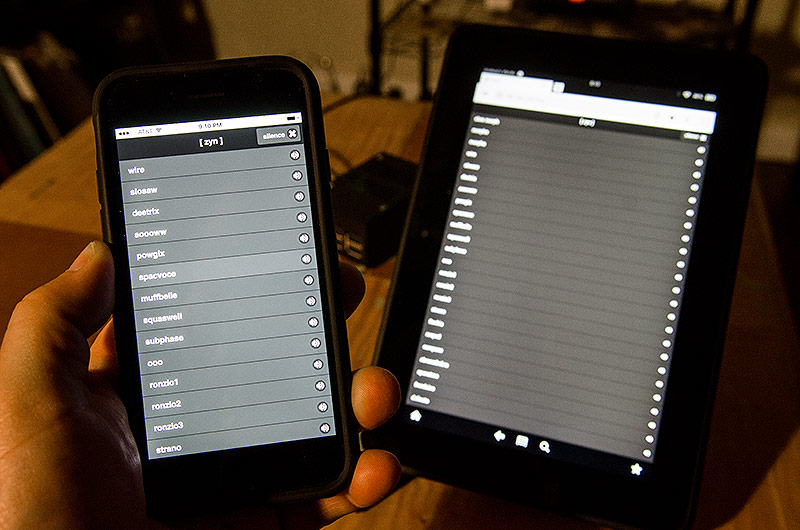

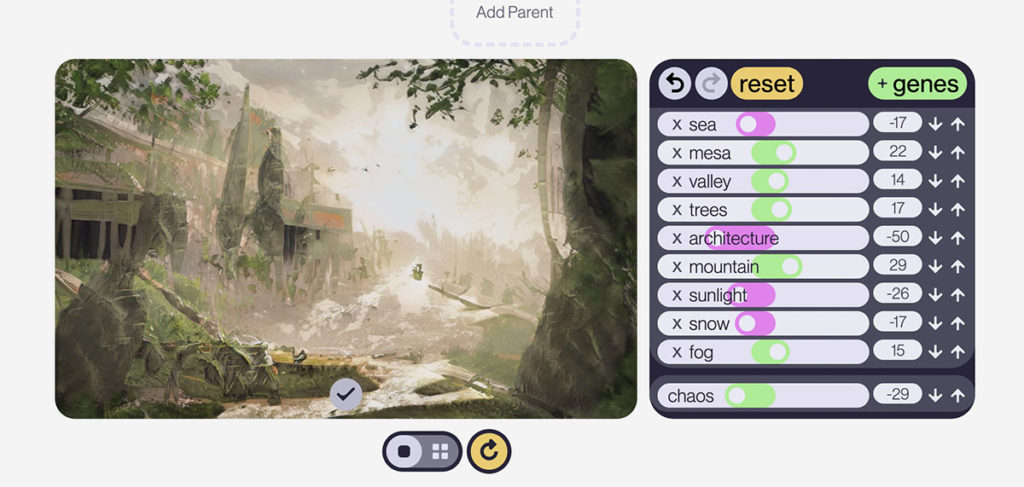

In 2020, online image generators were much simpler, and their results were primitive by today’s standards. ChatGPT and DALL-E didn’t exist yet, and there was little public awareness of these processes. I began tinkering with a tool called Artbreeder because it had the novel ability to generate landscapes instead of just avatars. It also used a model trained on real paintings instead of procedural techniques borrowed from 3D and video game software.

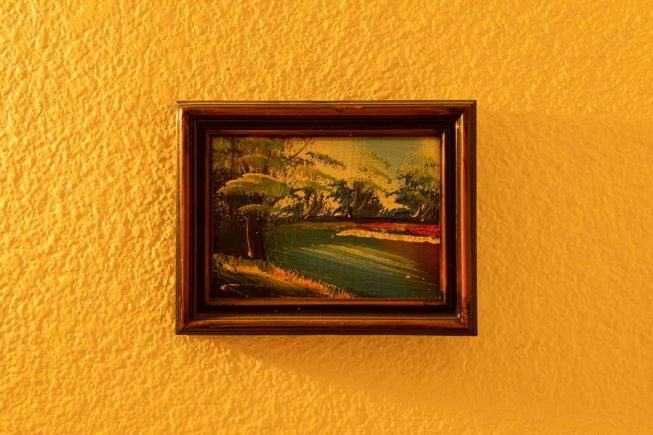

Instead of a text prompt, it had a panel of sliders that you moved around to get the results you wanted. I spent a fair amount of time experimenting with it and came up with a set of landscapes and objects related to my own aesthetic and art practice. I then edited those down to a smaller set of coherent, conceptually related images. They reminded me of some of the early Western photographs by Carleton Watkins. I wasn’t sure what direction the project would take, so I held onto them for later.

Initially, I considered commissioning painters from Dafen, China, to create large-scale paintings of the images. That would have made a compelling story, but the logistics would have been costly and time-consuming. I was also very involved in the San Jose, California, art community and thought it would be interesting to collaborate with a local painter instead. Maybe they would bring contributions I hadn’t thought of.

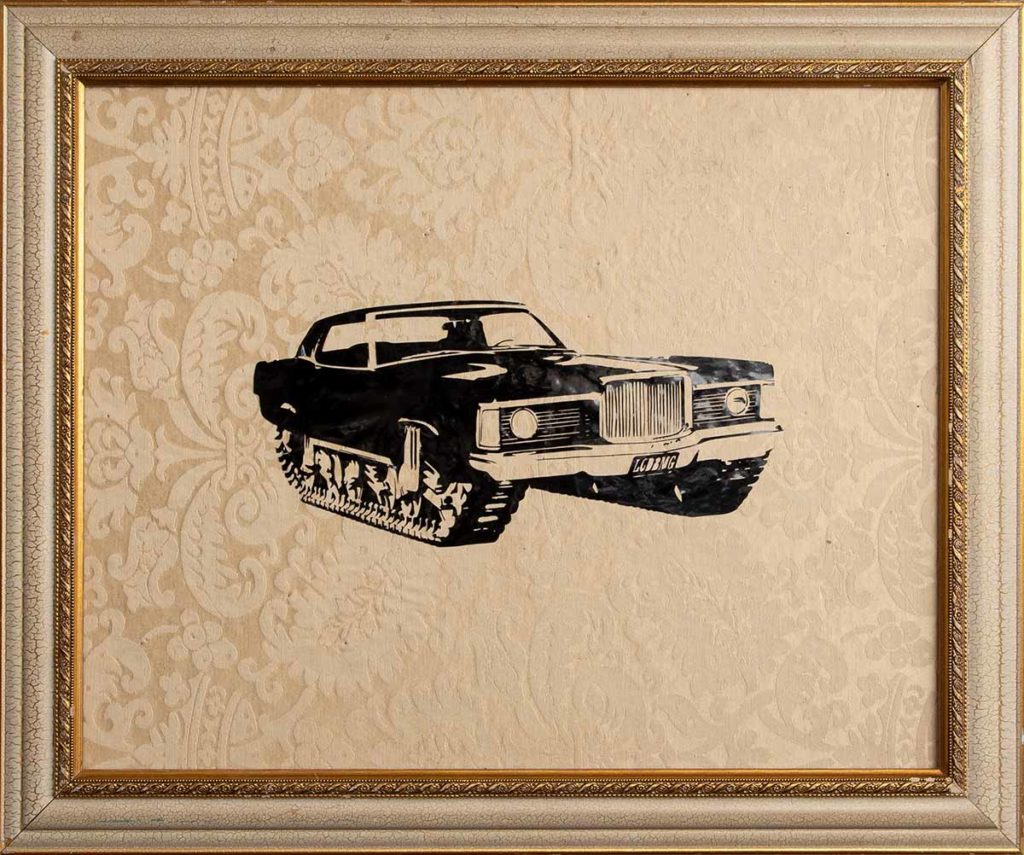

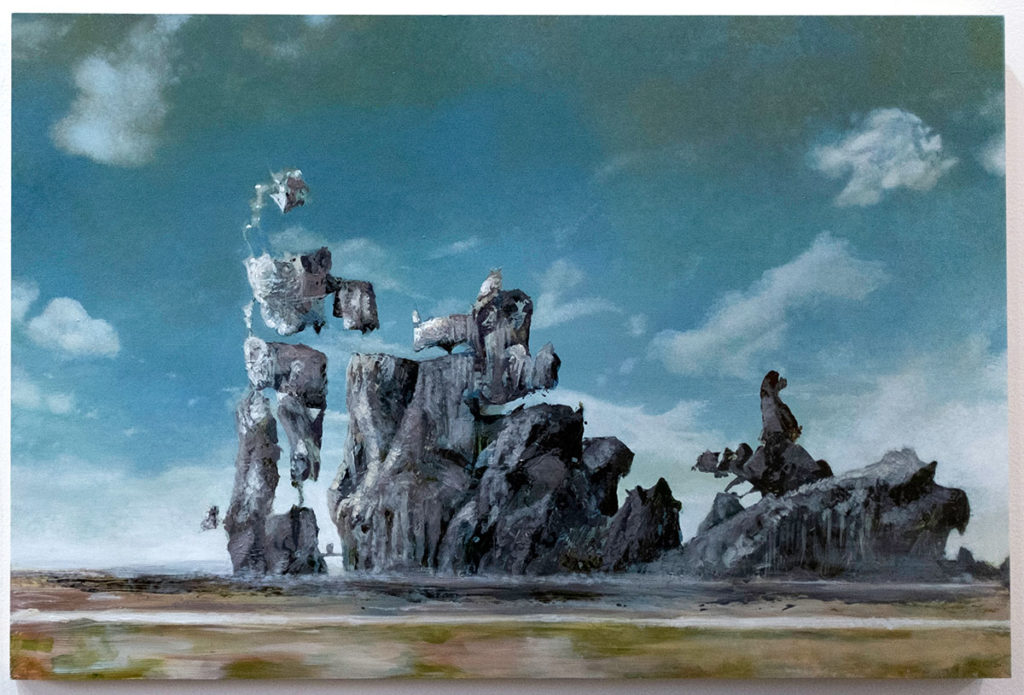

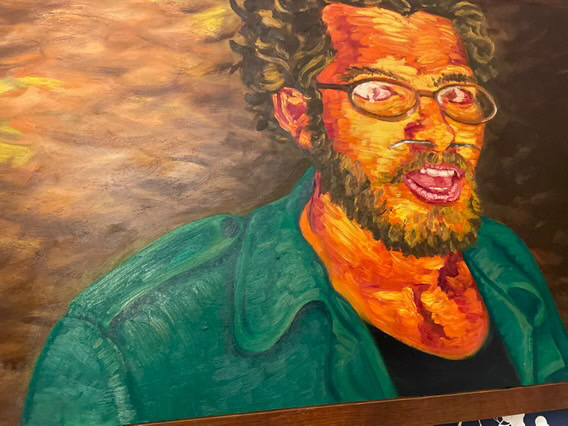

I saw a painter I knew at Kaleid Gallery and proposed the project to her. She was receptive, and the collaboration began soon after. Her first painting (shown here) was a success, and I saw good possibilities for a future show. I ended up moving to Berlin but later reached out to check on her progress. She had kept working and had even made plans to exhibit the paintings at Kaleid. That show happened this January.

A few weeks before the show, we had a disagreement about attribution, ownership, and money. We had an informal agreement to split everything evenly, but a last-minute contract made very different claims. This is a prime example of the problems that come from saying, “Don’t worry about it. We’ll work out the details later.” Those details turned out to be highly problematic. It was bad enough that I withdrew from the show and refused to participate. Kaleid director Cherri Lakey stepped in and rescued the show from the abyss. It wouldn’t have happened without her diplomacy, and she deserves a lot of credit.

It’s a shame, because it was the perfect time to stage a show like that. Lots of people were interested, some paintings sold, and the show did well. I think it would have been even more popular here in Berlin. Unfortunately, the painter destroyed the paintings that did not sell, and our working relationship ended badly.

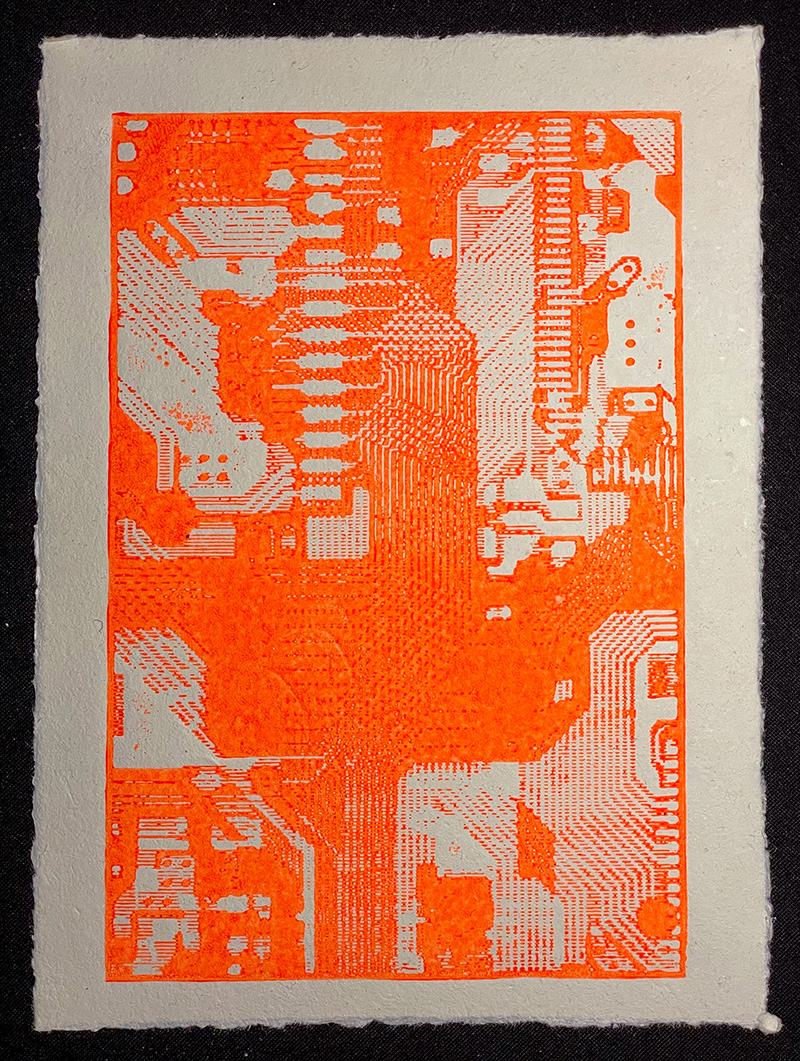

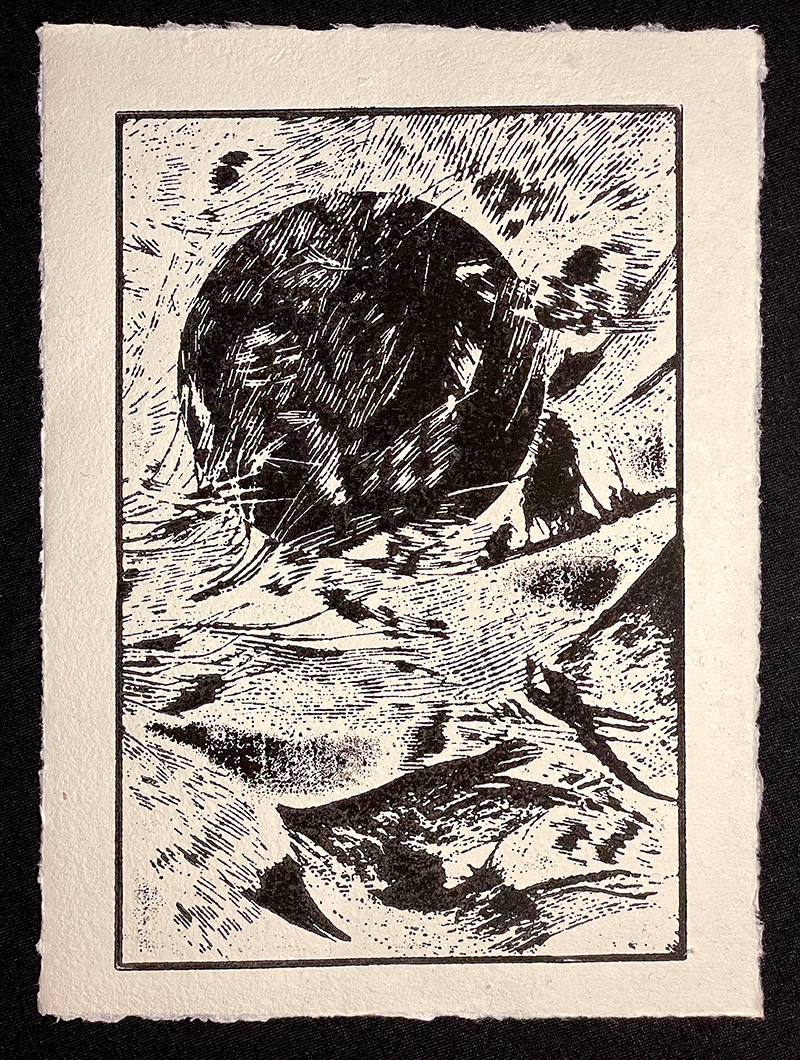

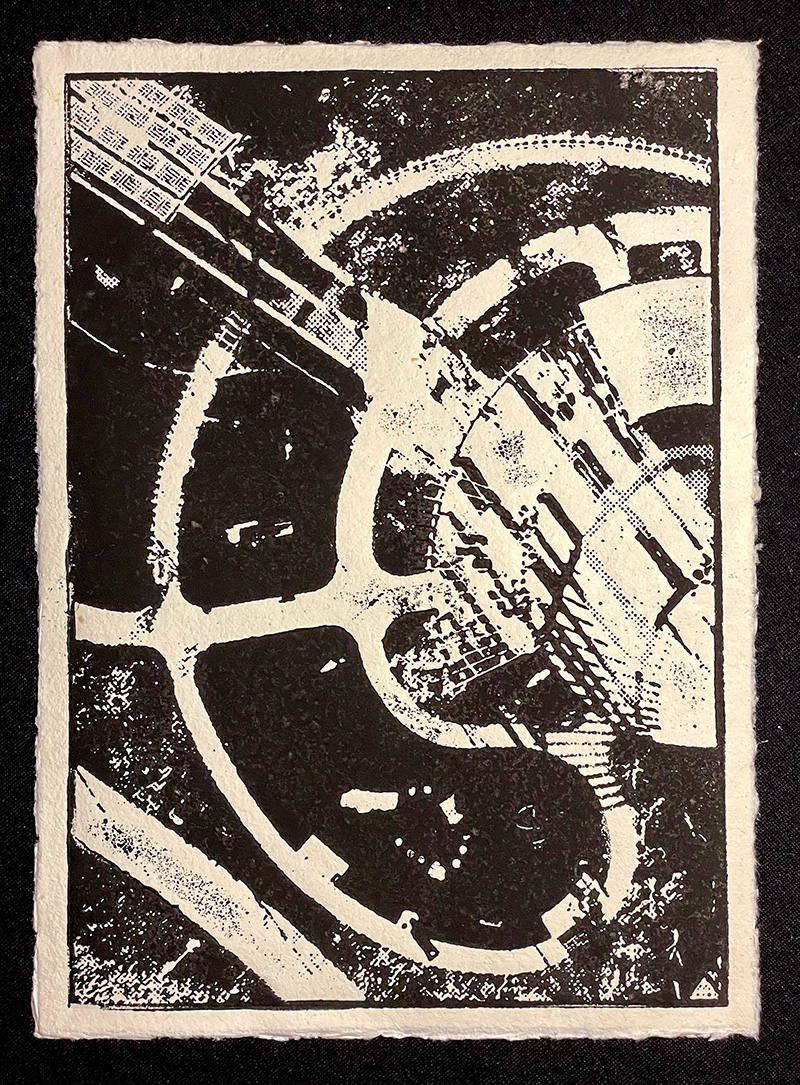

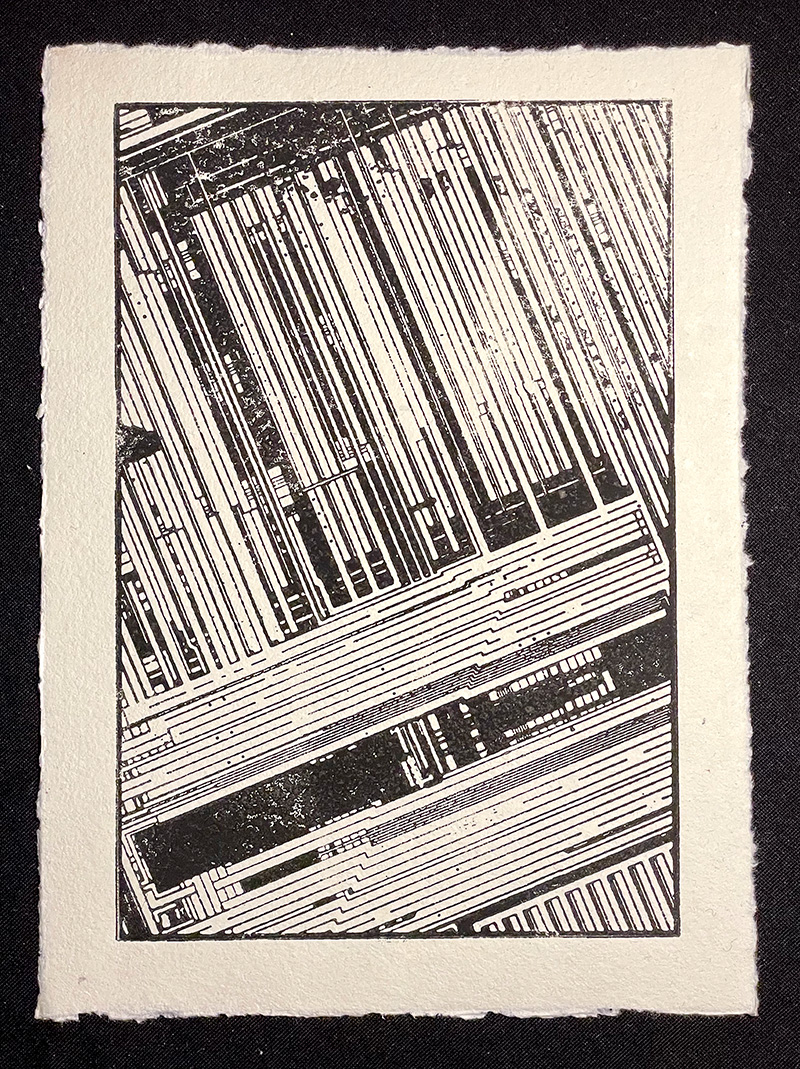

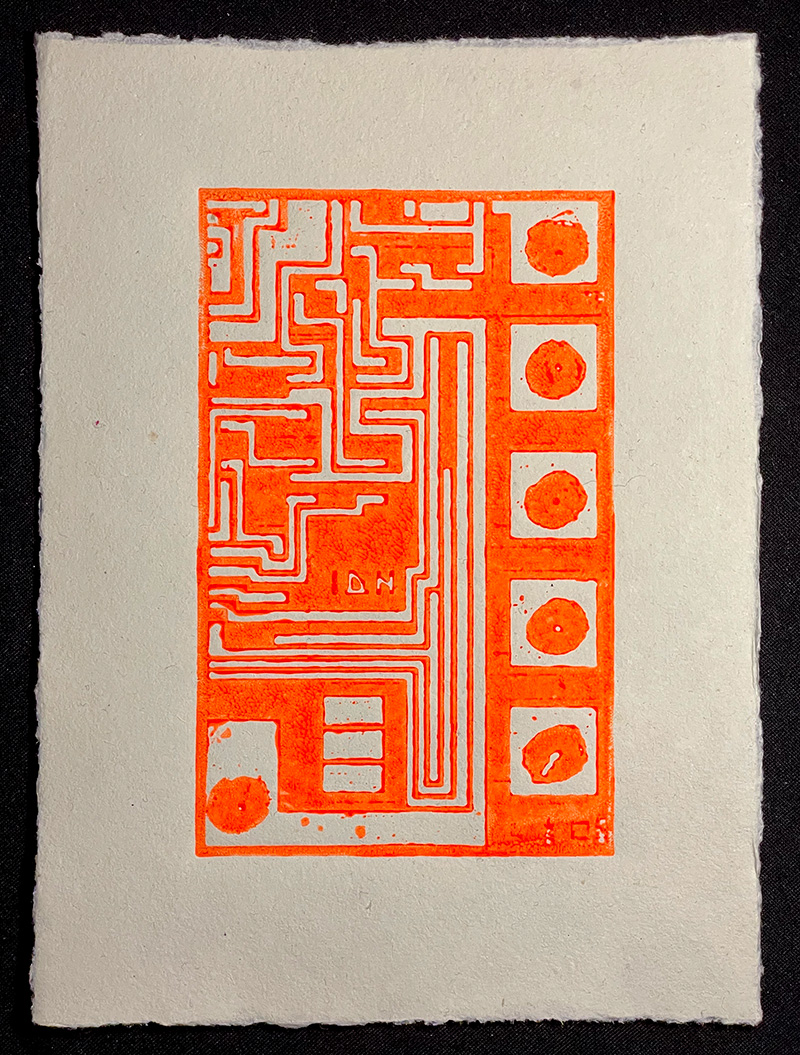

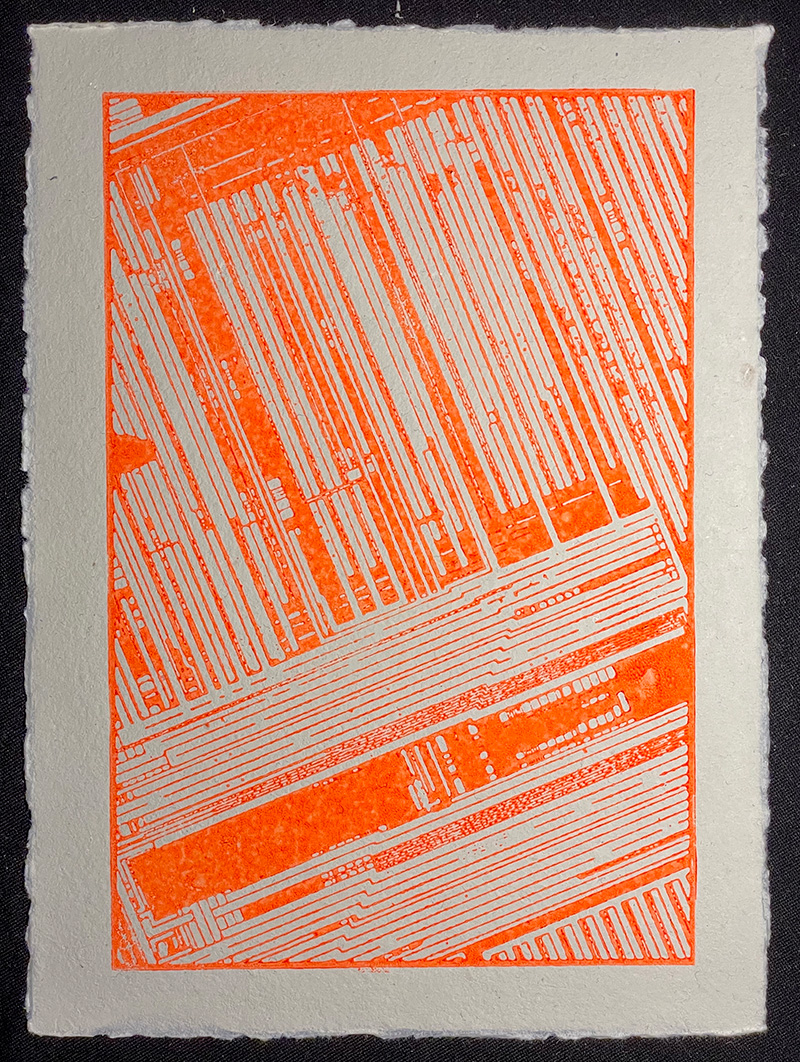

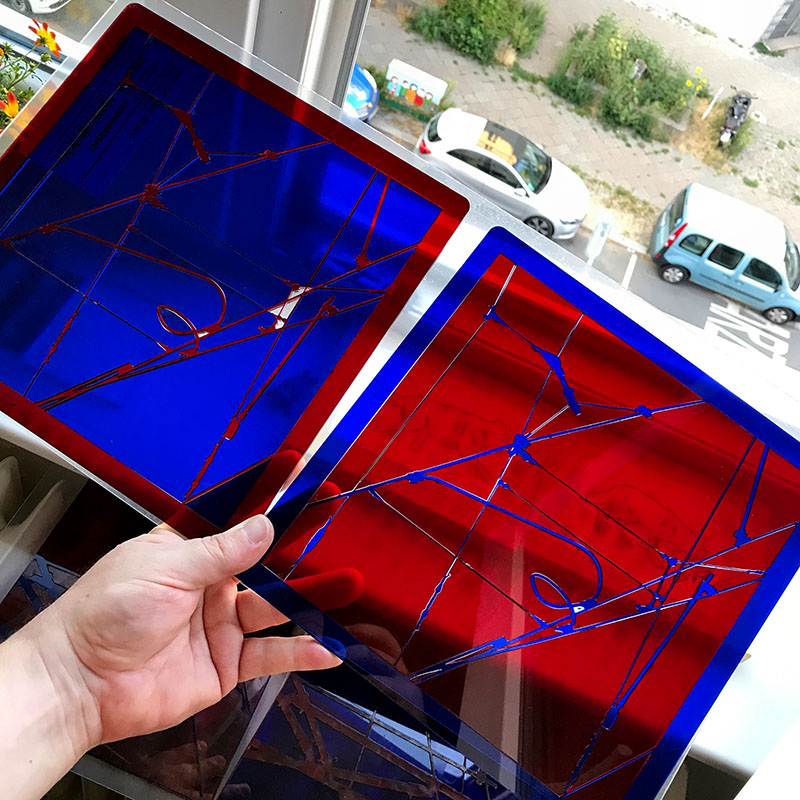

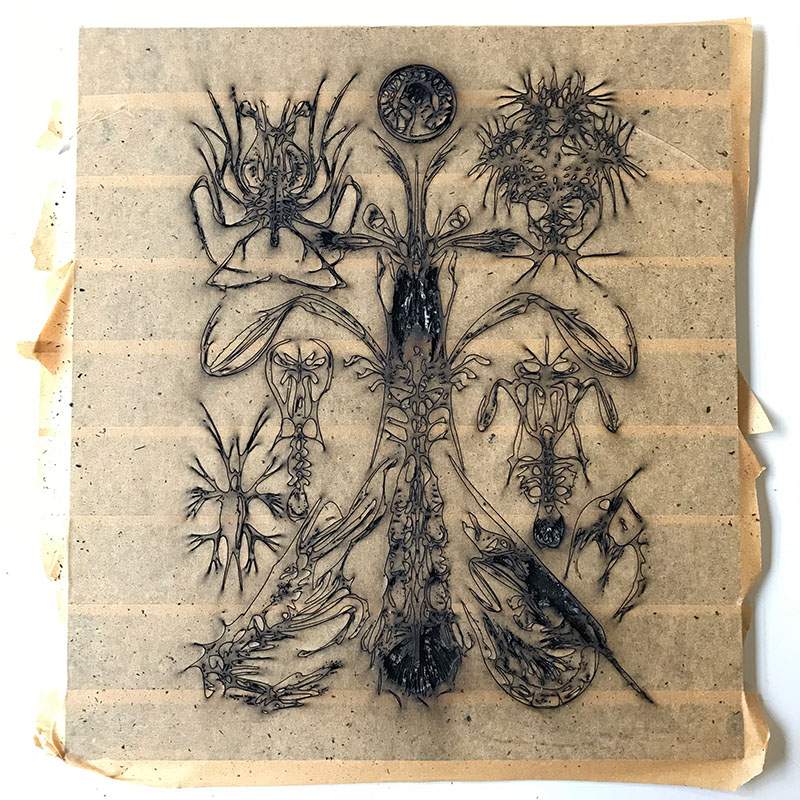

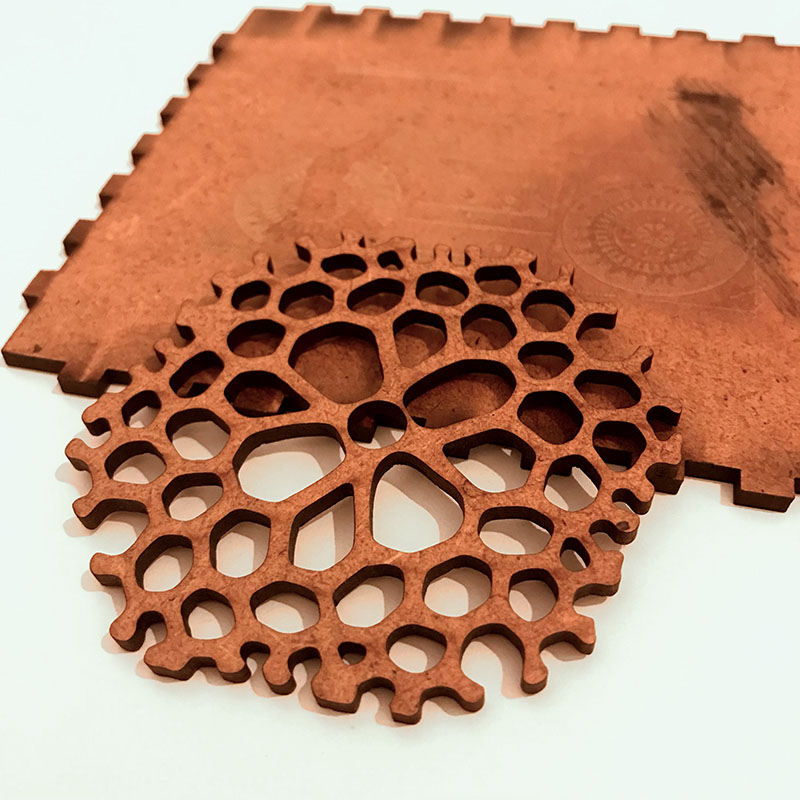

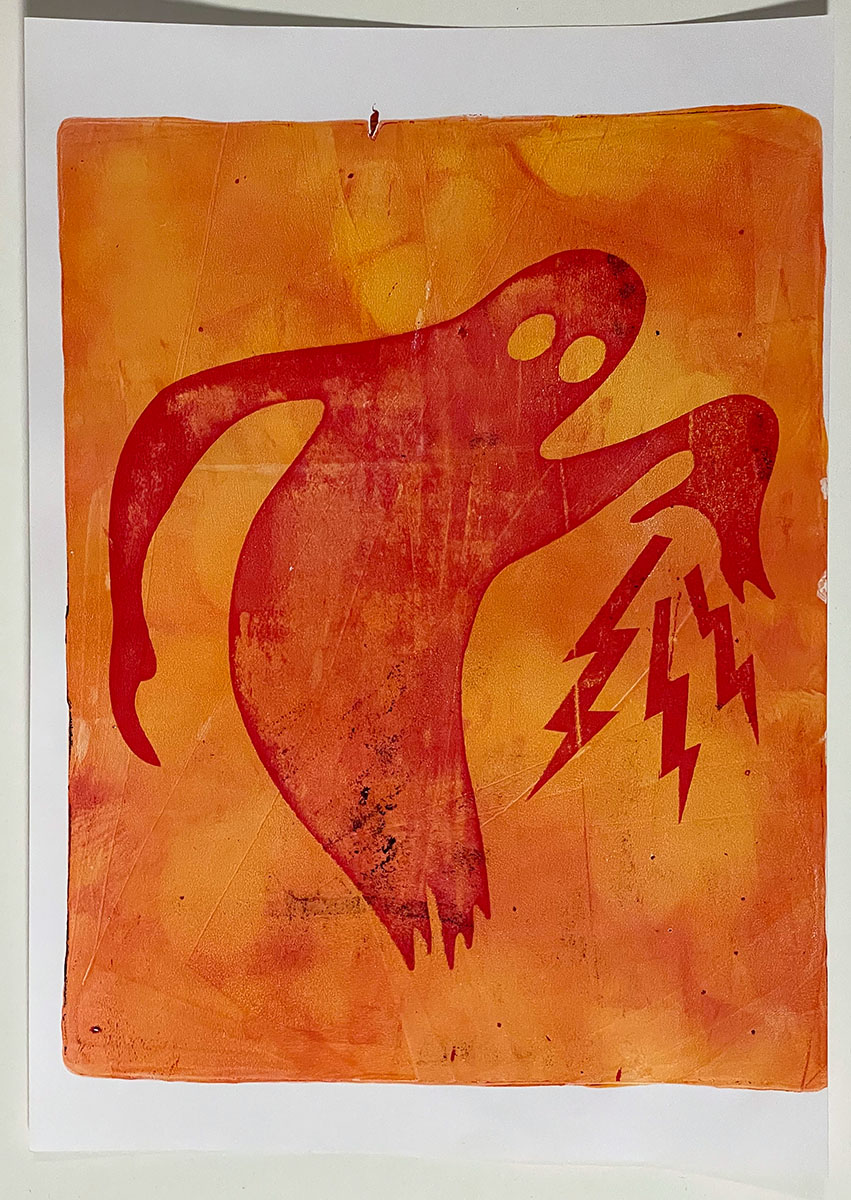

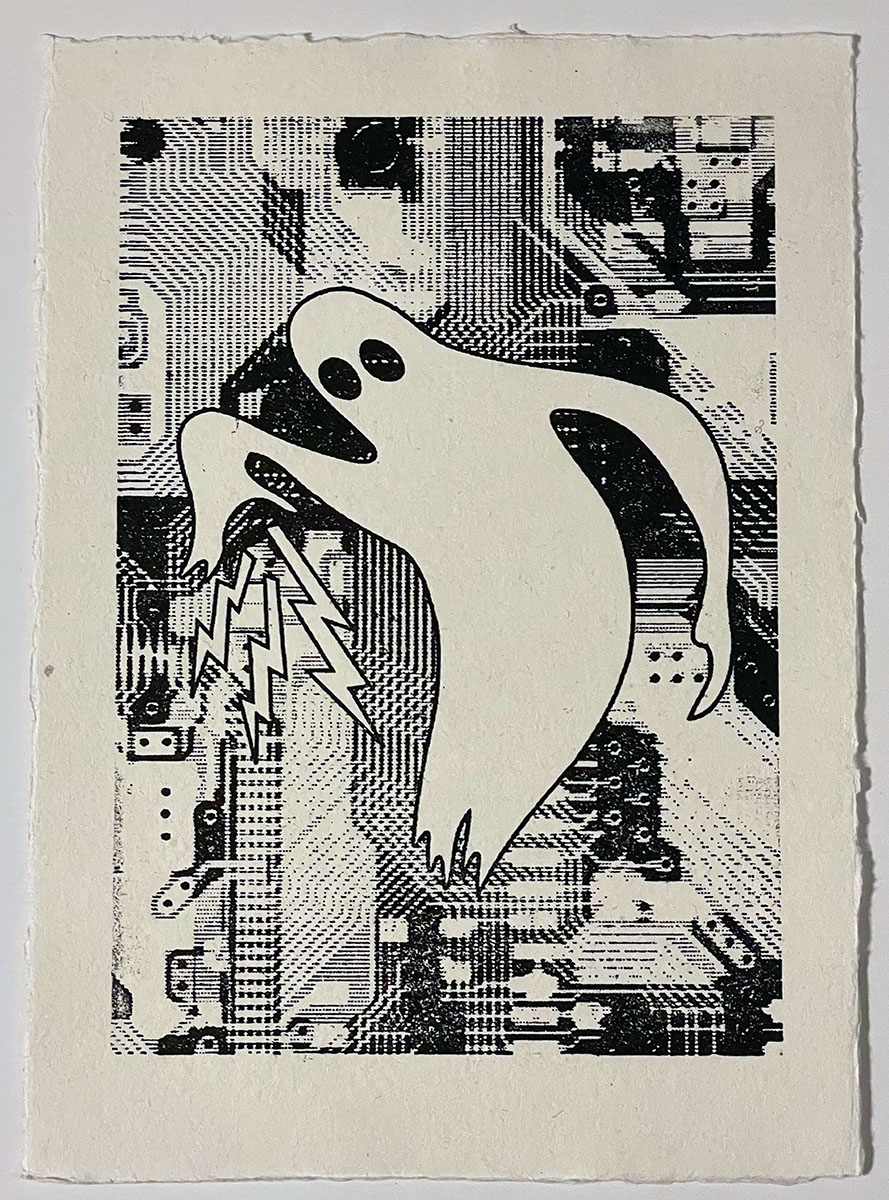

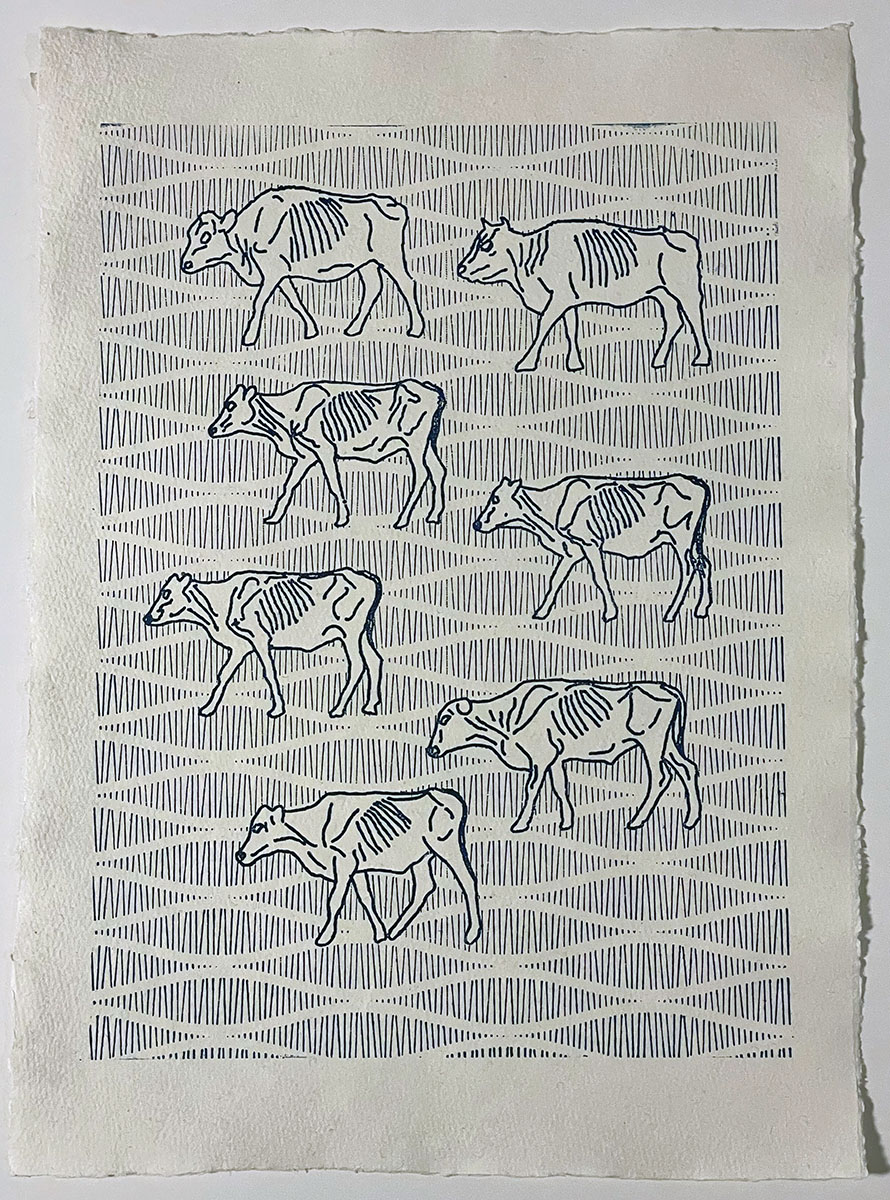

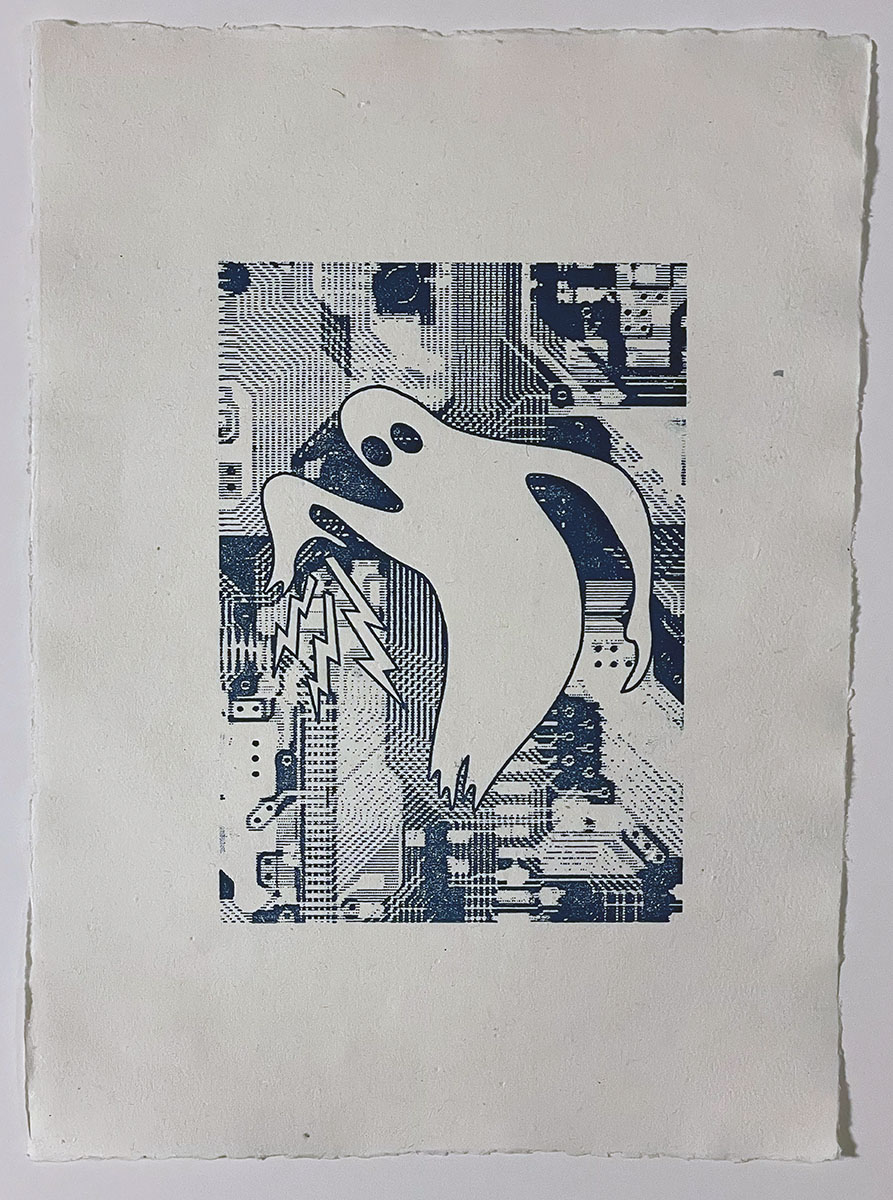

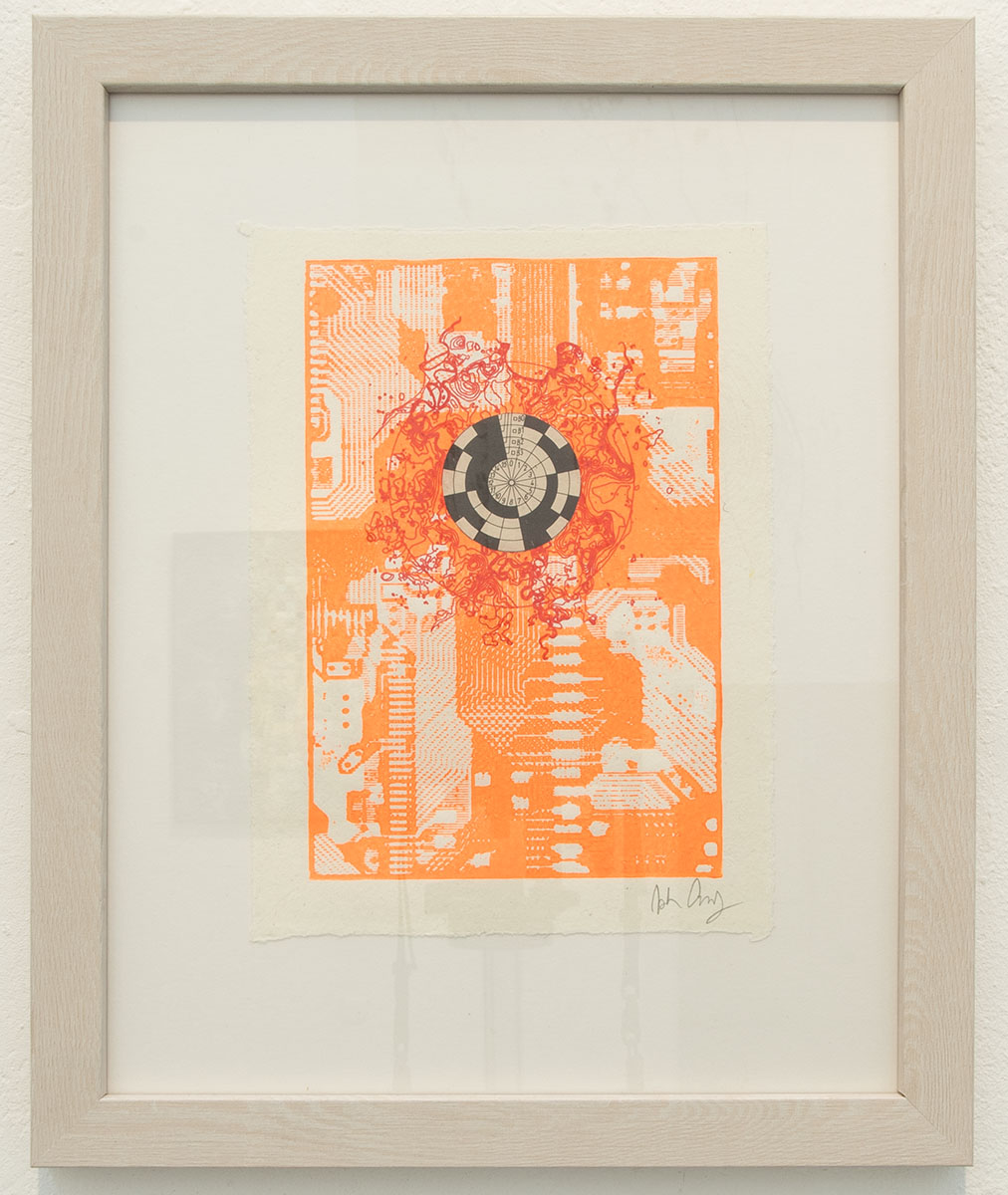

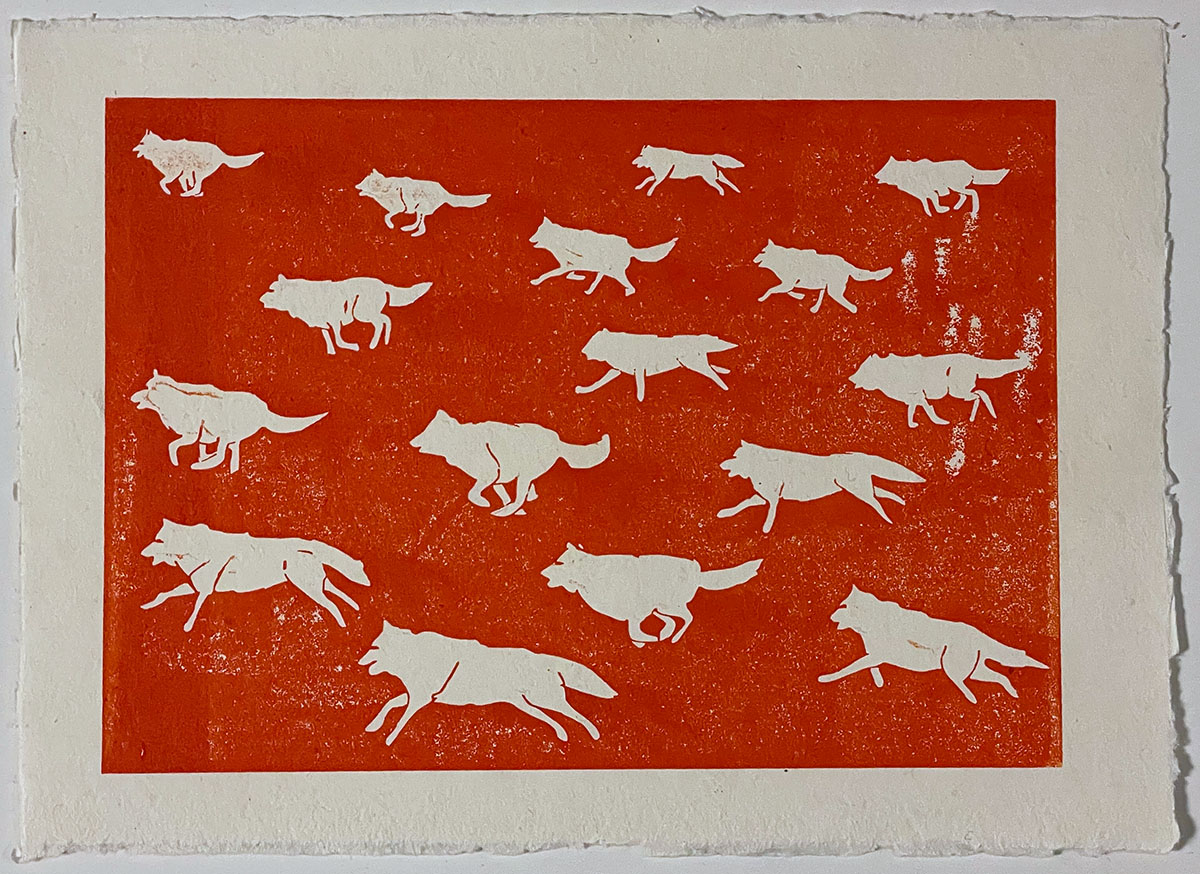

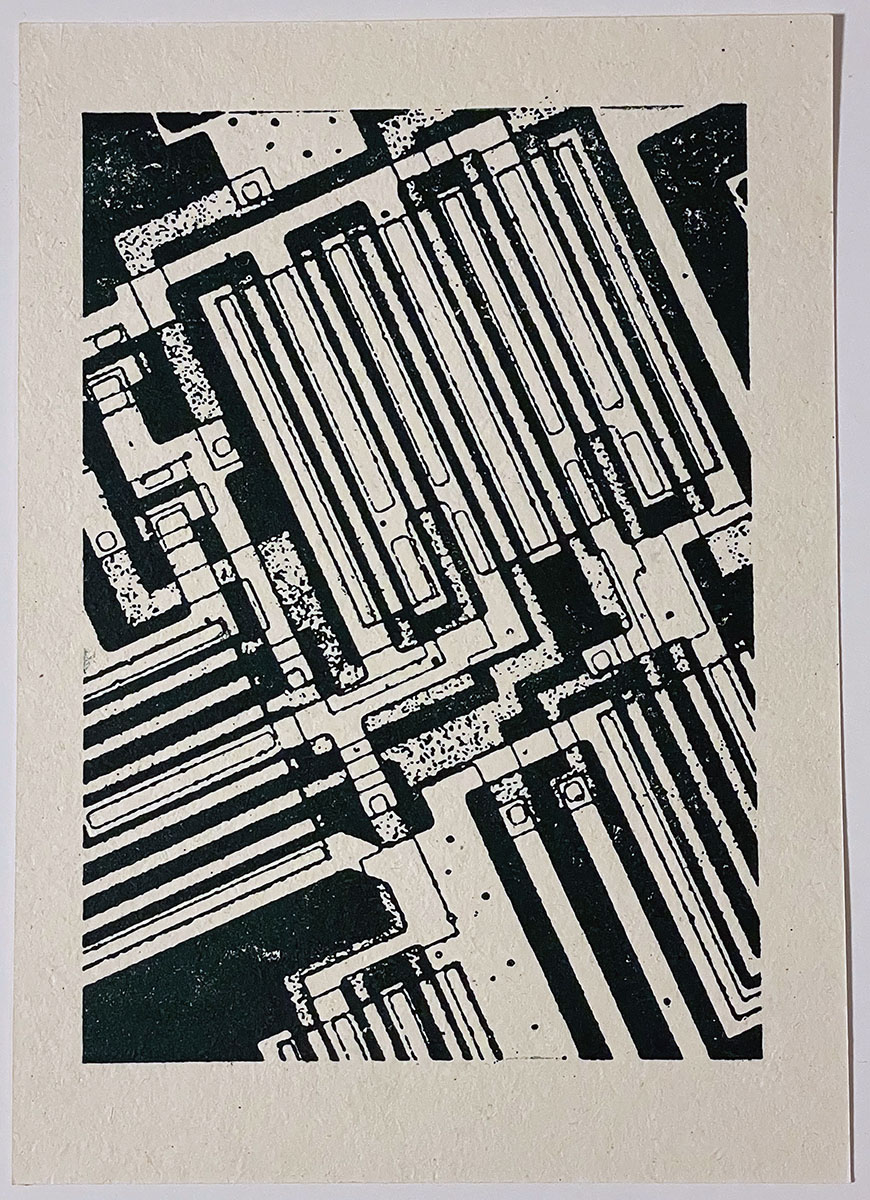

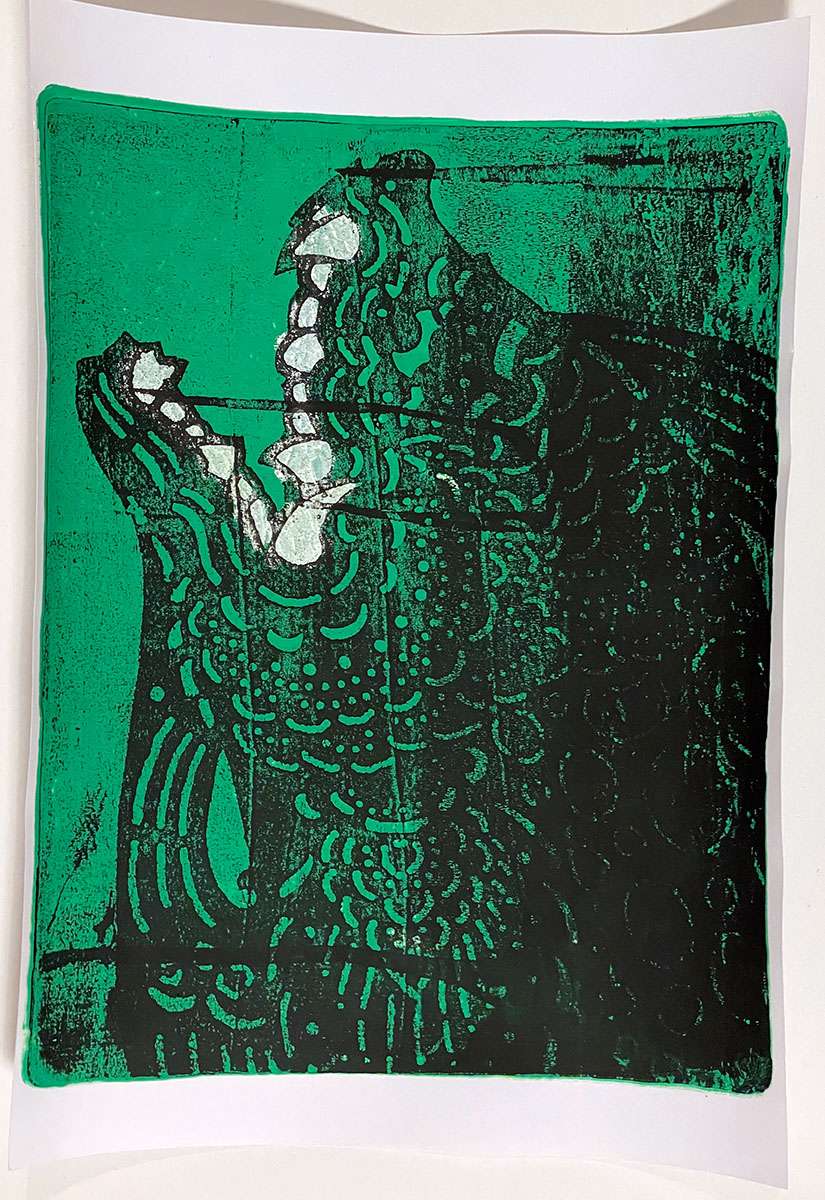

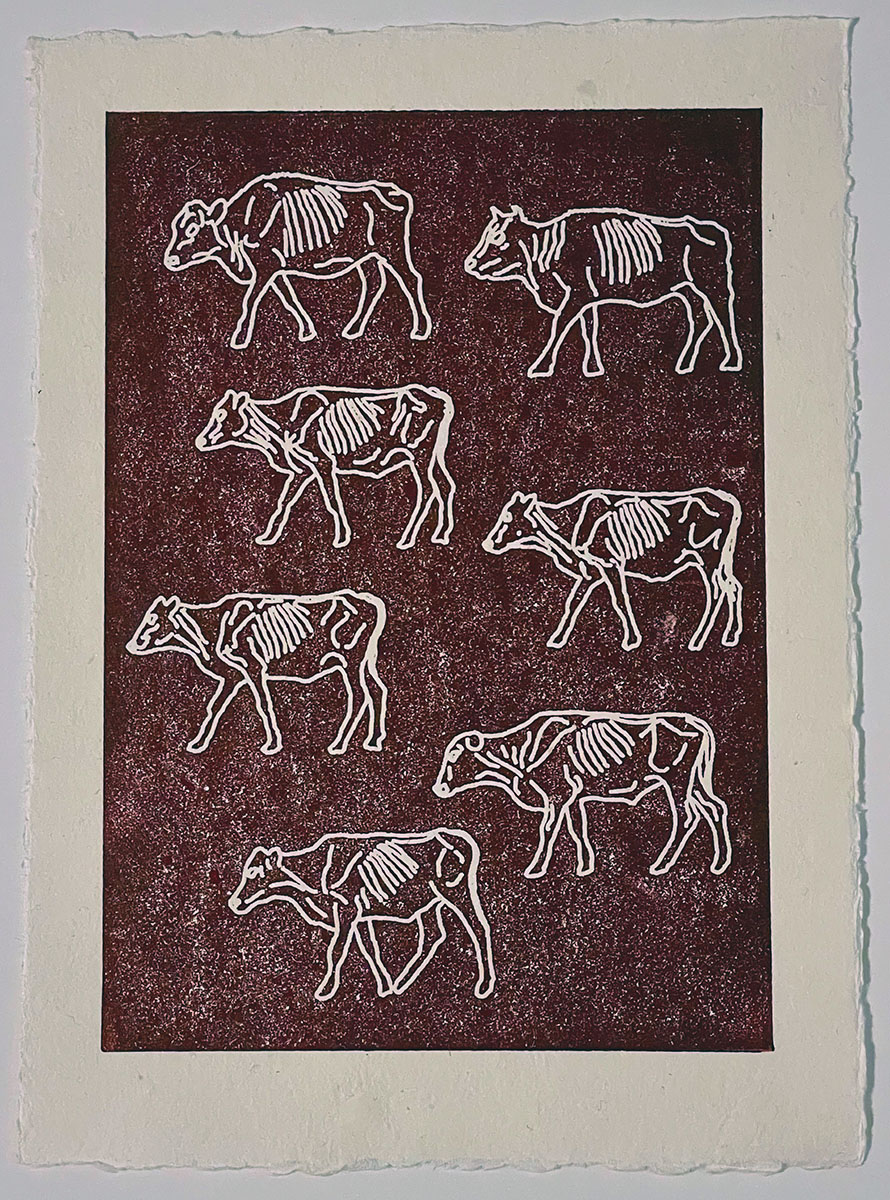

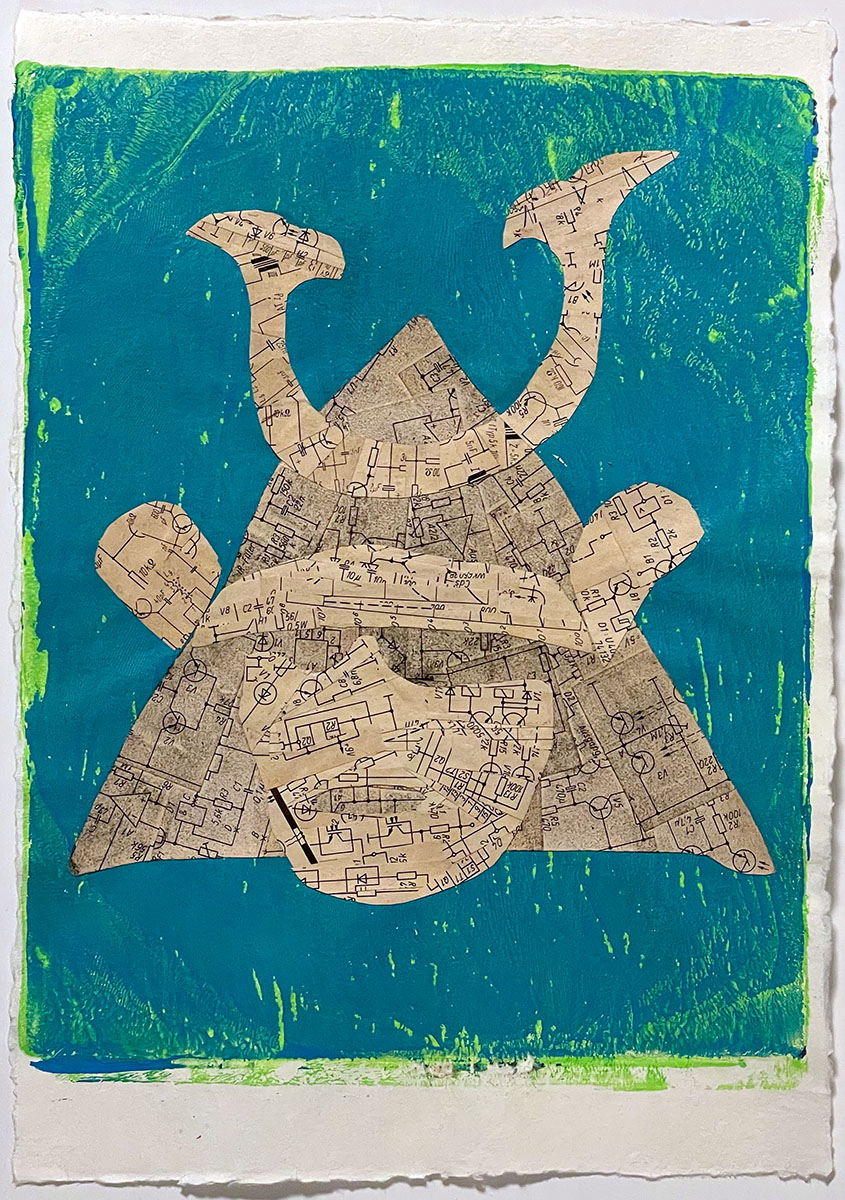

New prints

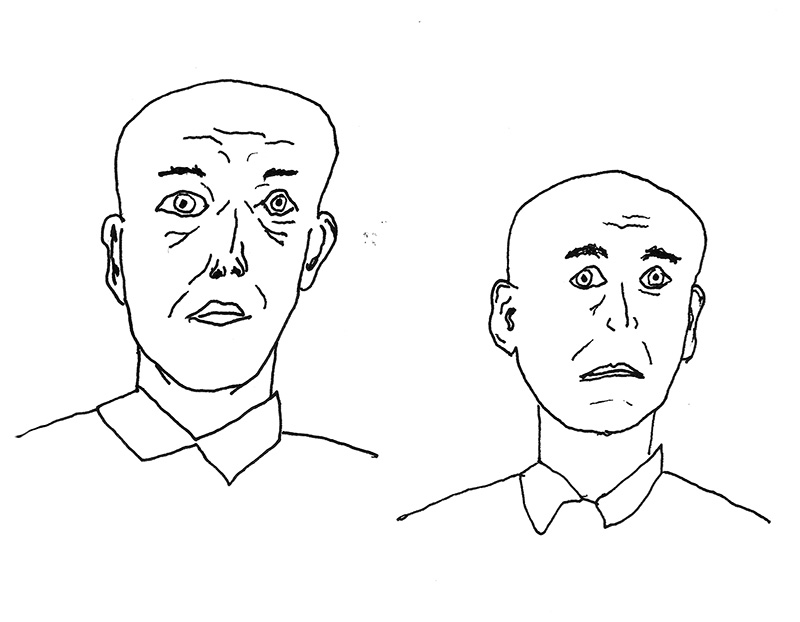

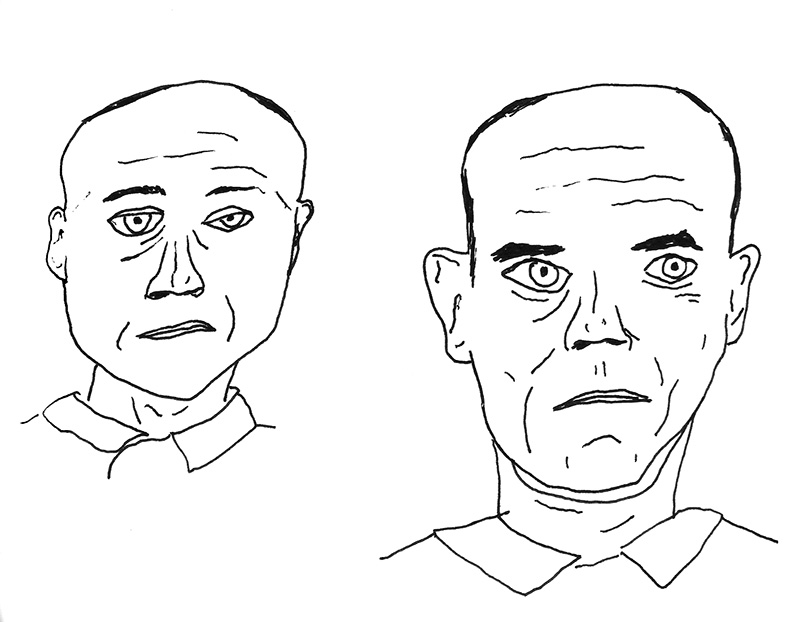

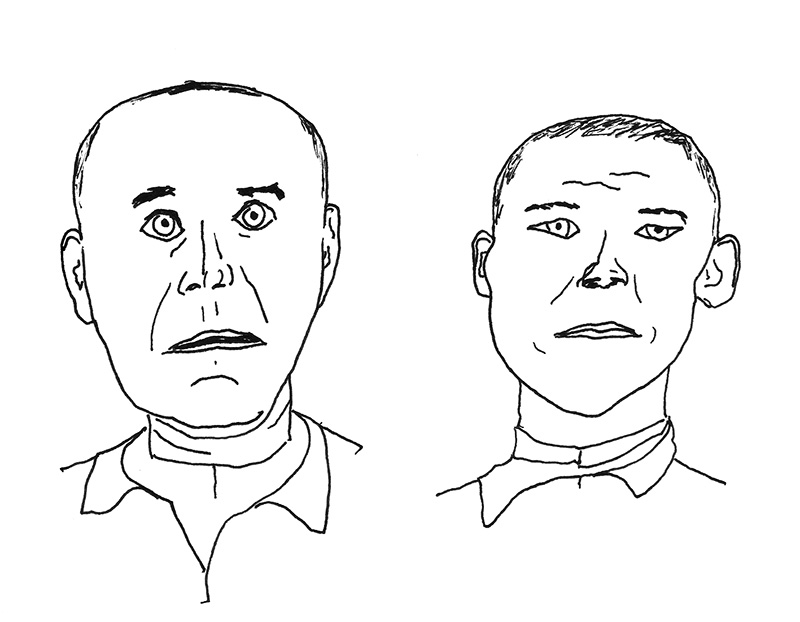

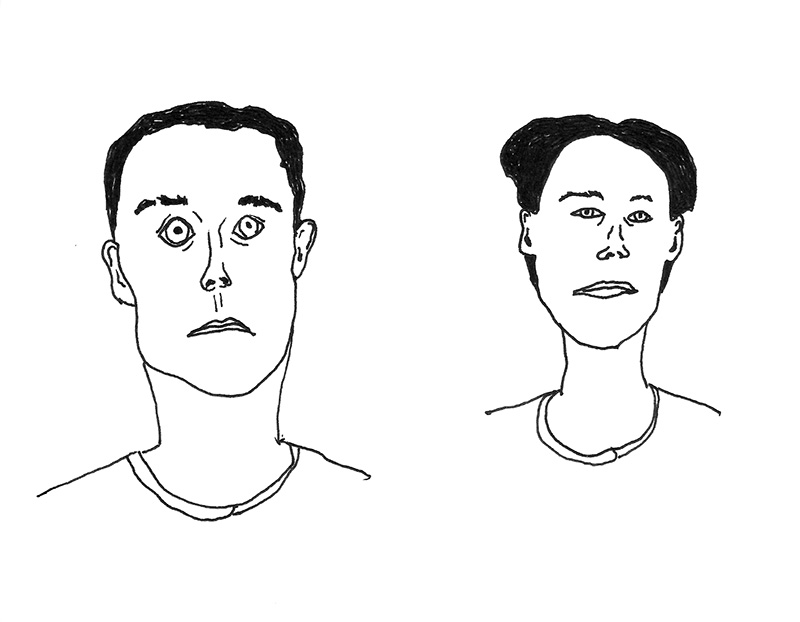

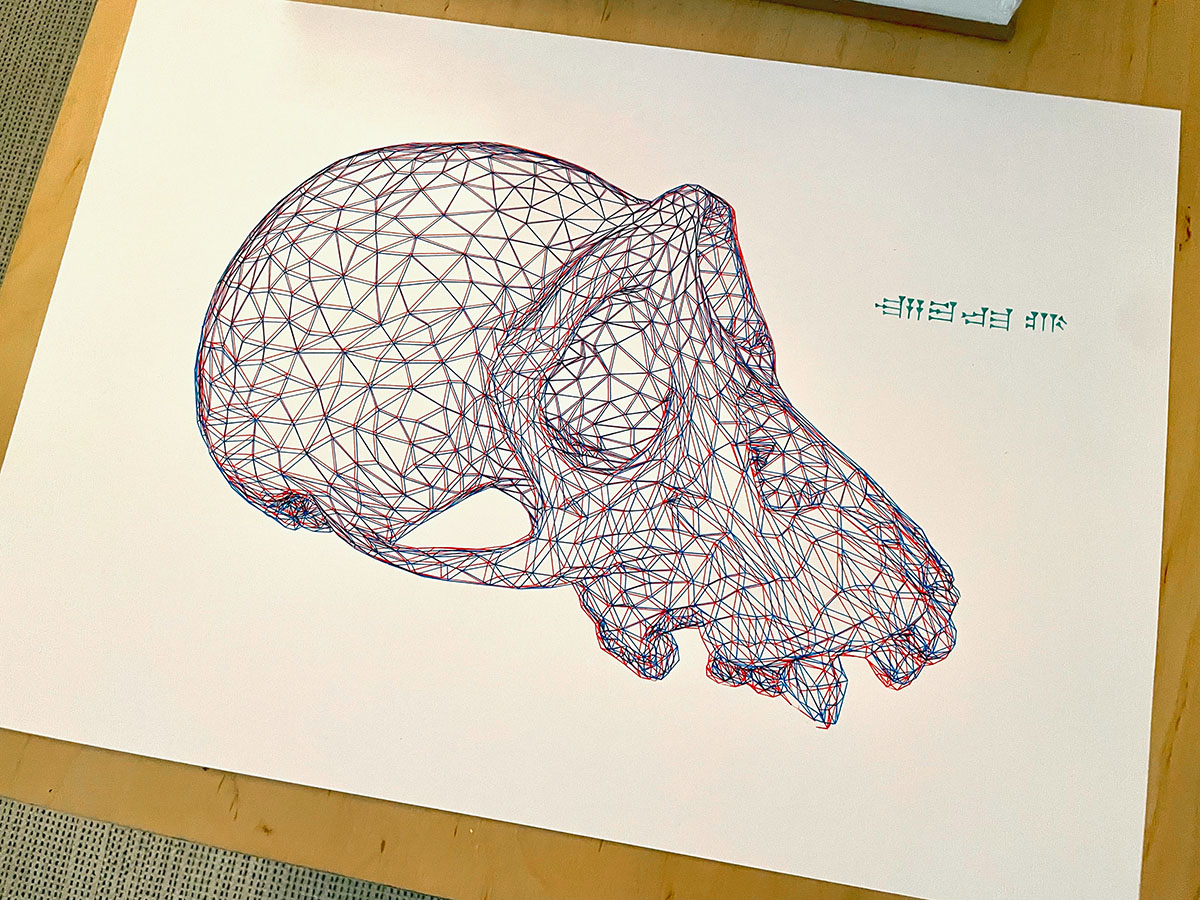

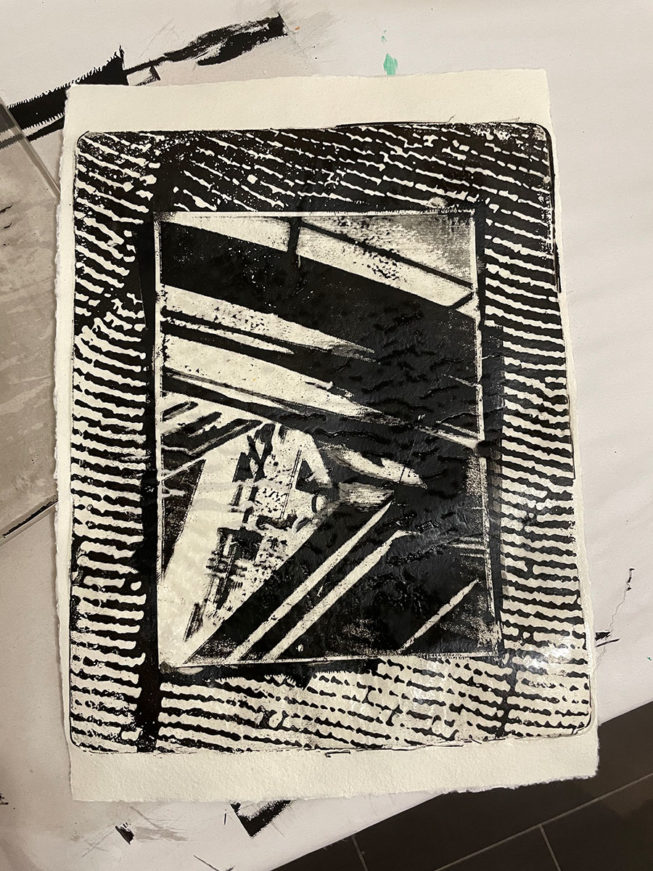

I continued printmaking and tried some new techniques. I still have a long way to go.

I made linocut prints in my kitchen and tried some multicolor techniques. It was difficult to get a good ink impression, and I wasted a lot of paper. I kept all the results, though. I also bought a Gelli plate and had some interesting success with multiple-generation imagery. I’ll keep working with it and already have a collection of images to use with it.

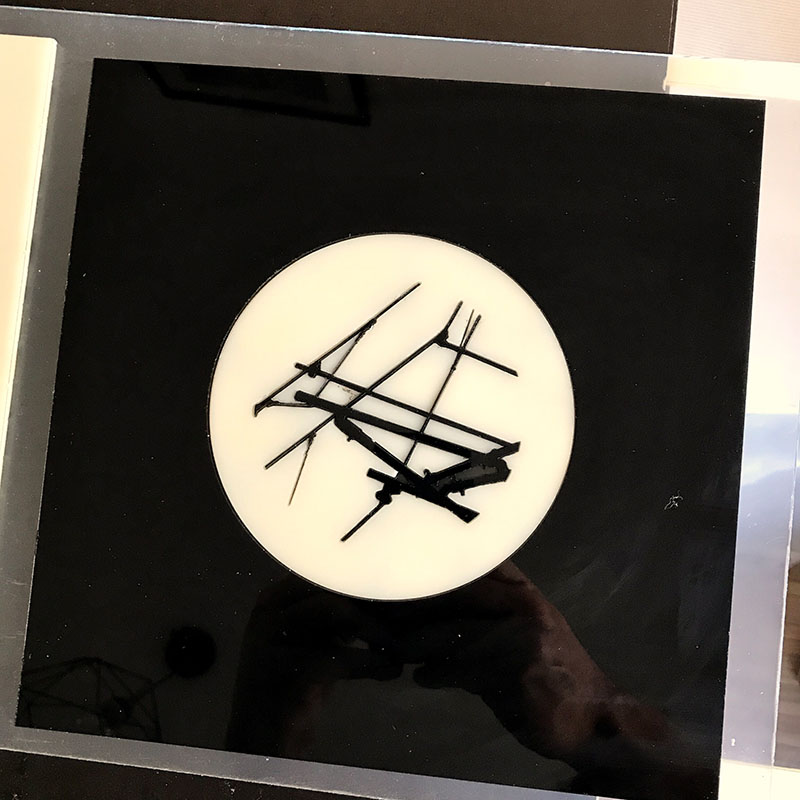

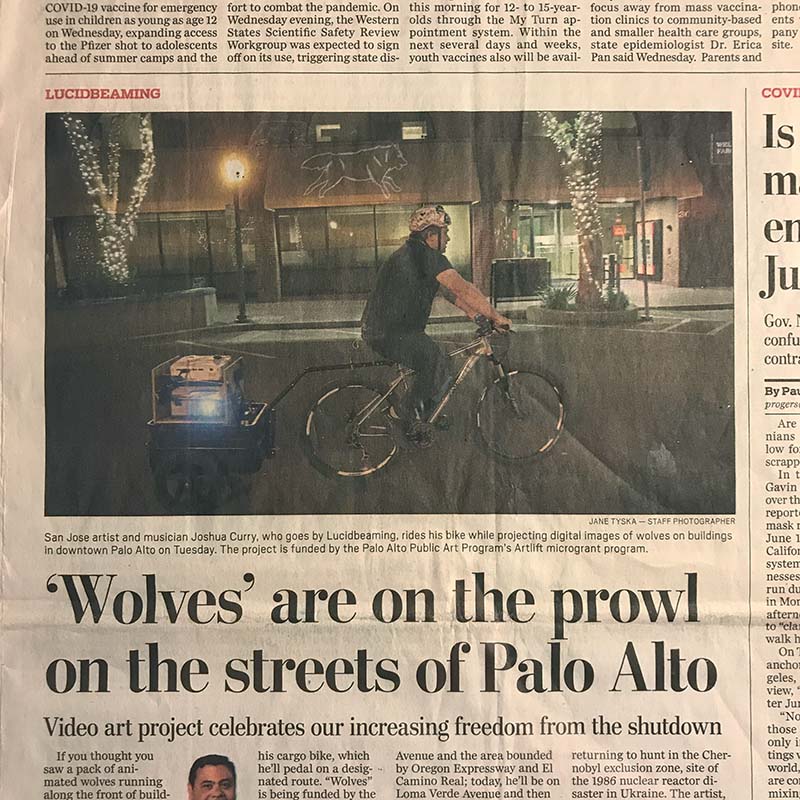

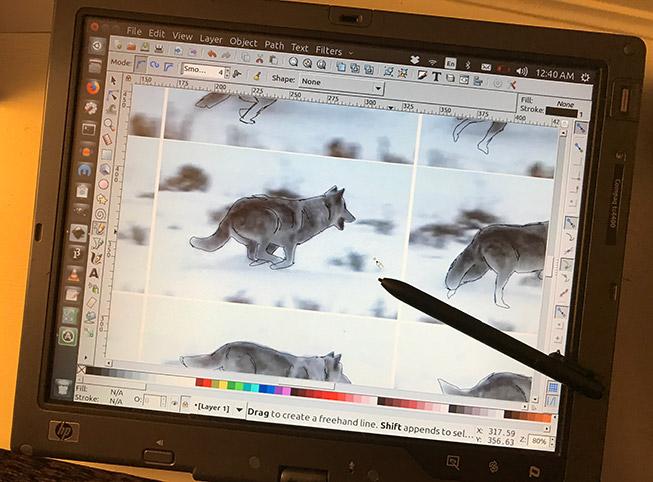

A new toy I got to use was an AxiDraw V3 pen plotter, borrowed from a colleague at the Creative Code Berlin Meetup. It uses real ink pens to draw paths sent from a computer. It takes the same kind of file I used for Wolves, so I had a good technical foundation.
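Pen plotters like the AxiDraw follow vector paths, and AxiDraw’s own software works with SVG files. Here’s a minimal sketch in Python of generating a plottable SVG. The spiral design, the function names, and the dimensions are hypothetical, chosen only for illustration.

```python
import math


def spiral_path(cx, cy, turns=10, spacing=3.0, steps_per_turn=90):
    """Return an SVG path "d" string tracing an Archimedean spiral.

    The radius grows by `spacing` units per turn, so the line never crosses itself.
    """
    total = turns * steps_per_turn
    commands = []
    for i in range(total + 1):
        theta = 2 * math.pi * i / steps_per_turn
        r = spacing * i / steps_per_turn  # equivalent to spacing * theta / (2 * pi)
        x = cx + r * math.cos(theta)
        y = cy + r * math.sin(theta)
        # The first point is a move ("M", pen travels up); the rest are lines ("L", pen down).
        commands.append(f"{'M' if i == 0 else 'L'} {x:.2f} {y:.2f}")
    return " ".join(commands)


def make_svg(d, width_mm=100, height_mm=100):
    """Wrap a path in an SVG document whose user units are millimetres."""
    return (
        '<svg xmlns="http://www.w3.org/2000/svg" '
        f'width="{width_mm}mm" height="{height_mm}mm" '
        f'viewBox="0 0 {width_mm} {height_mm}">\n'
        # fill="none": a plotter only traces strokes, it can't fill shapes.
        f'  <path d="{d}" fill="none" stroke="black" stroke-width="0.3"/>\n'
        "</svg>\n"
    )
```

Writing `make_svg(spiral_path(50, 50))` to a file such as `spiral.svg` gives a 100 mm square drawing with a 30 mm spiral centered on the page. Keeping everything as a single unfilled stroke means the pen draws the whole design without lifting.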

100 posts in 100 days: an Instagram experiment

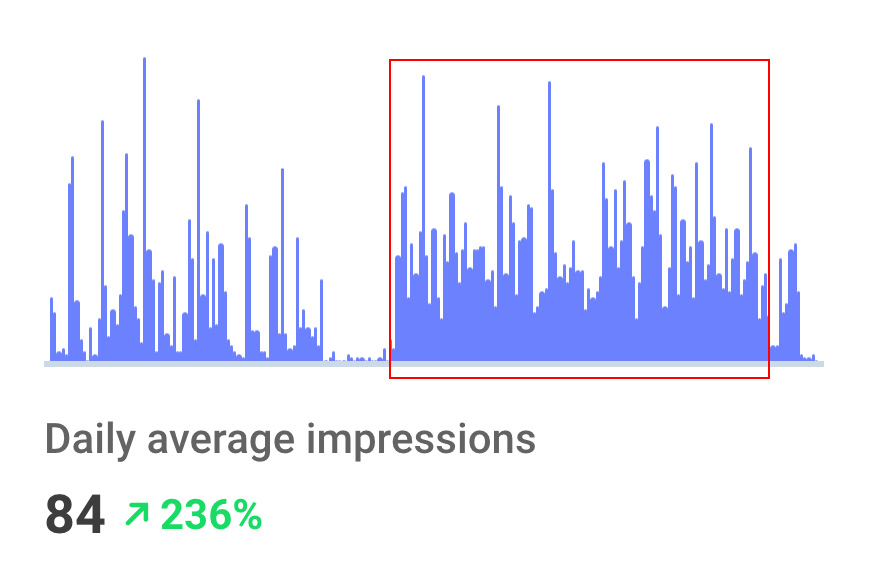

I wanted to know if all this posting on Instagram was worth it. It’s not.

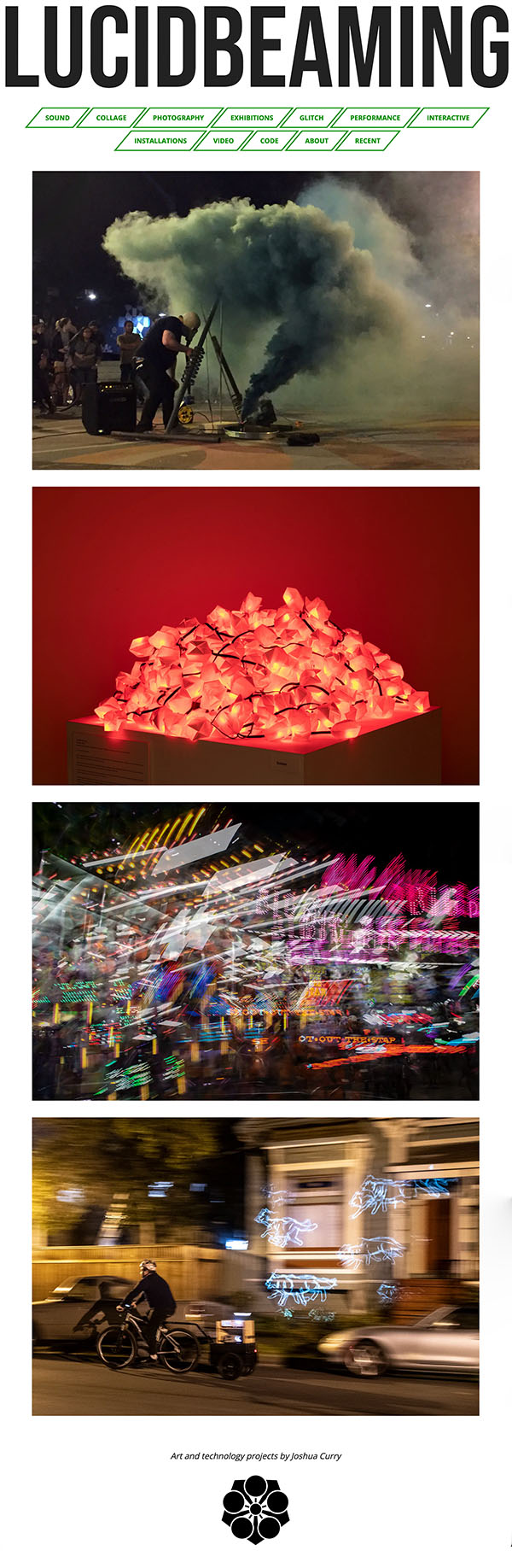

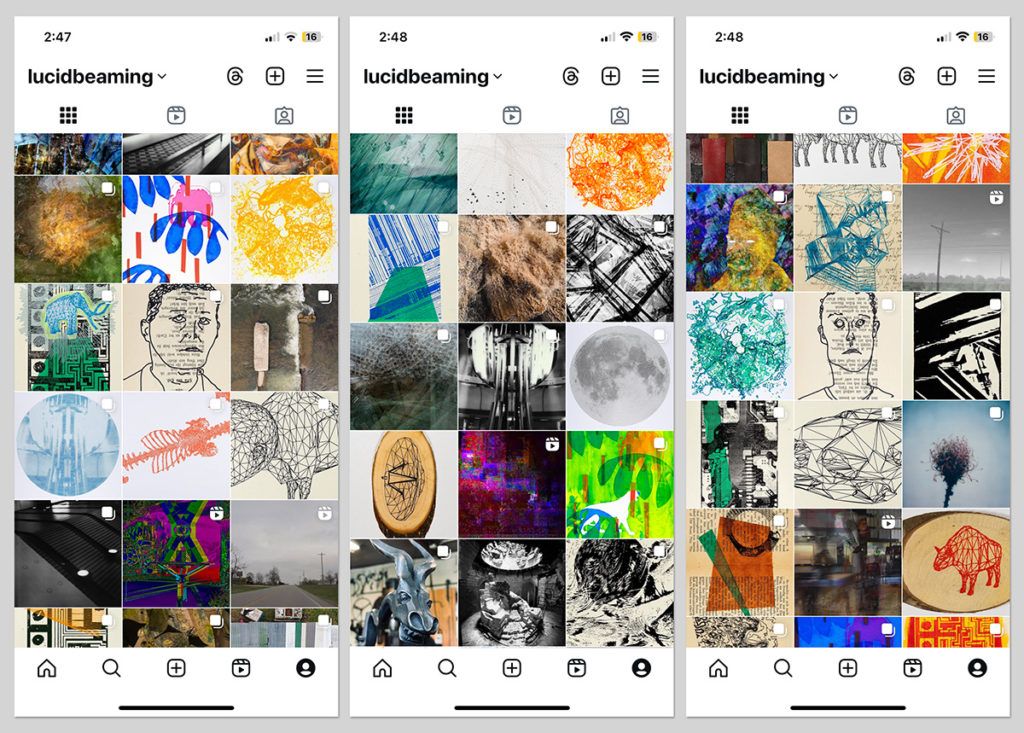

Having an online presence is a reality for most working artists, and the vast majority choose Instagram as their main platform. I have been on the internet since the mid-90s and have tried all kinds of ways of showing my work online. I don’t like Instagram at all, but it is a necessary evil. I spent a lot of effort building a website, but the way people look at content has totally changed in the past 10 years.

So, if I’m going to use it, I want to get some benefit from it. I don’t like the idea of just feeding an algorithm monster for its own profit. In the past, I’ve researched it, experimented with free and paid tools, and even paid to boost posts.

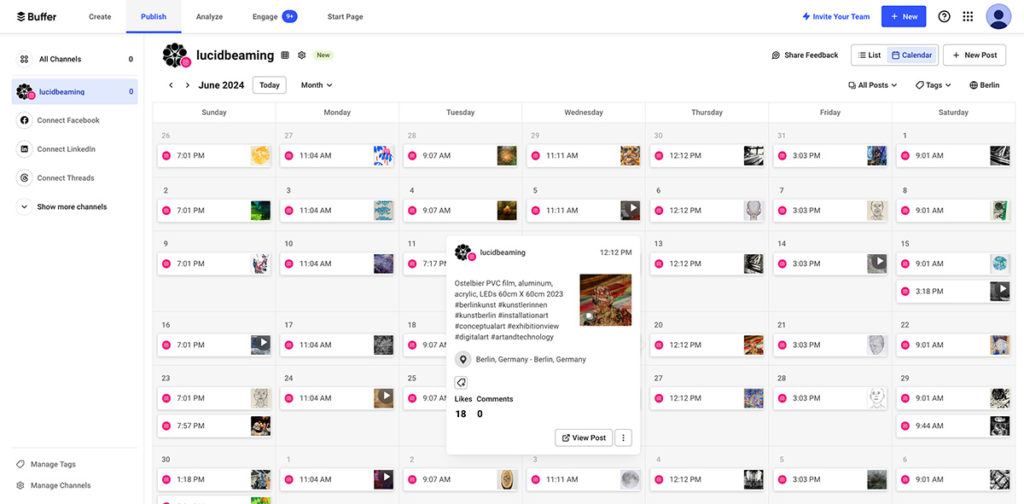

This year’s experiment was to try a posting service so I didn’t have to deal with Instagram every day. I had a marketing job years ago and we used Buffer to schedule posts months in advance. It kind of worked in that context.

I put together 100 slideshows, posts, and videos of my art. Then, on Buffer, I scheduled a post every day to see what happened.

So, what was the result? Not much. I got 40 new followers and a bunch of likes. Most of the engagement came from people who already knew me. I’m sure they got tired of seeing all those posts.

My takeaway is that none of the paid ways of doing Instagram really matter for individuals. It probably helps for big brands like Pepsi, but it feels pointless for us regular folks.

It really feels like a big scam, and I hate being a part of it. Still, I have used it as a kind of contact manager for other artists, and many art shows have begun with an Instagram DM. That’s undeniable. But the time I’ve spent posting has not been very useful.

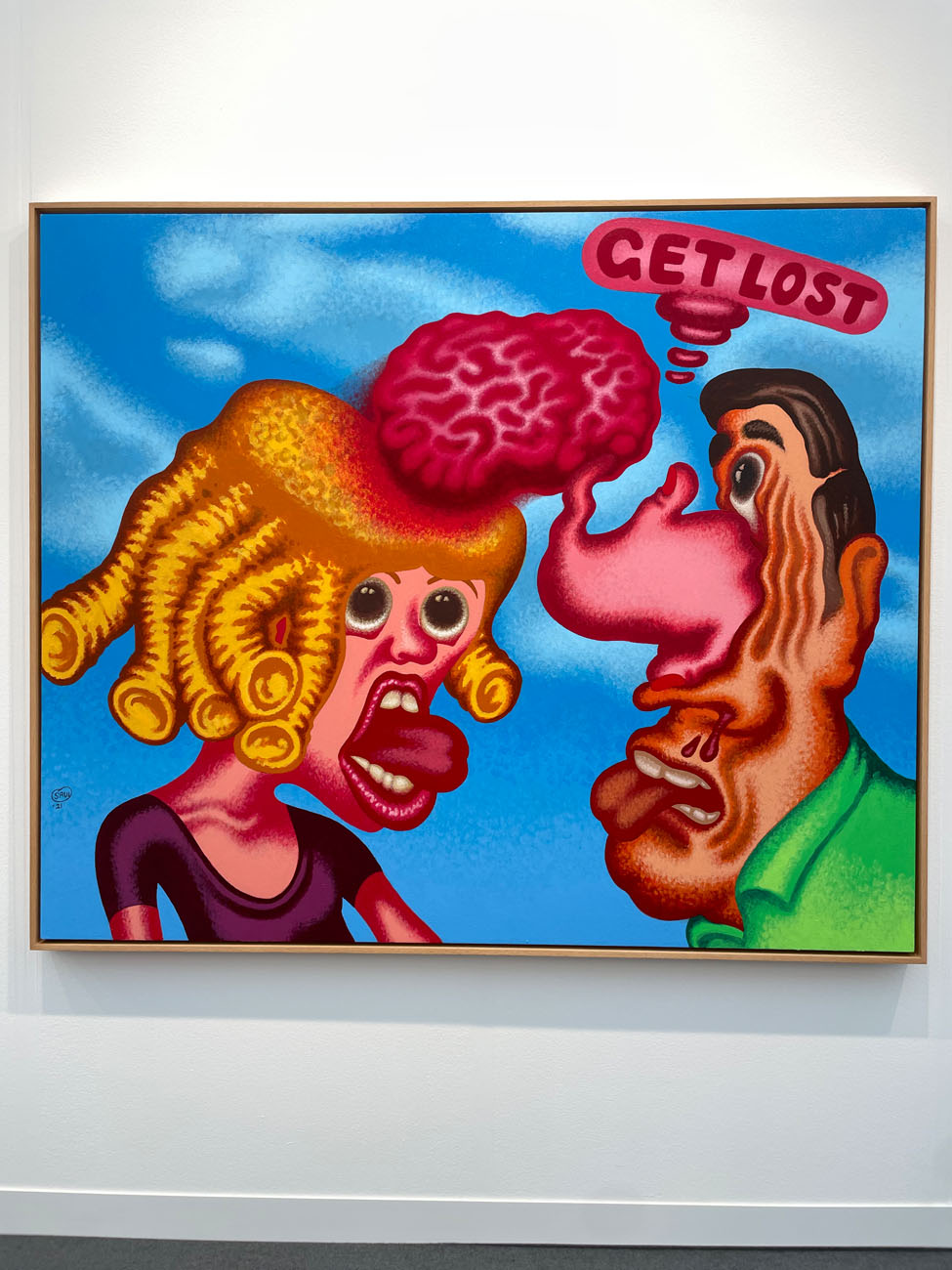

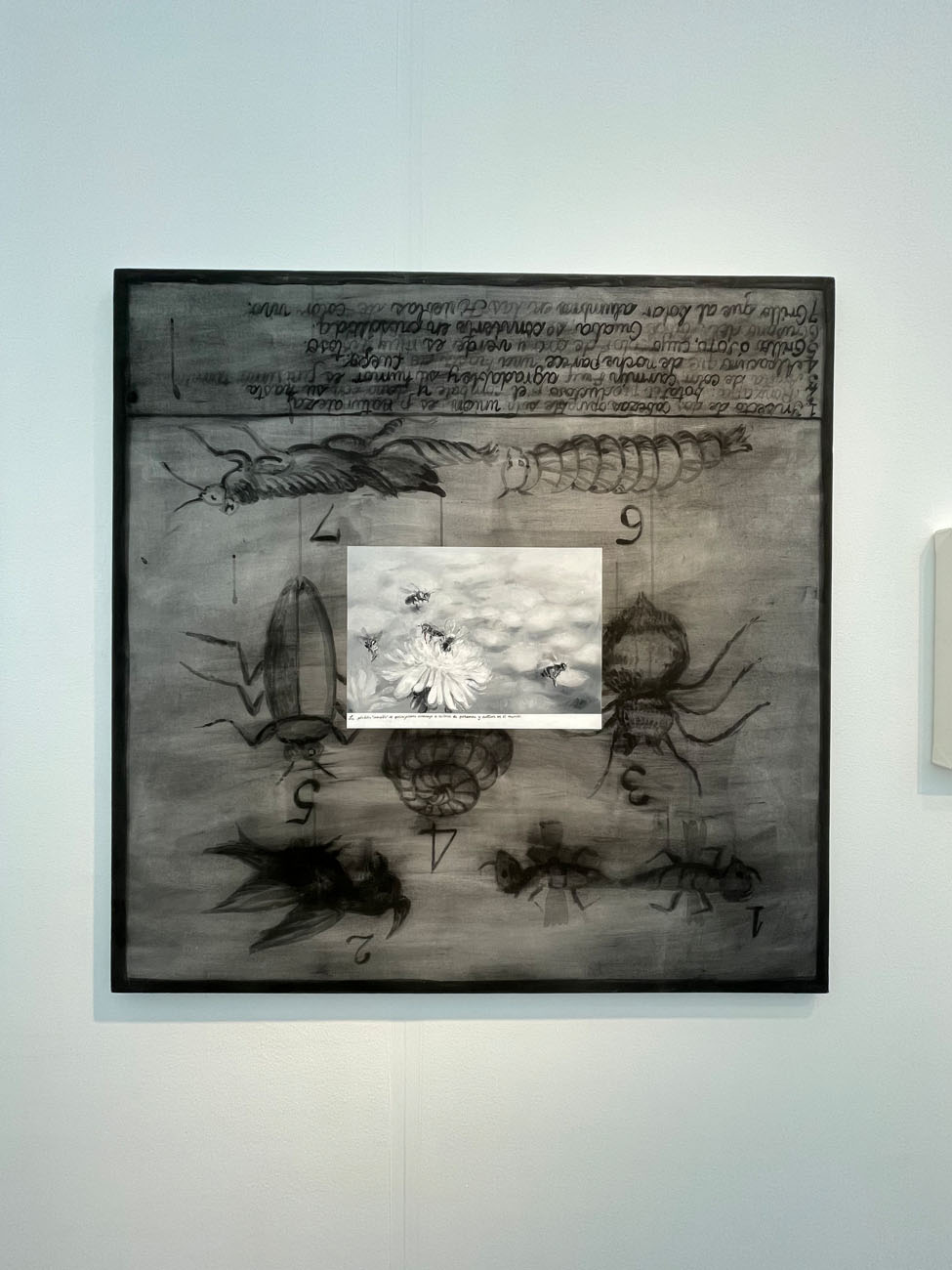

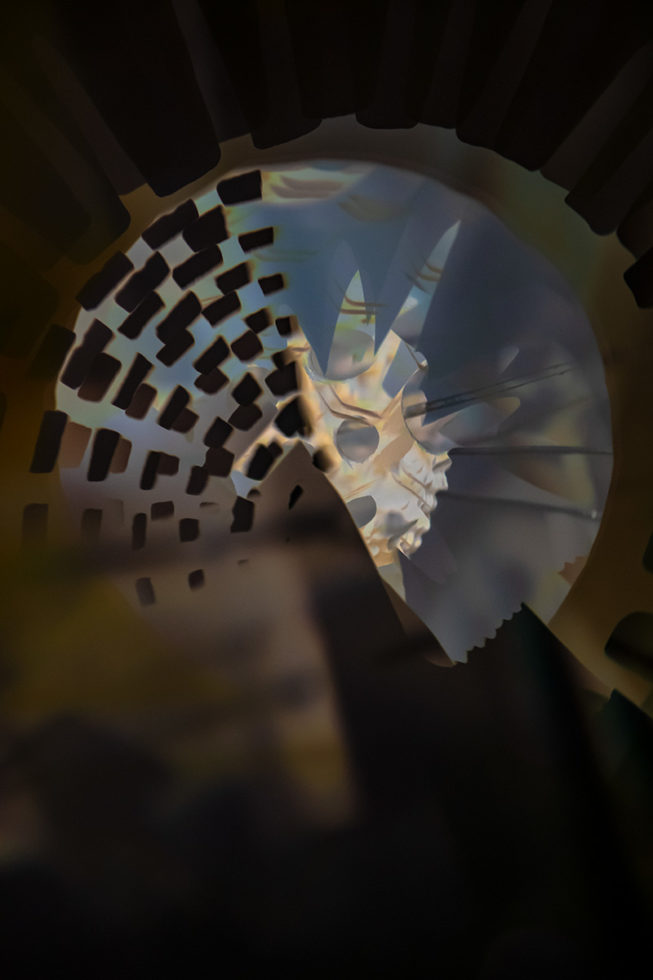

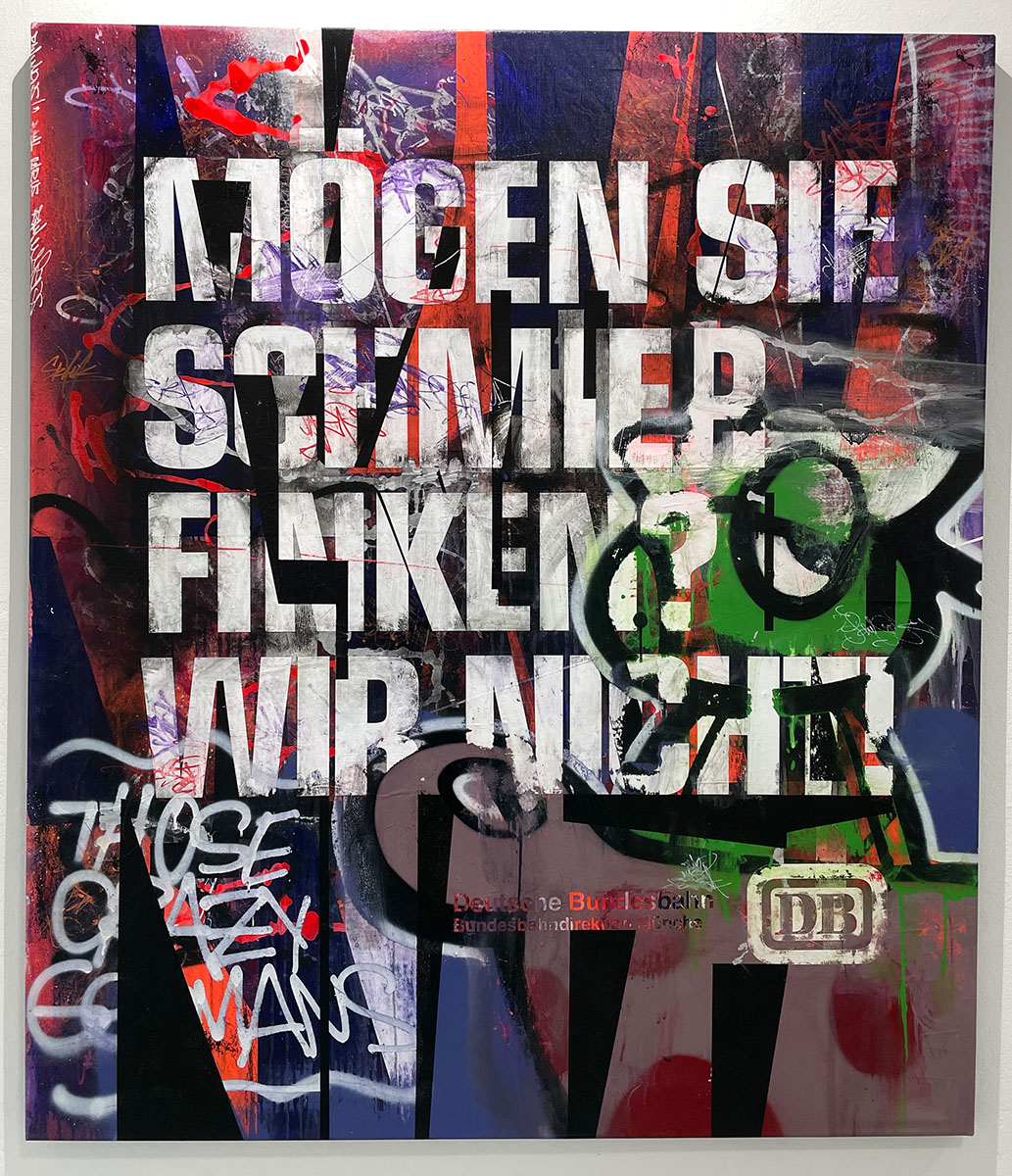

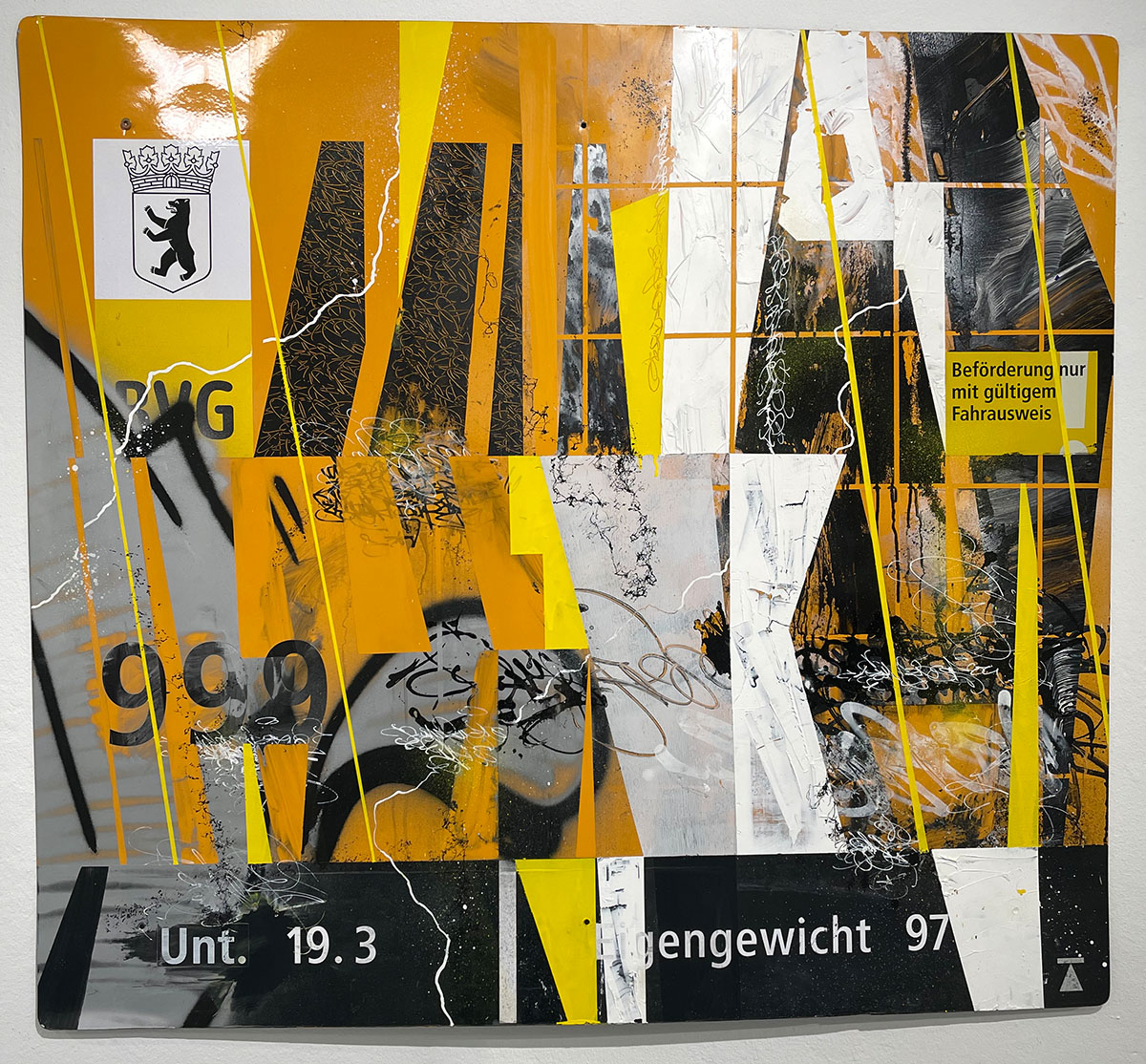

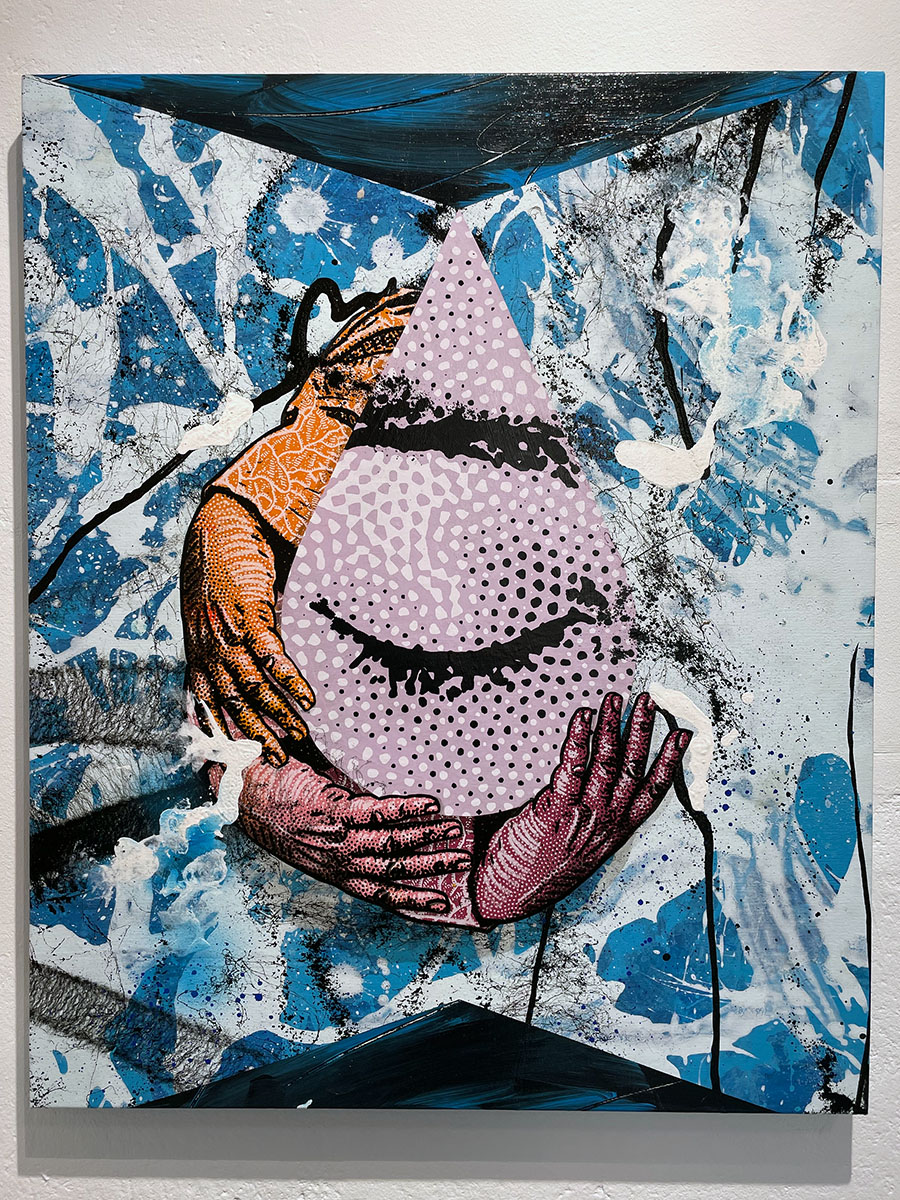

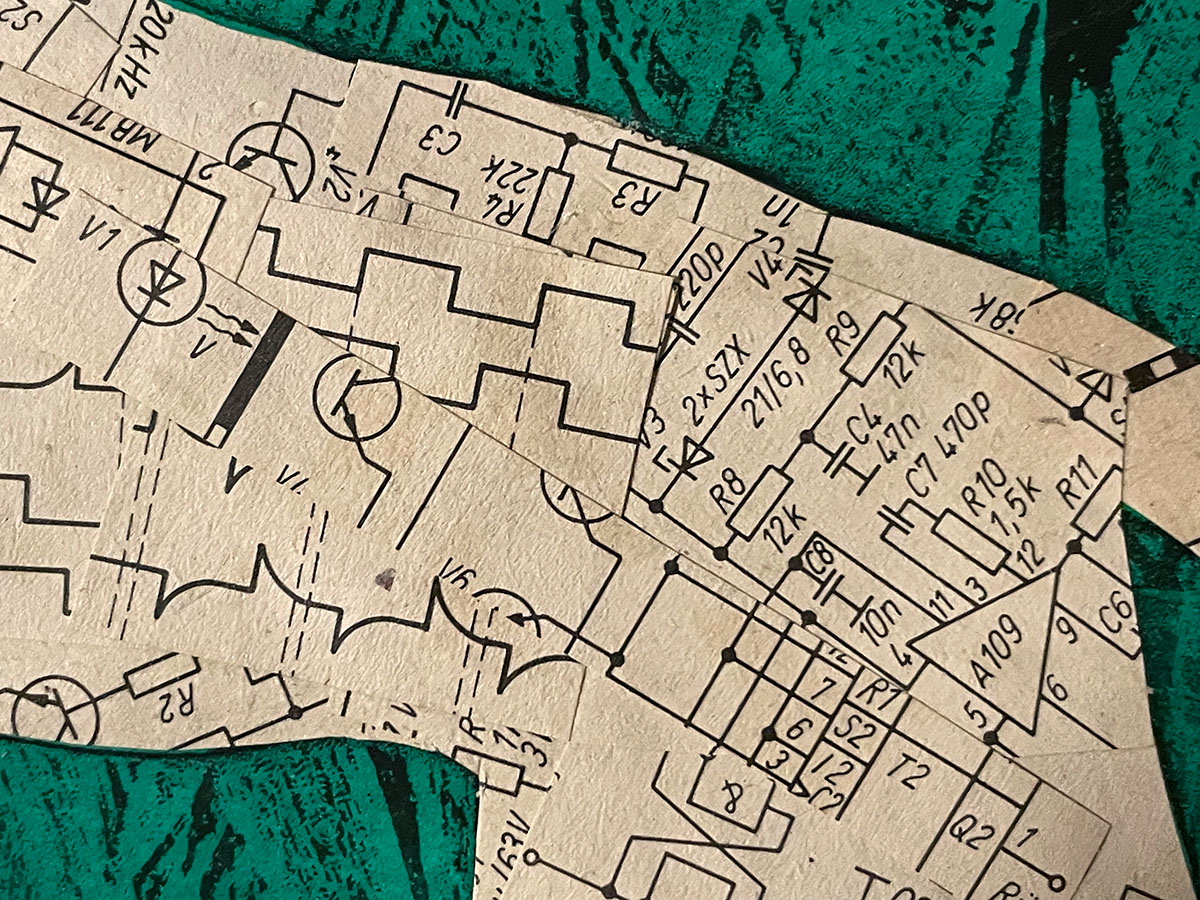

Layer Cake in Berlin

One of the best art shows I’ve seen since moving to Berlin was Layer Cake at Urban Nation. It was a collaborative show, with the main artist chopping, painting, and reassembling submissions from other artists. Apparently they are all famous street artists, but I didn’t know them. The work was at a very high aesthetic level, though.

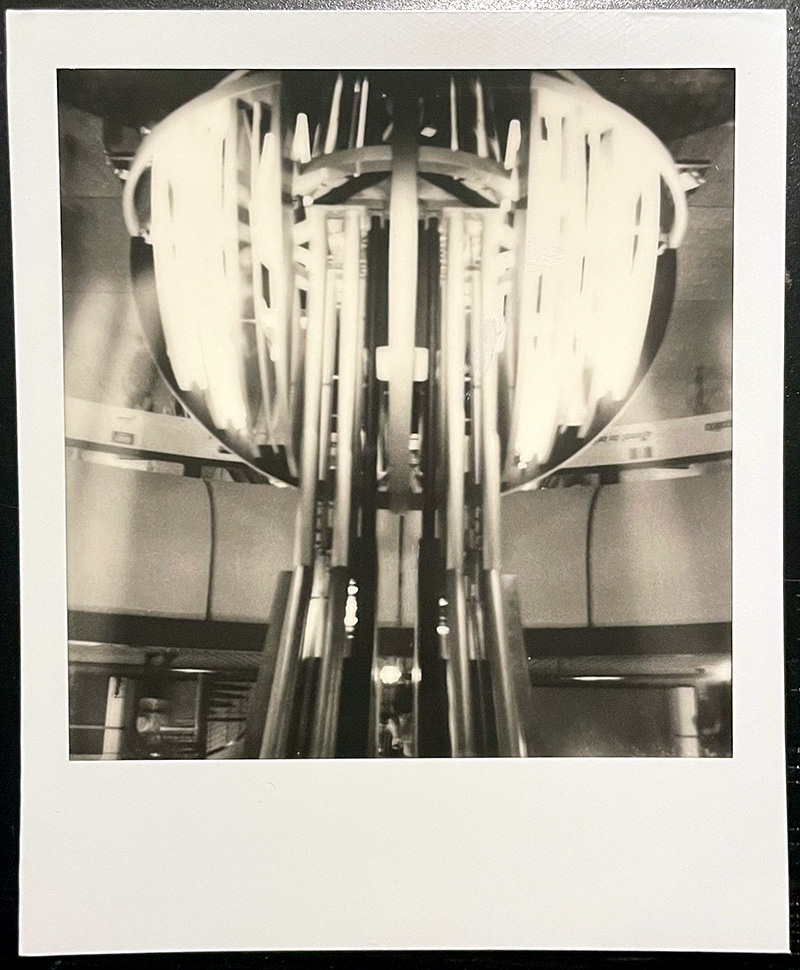

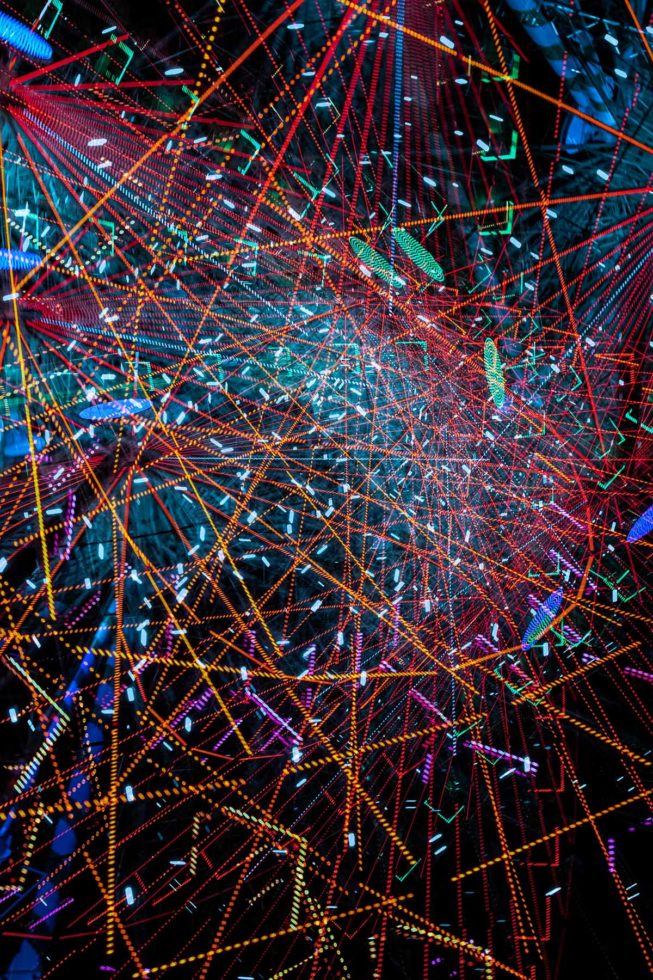

Superbooth24

The mother of all modular synth festivals is held each year in Berlin. It’s the Mecca of modular knob twiddlers.

I don’t own this kind of gear, but I like to play with it. I’m not big on commercial synths in general and prefer to build my own for specific audio aesthetics. But I’m not gonna deny these machines are badass and look very cool. They sound cool, too.

Norwegian heavy metal

My friend Benjamin Kjellman-Chapin is a blacksmith who lives in Nes Verk, Norway. We went to the Atlanta College of Art together back in the mid-90s. Our paths diverged after school, and I didn’t see him again until this year.

Ben was immortalized back then in a painting my friend Neil Carver made, based on a photo I took of him. Neil made a bunch of paintings from my photos and I always thought the one of Ben was one of the best.

He ended up in Norway and has built an amazing life as a blacksmith with his wife, Monica. They have a blacksmithing shop next to the Næs Jernverksmuseum, a few hours outside of Oslo. Never in a million years did I think I would get to visit him, but being in Berlin made the trip much more practical and realistic. We managed to time it so I was there for a regional blacksmithing festival. It was a whole other world, very different from my tech art life in Berlin.

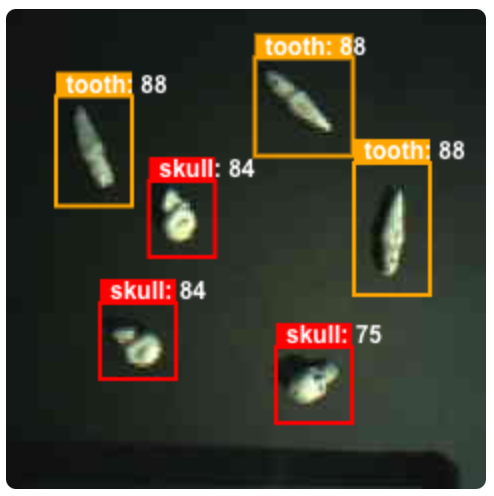

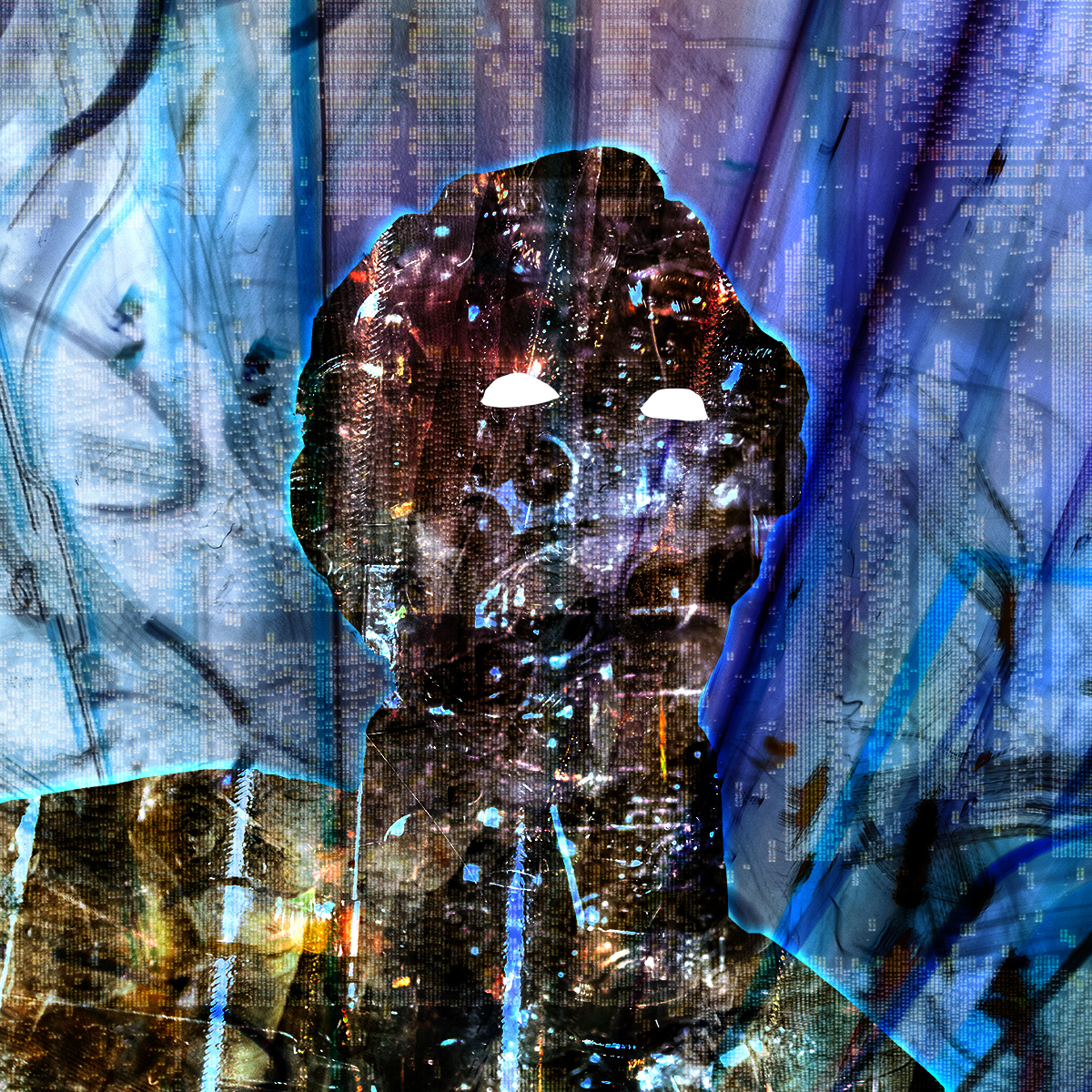

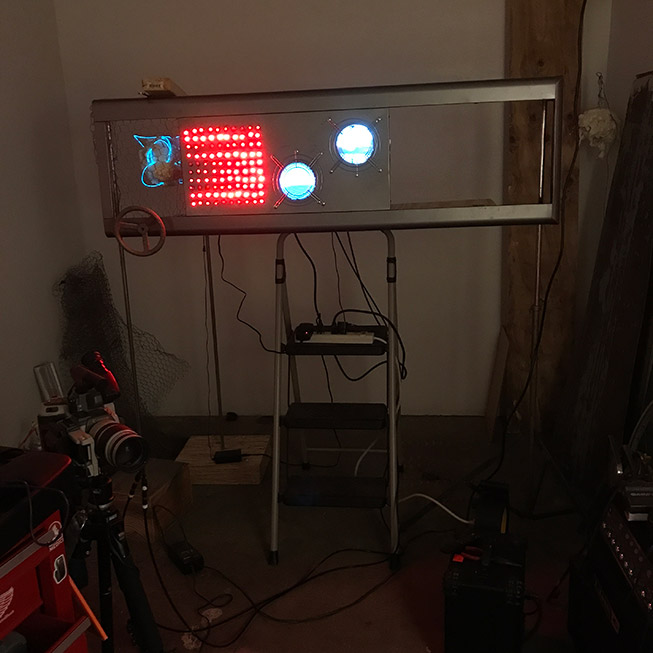

Skulls: a more successful AI project

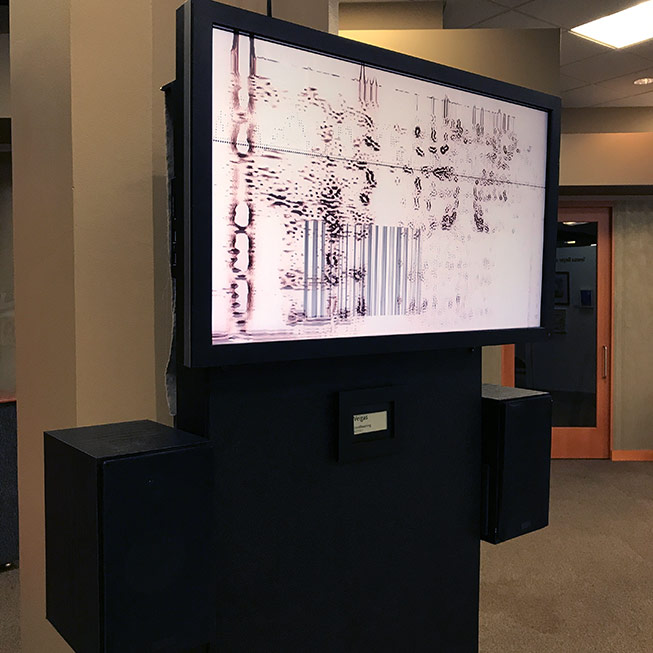

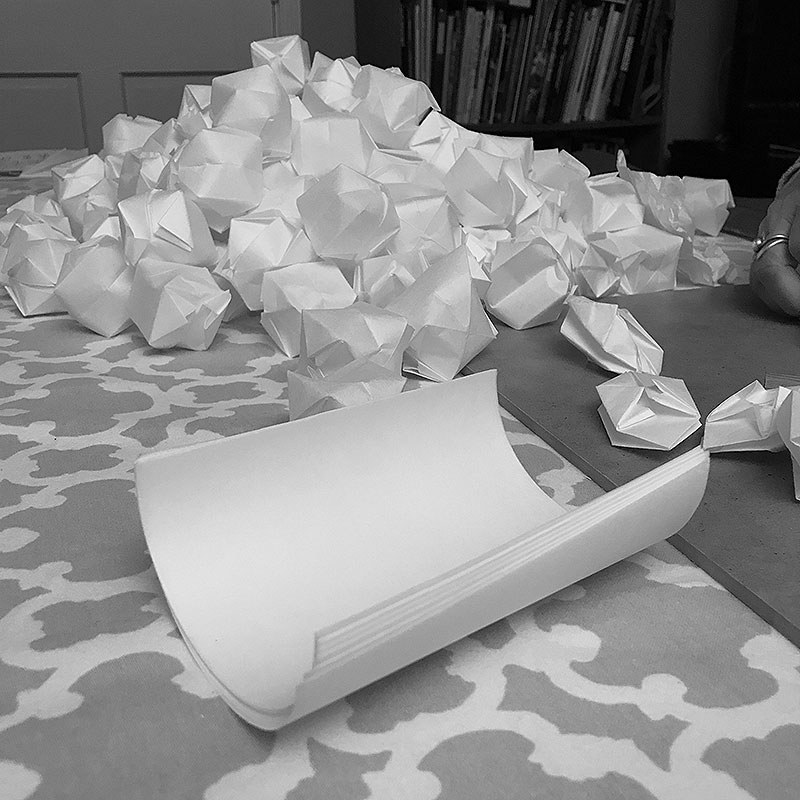

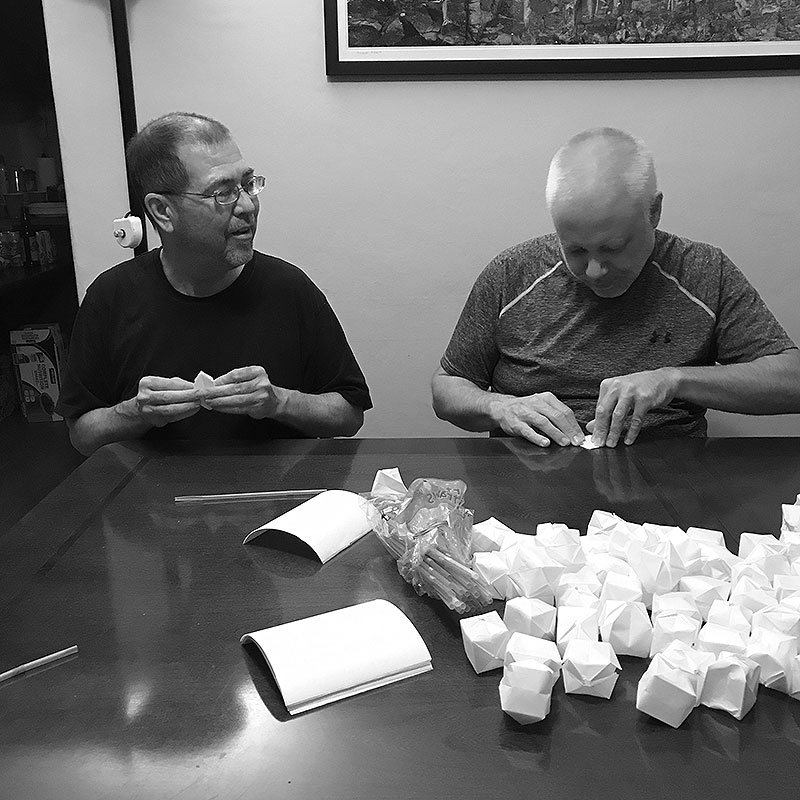

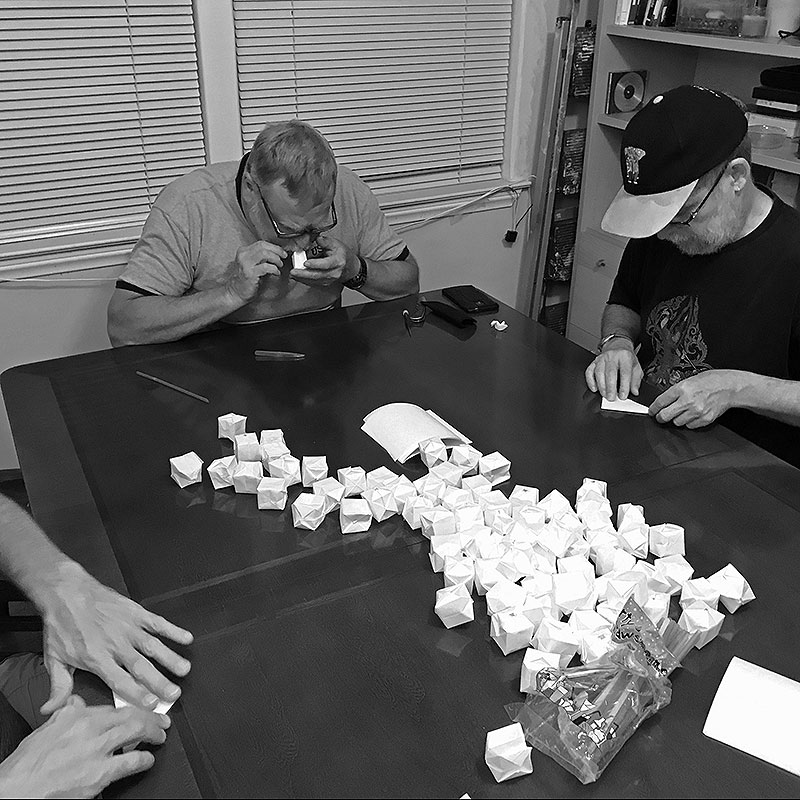

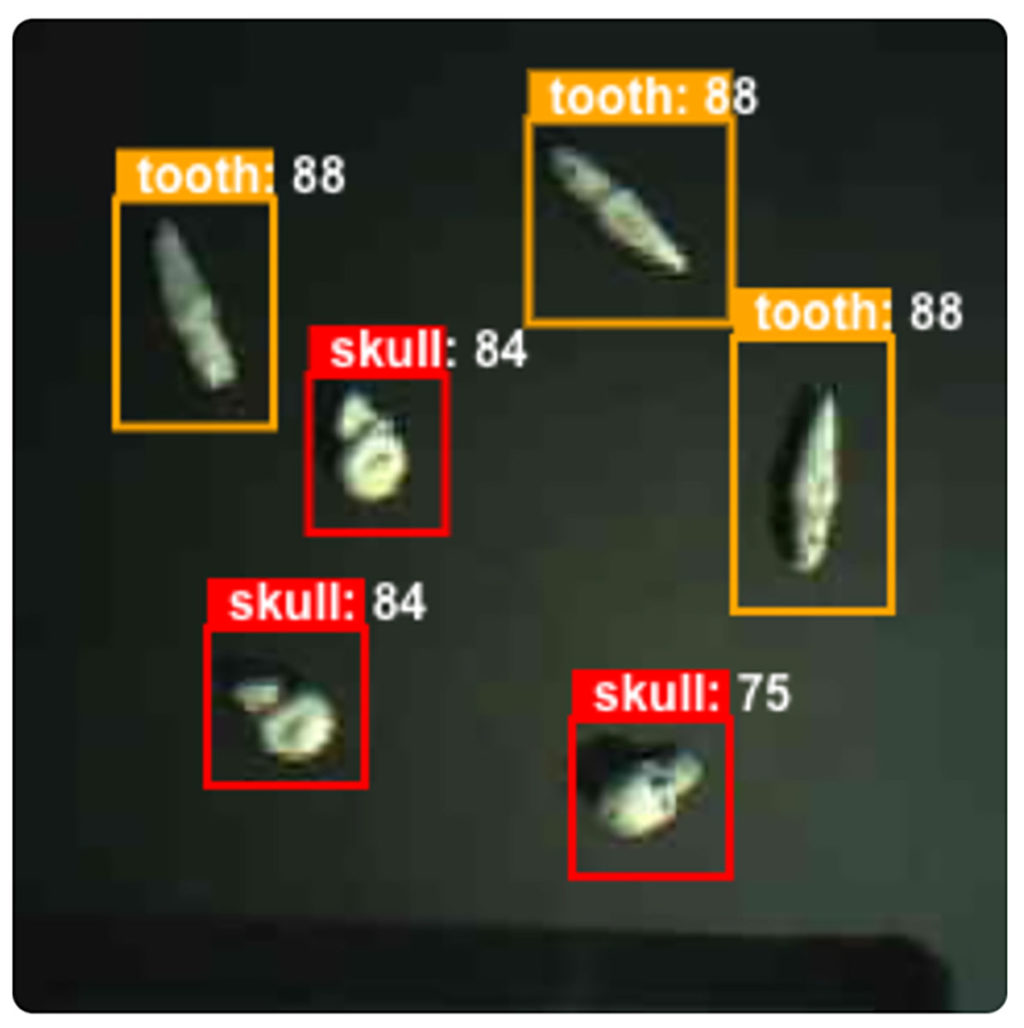

I built a project to identify and track little plastic skulls and then play music based on their position. It won a prize.

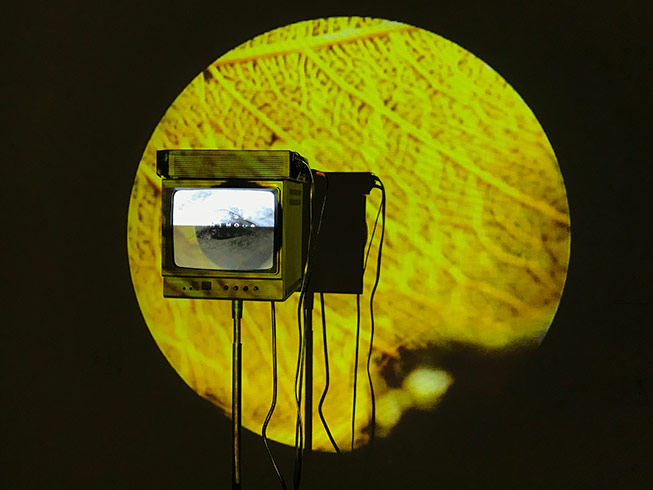

The idea came from a more primitive version called Oracle that I made back in San Jose. I am always looking for new ways of performing electronic music that don’t depend on a laptop or MIDI controller. The approach was inspired by a movie scene in which an oracle tosses chicken bones to tell the future.

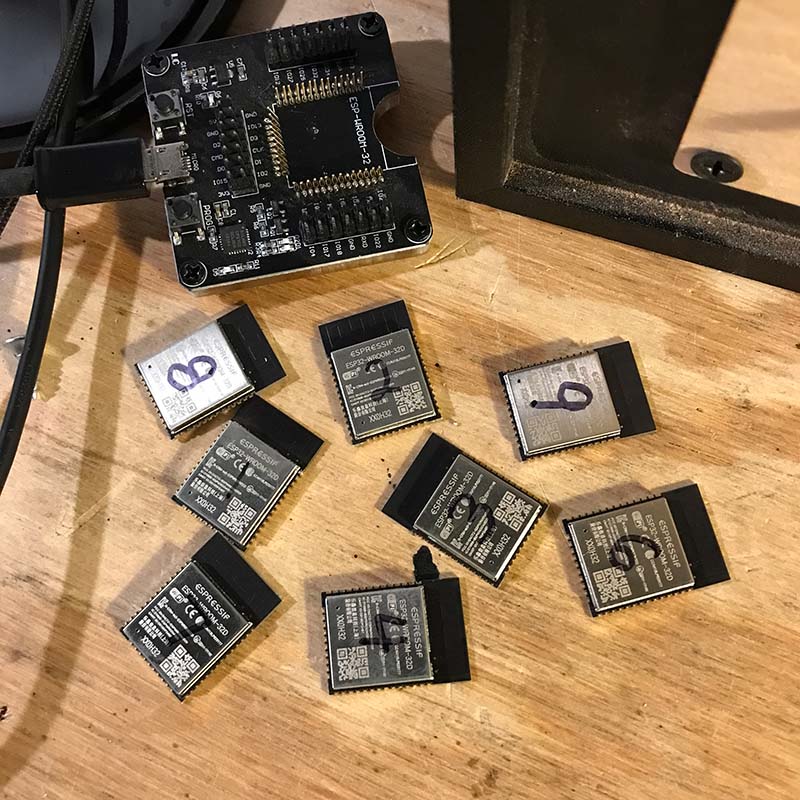

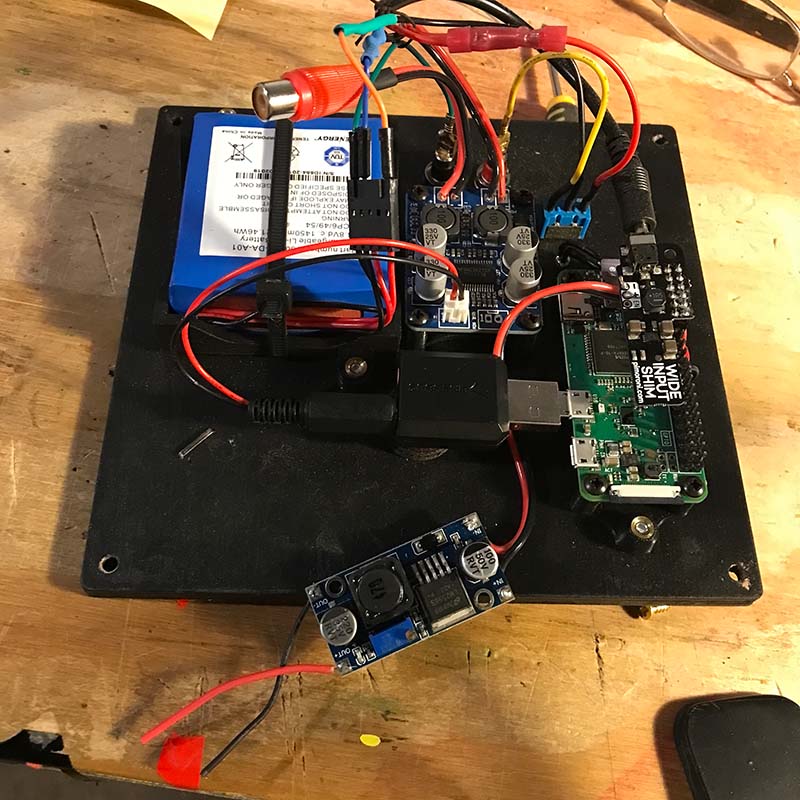

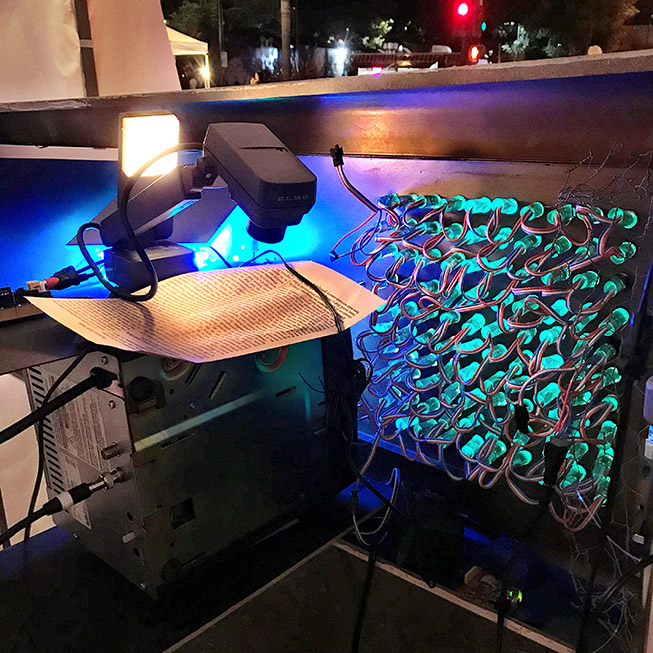

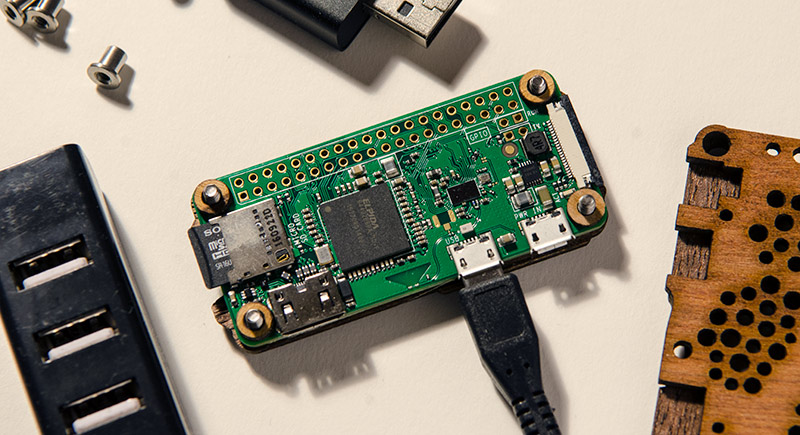

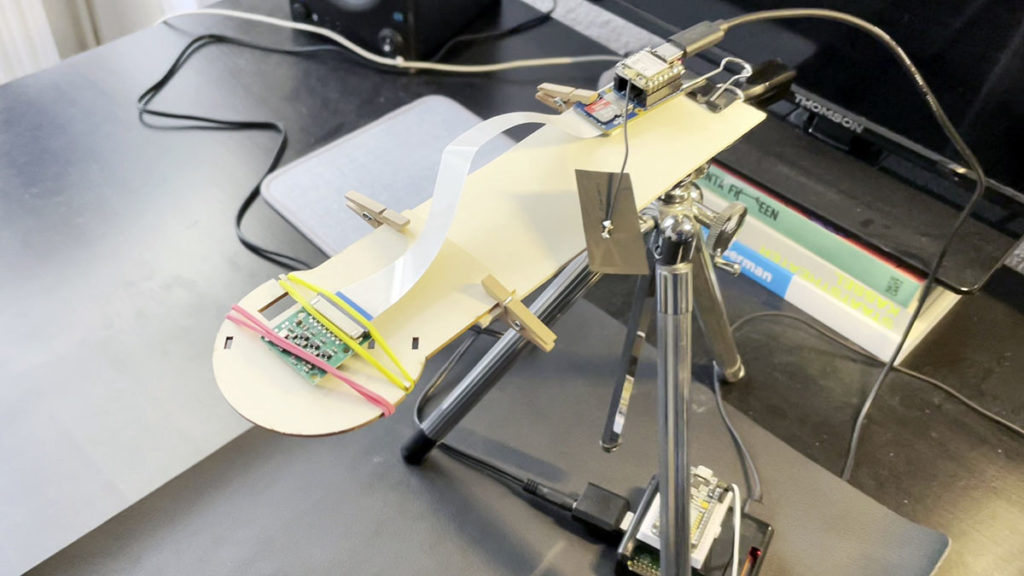

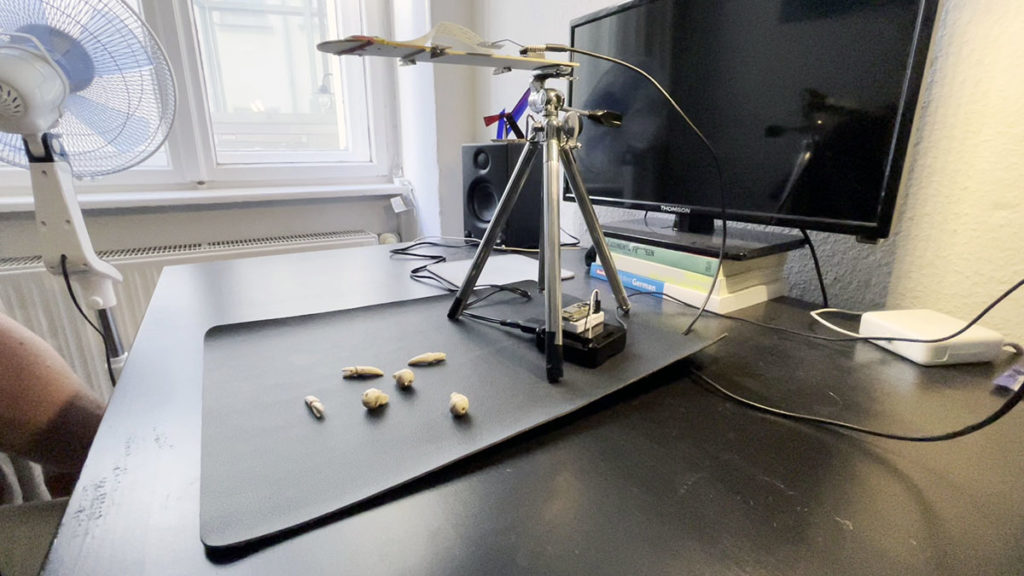

The earlier version used simple image thresholding within a predefined grid. It worked but was susceptible to lighting changes. The new version uses a Grove Vision AI Module V2 running a custom model I trained myself. It turned out pretty well.
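To show why a fixed threshold struggles with changing light, here’s a minimal sketch of grid-based detection in Python with NumPy. It assumes light objects on a dark background, and the function name, grid size, and threshold values are hypothetical rather than what Oracle actually used.

```python
import numpy as np


def occupied_cells(frame, rows=4, cols=4, threshold=128, min_fraction=0.2):
    """Return (row, col) for each grid cell where enough pixels exceed a fixed brightness.

    frame: 2-D uint8 grayscale image. Assumes light objects on a dark background.
    """
    h, w = frame.shape
    # A single fixed threshold: this is what makes the method sensitive to lighting.
    mask = frame > threshold
    cells = []
    for r in range(rows):
        for c in range(cols):
            y0, y1 = r * h // rows, (r + 1) * h // rows
            x0, x1 = c * w // cols, (c + 1) * w // cols
            # Fraction of "bright" pixels in this cell.
            if mask[y0:y1, x0:x1].mean() >= min_fraction:
                cells.append((r, c))
    return cells
```

Brightening the whole frame (a lamp switched on, say) pushes background pixels over the fixed threshold, and every cell reads as occupied. A trained detection model looks for object shapes instead, so it is far less prone to that failure.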

To use it, I scatter a handful of little plastic teeth and skulls underneath a camera connected to the AI module. The module identifies the objects and sends their positions to a Raspberry Pi, which interprets them and triggers a software synthesizer running on Linux.
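Here’s a minimal sketch of how the Raspberry Pi side might turn detections into notes. The `Detection` format, the class names, the pentatonic scale, and the value ranges are all hypothetical assumptions, not the project’s actual code or the module’s real output format. It produces MIDI-style (note, velocity, channel) tuples that a software synth could consume.

```python
from dataclasses import dataclass

# Hypothetical scale: minor pentatonic, as semitone offsets from the root.
PENTATONIC = [0, 3, 5, 7, 10]


@dataclass
class Detection:
    label: str  # hypothetical class name, e.g. "skull" or "tooth"
    x: float    # normalized horizontal position, 0.0 (left) to 1.0 (right)
    y: float    # normalized vertical position, 0.0 (top) to 1.0 (bottom)


def to_note(det, root=48, octaves=3):
    """Map one detection to a (midi_note, velocity, channel) tuple."""
    steps = len(PENTATONIC) * octaves
    # Quantize x onto scale degrees so every position lands on an in-key pitch.
    degree = min(int(det.x * steps), steps - 1)
    octave, step = divmod(degree, len(PENTATONIC))
    note = root + 12 * octave + PENTATONIC[step]
    # Objects nearer the bottom of the frame play louder (velocity 40 to 127).
    velocity = 40 + round(det.y * 87)
    # Skulls and teeth go to different synth voices.
    channel = 0 if det.label == "skull" else 1
    return note, velocity, channel


def detections_to_events(detections):
    """Read the scatter left to right, like a score."""
    return [to_note(d) for d in sorted(detections, key=lambda d: d.x)]
```

Quantizing to a scale is a design choice: any random toss of skulls lands in key, so the result sounds musical rather than arbitrary.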

This project won first prize in a competition sponsored by the manufacturer of that module.

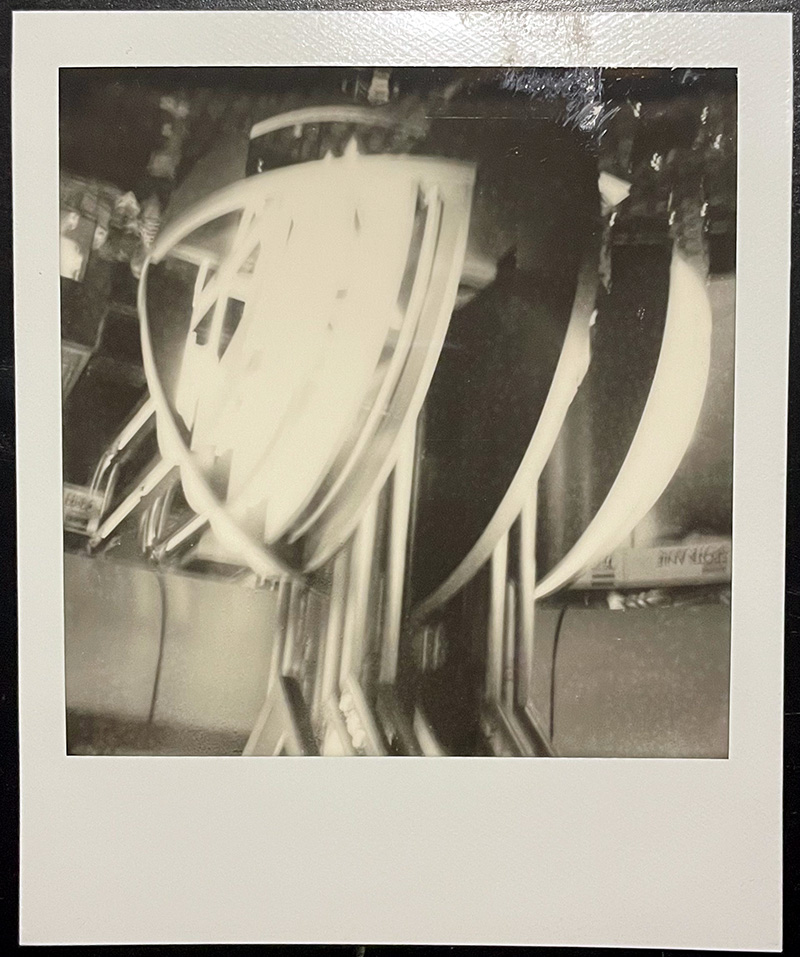

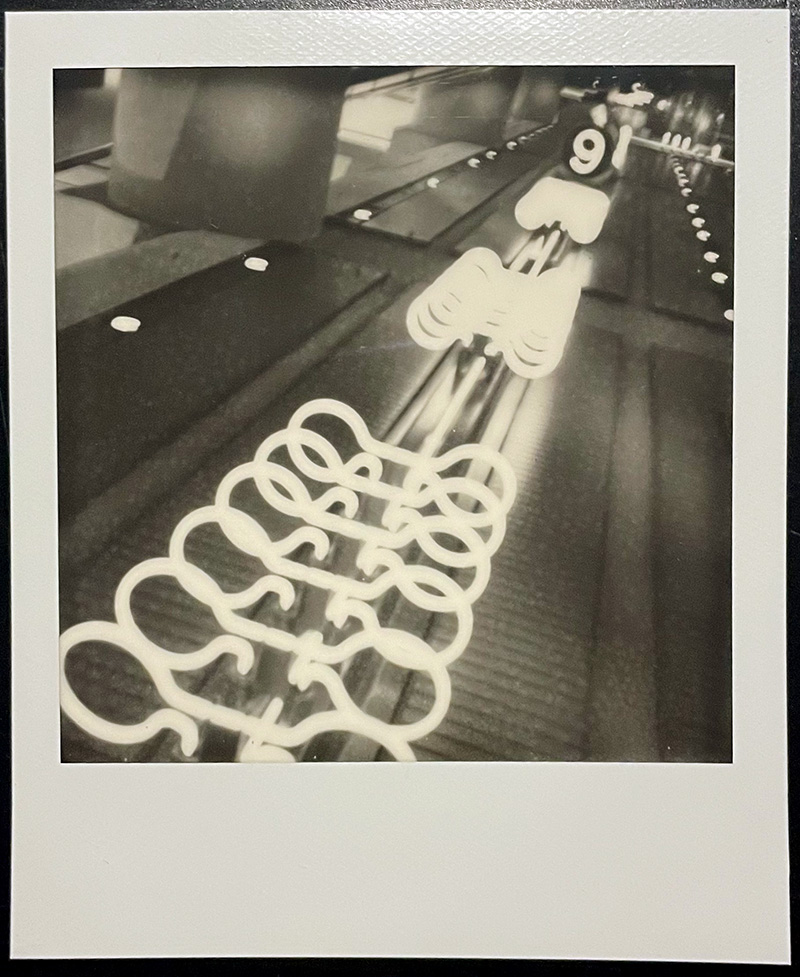

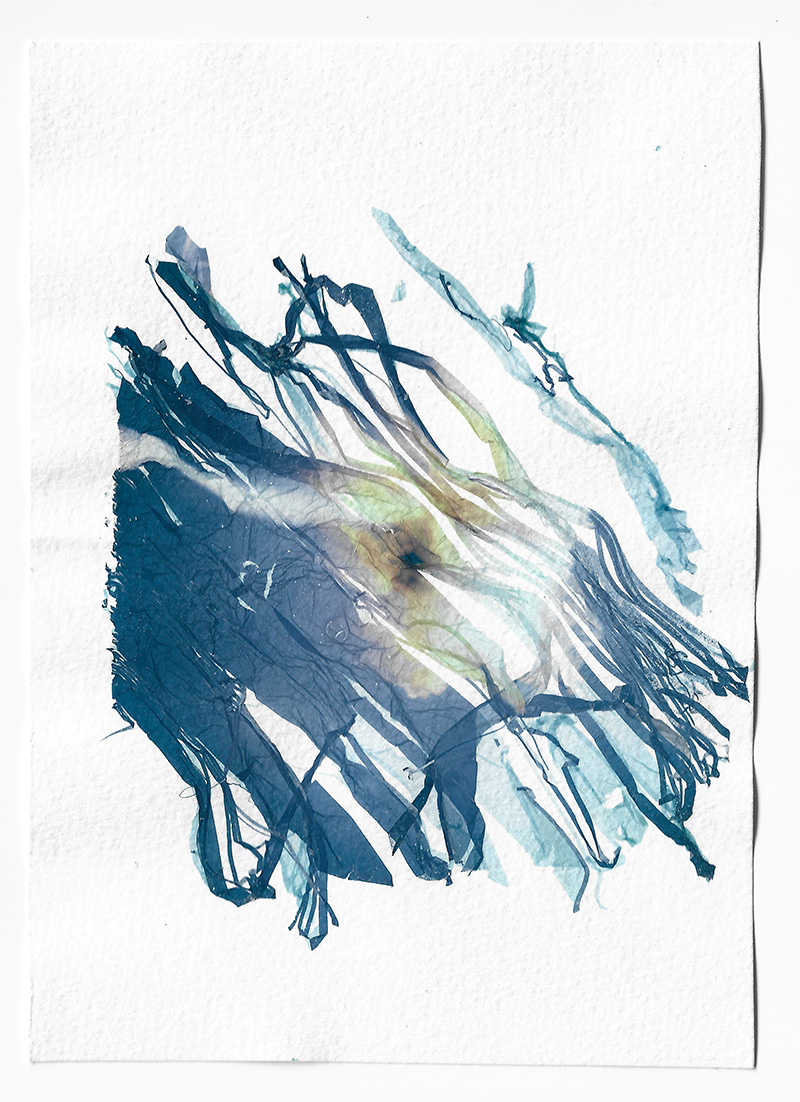

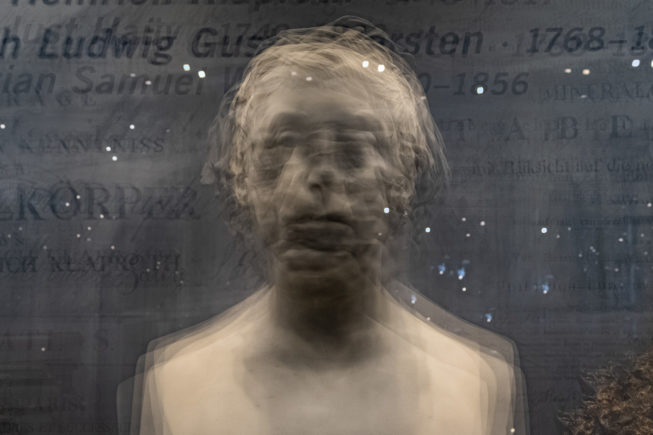

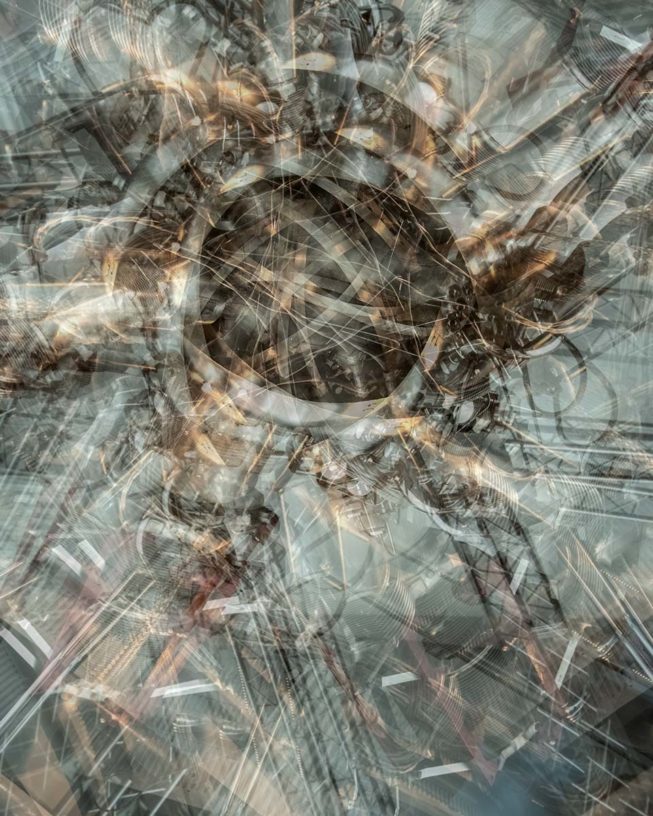

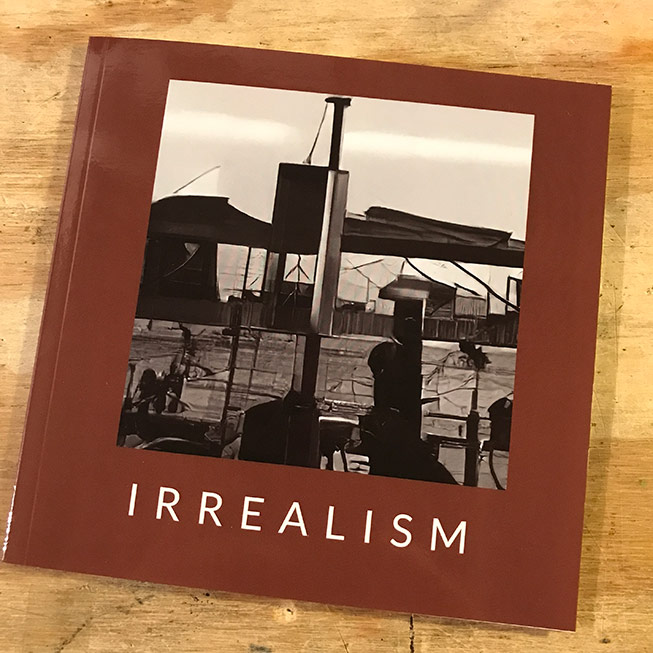

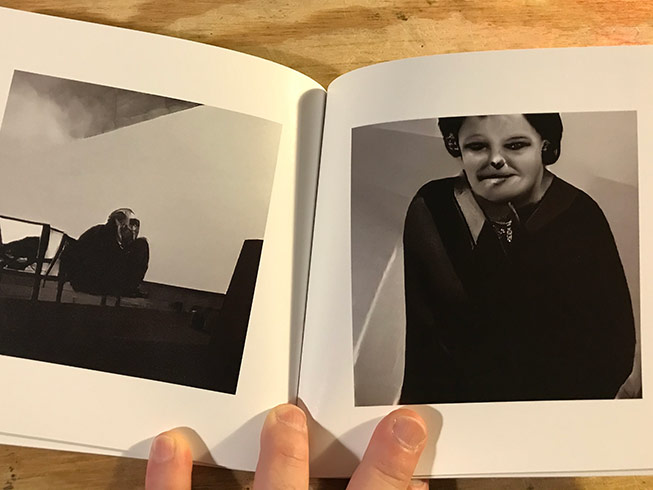

Experimental Photography Festival in Barcelona

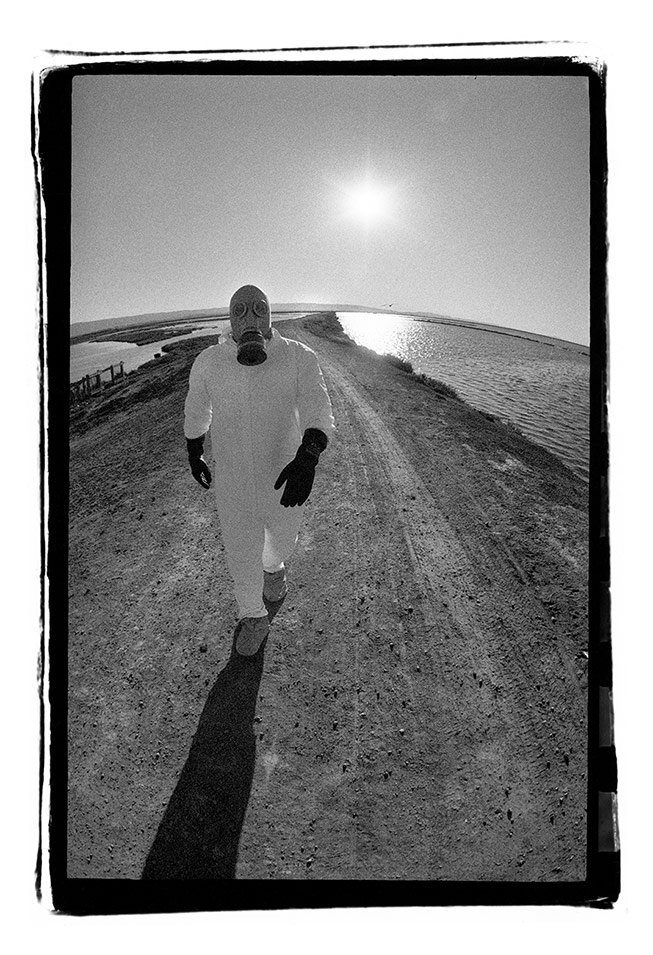

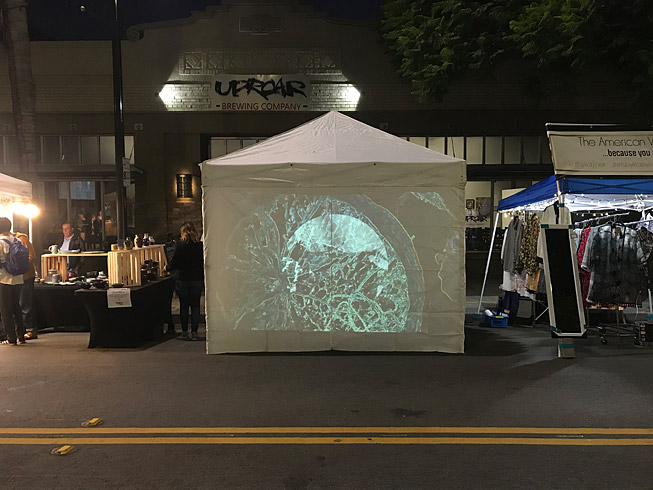

I had a solo show of recent experimental photography in Barcelona.

The Experimental Photography Festival is an analog-centric gathering of people who use alternative processes. That used to mean non-silver processes like cyanotype, but it has expanded to include all kinds of photographic techniques. This community features few computer-based images and definitely no AI work. It was refreshing and inspiring to be surrounded by such genuine experimentation.

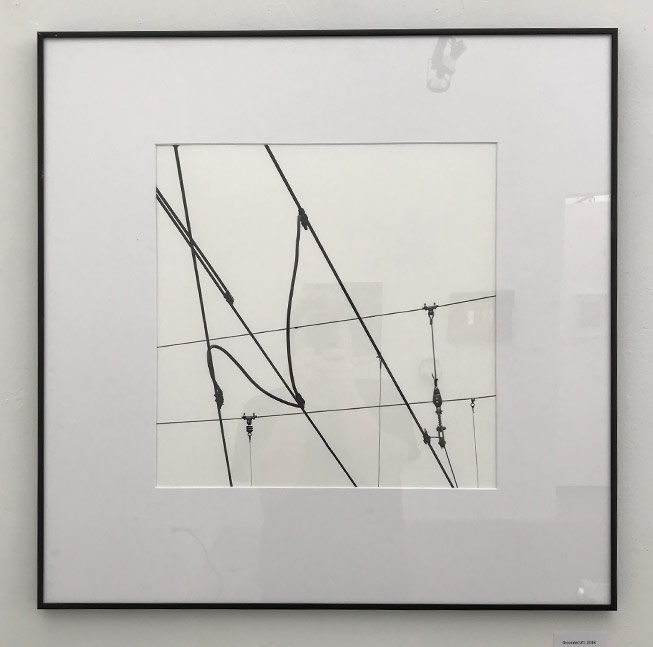

It also attracted a truly international group. I met people from Japan, Hungary, Peru, and more.

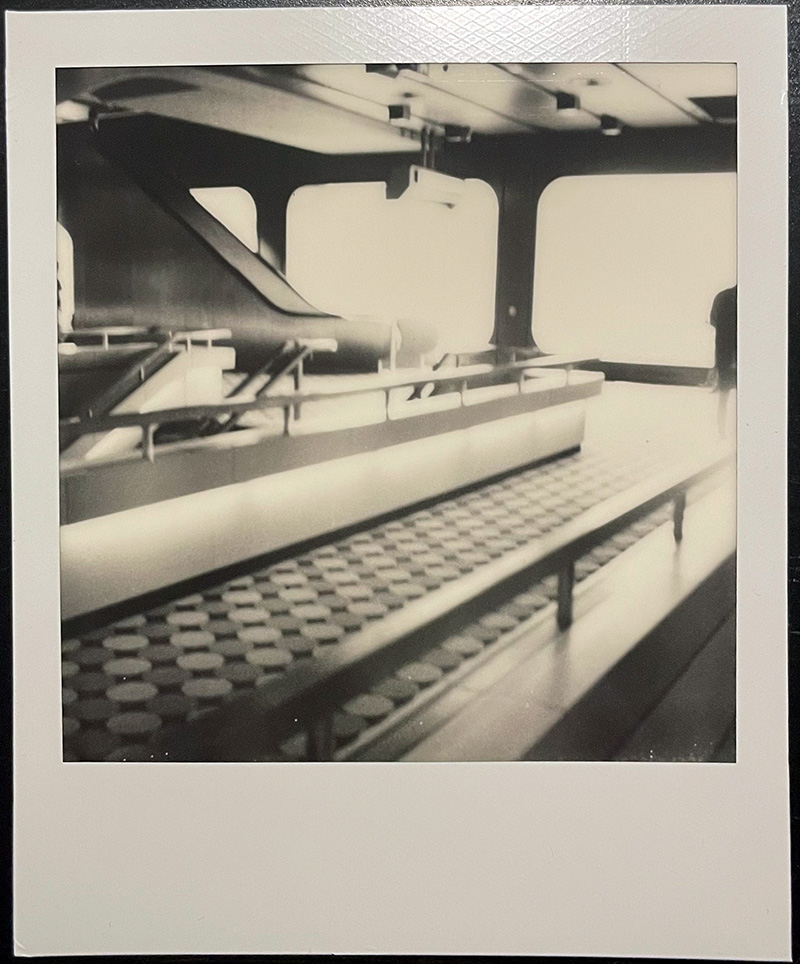

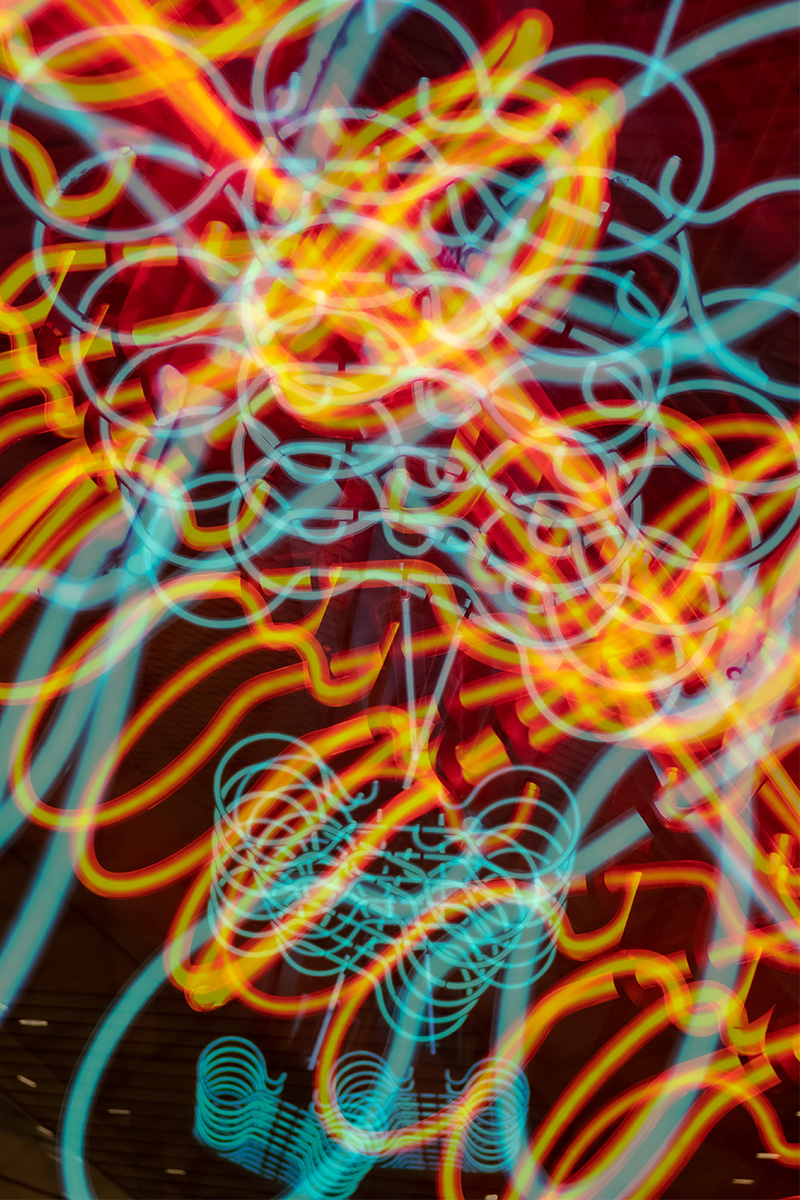

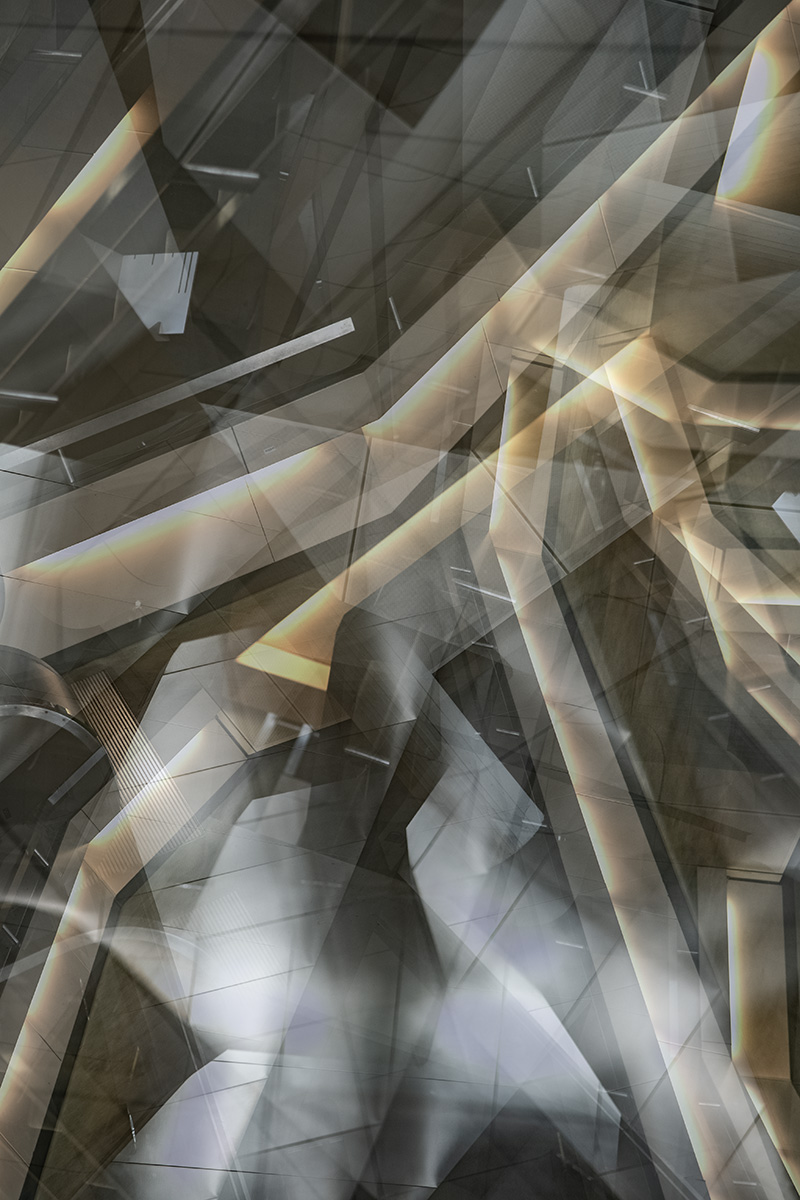

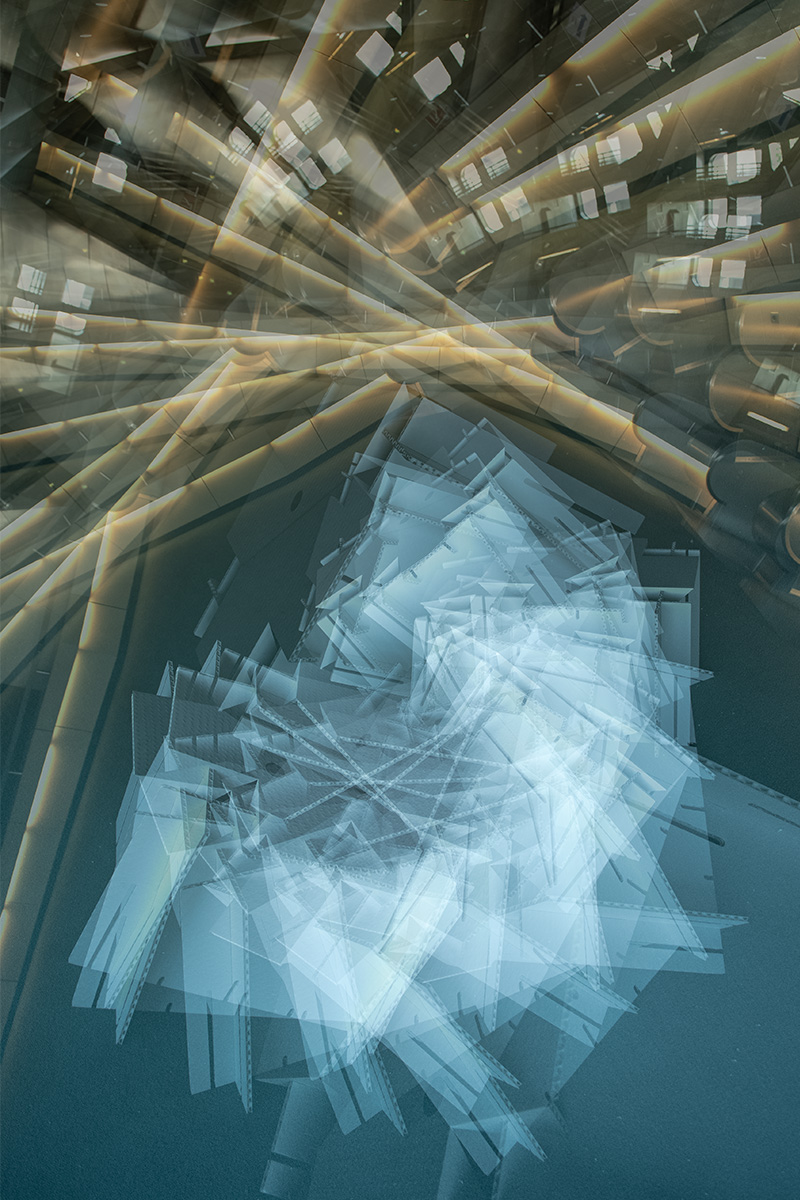

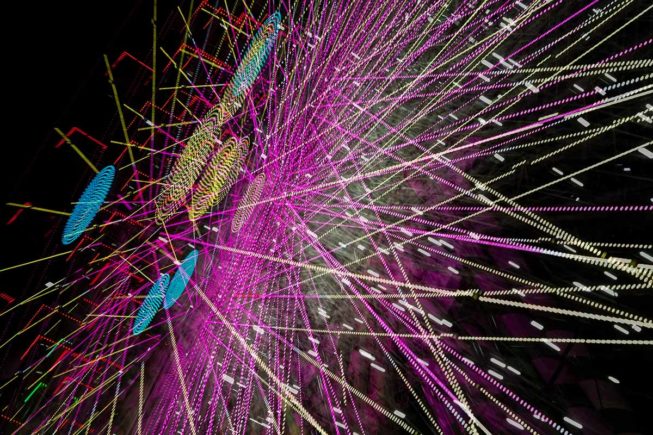

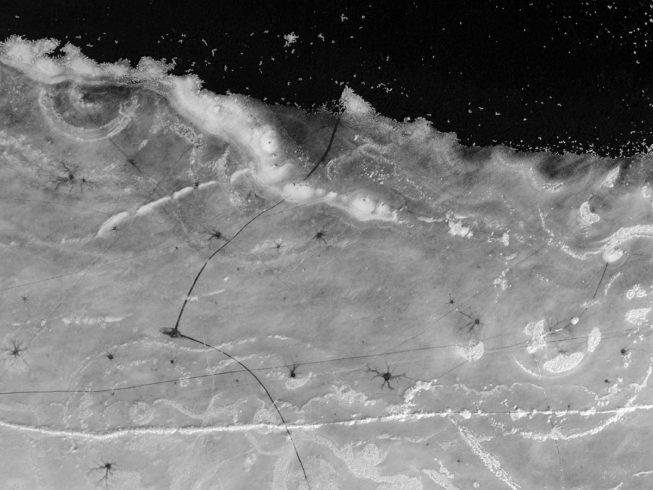

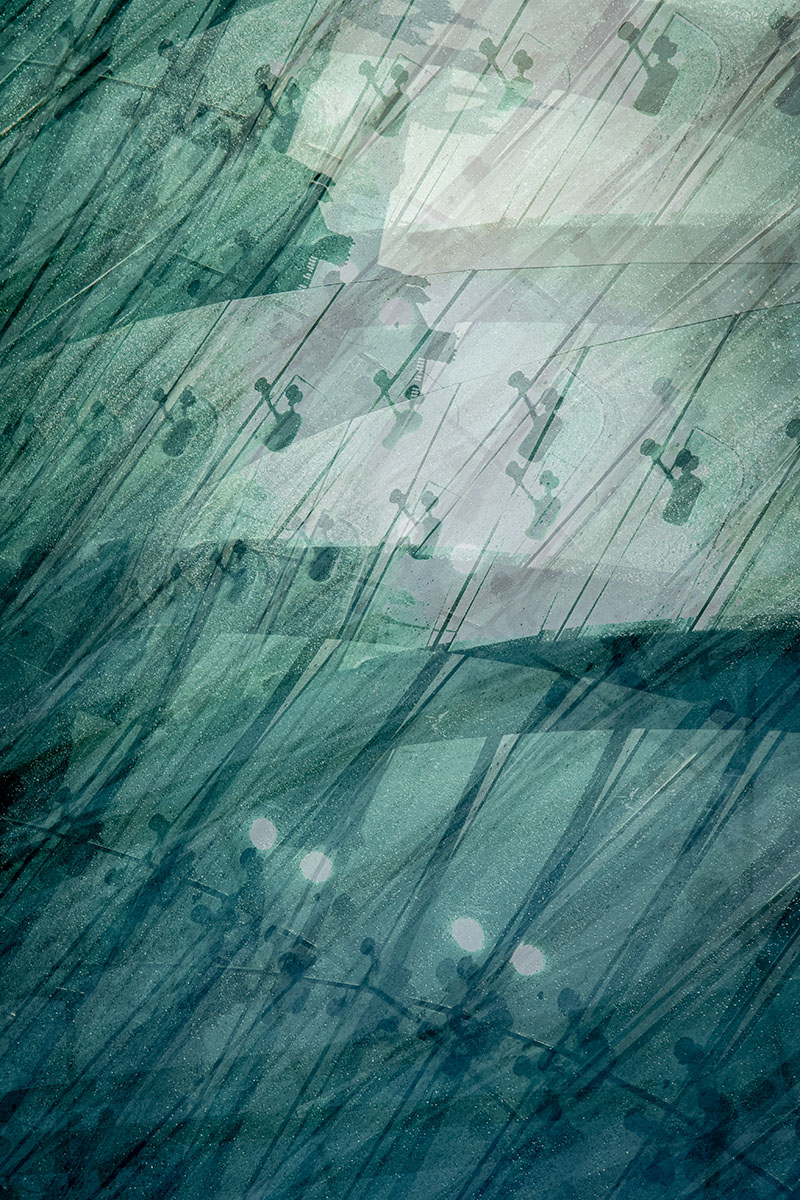

The festival curators chose my 10X images for one of the festival’s solo exhibits. These images are made in camera from 10 multiple exposures. I started the series on my cross-country drive back in 2021 and have continued to explore the different compositions and color combinations possible. It’s an eclectic group of images now.

I show these as large prints and it was a challenge to get them to Barcelona ready to hang. The prints for this show had been specially made in the U.S.A. and shipped just in time in a large mailing tube. I wanted them to hang flat at the festival so I decided to fly with them in a large flat portfolio case. That turned out to be a big mistake.

As the case was too large for the cabin, I checked it as special baggage. Unfortunately, airline baggage handling systems aren’t designed for flat items, even within size limits. The case missed my connecting flight and was subsequently lost in Frankfurt, beginning a three-day ordeal to locate and deliver the prints to Barcelona.

It was really stressful, but the festival organizers were helpful and understanding. We had some cheap prints made locally as placeholders and hung those until my prints were found. When they finally arrived, I had a whole crew of people helping to hang them quickly.

Apart from all that, it was a fantastic experience. I made some great connections that turned out to be useful the next month (see below).

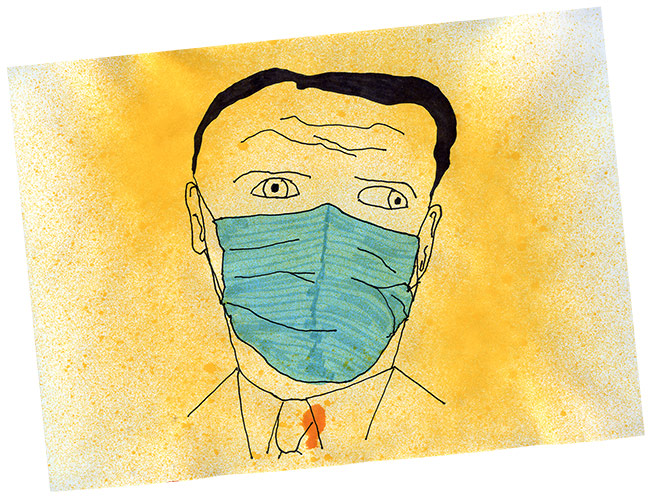

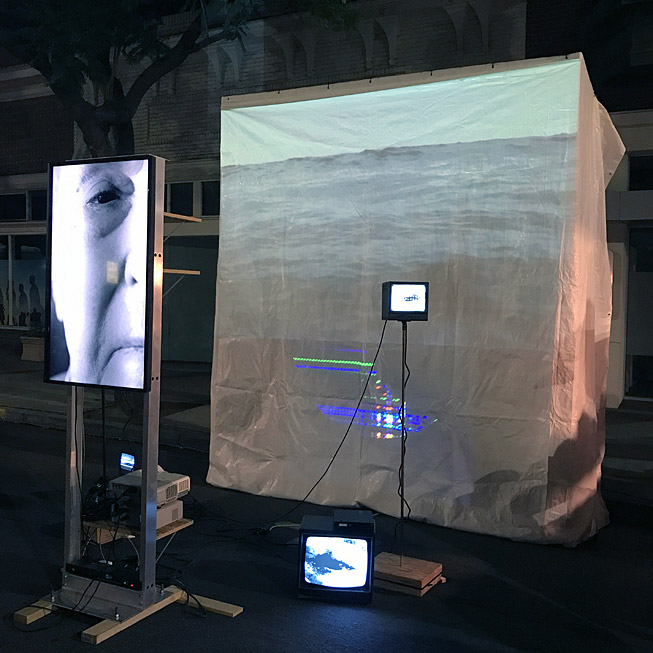

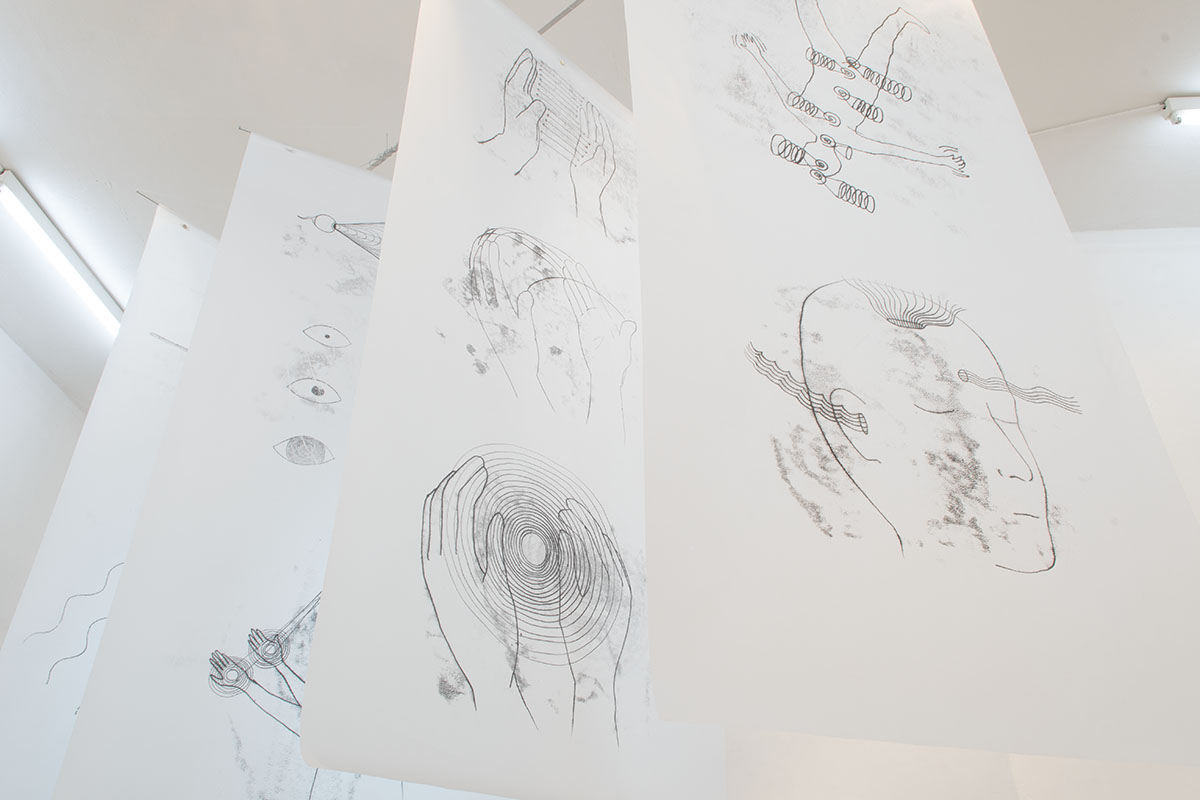

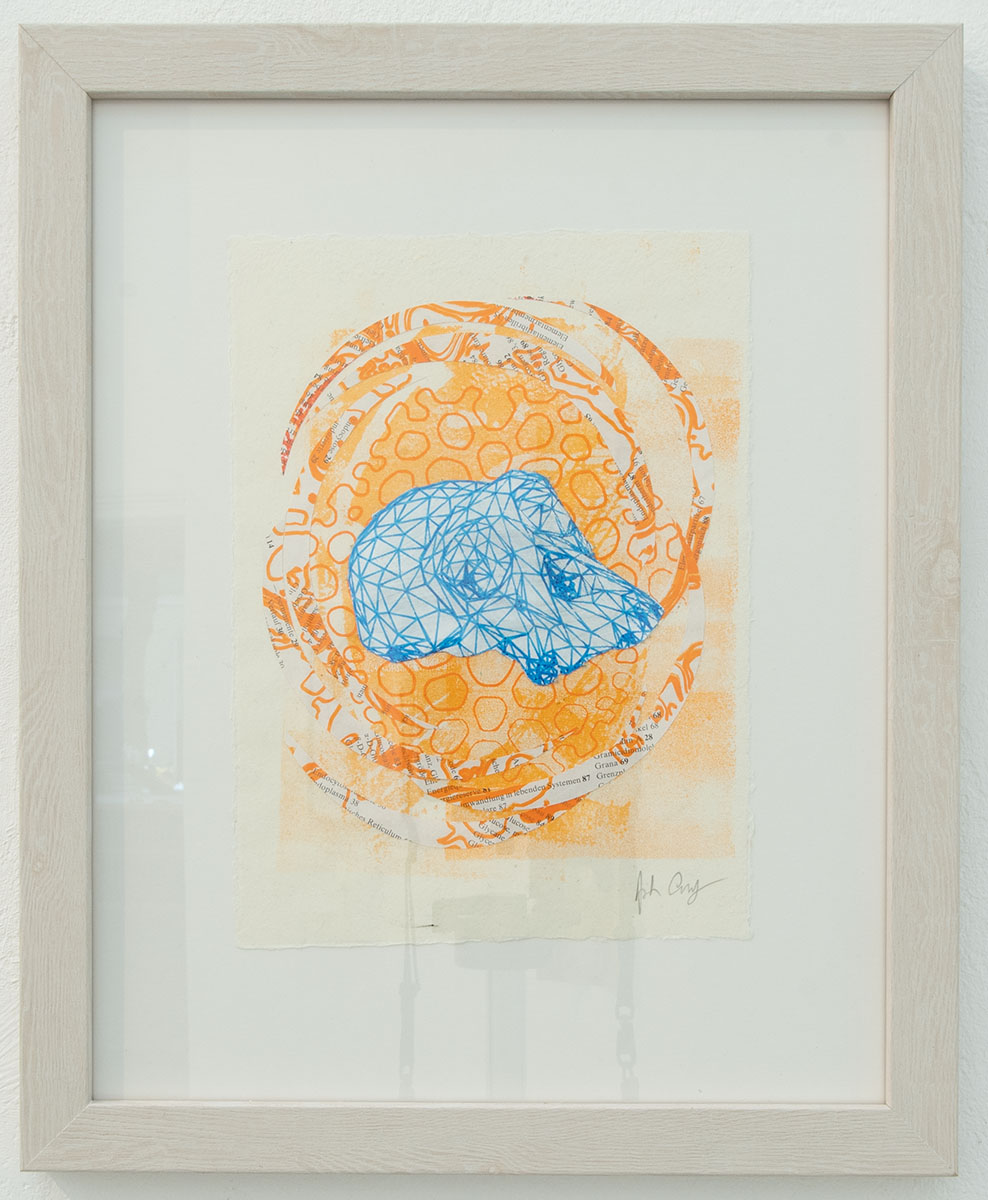

Phasenpunkte

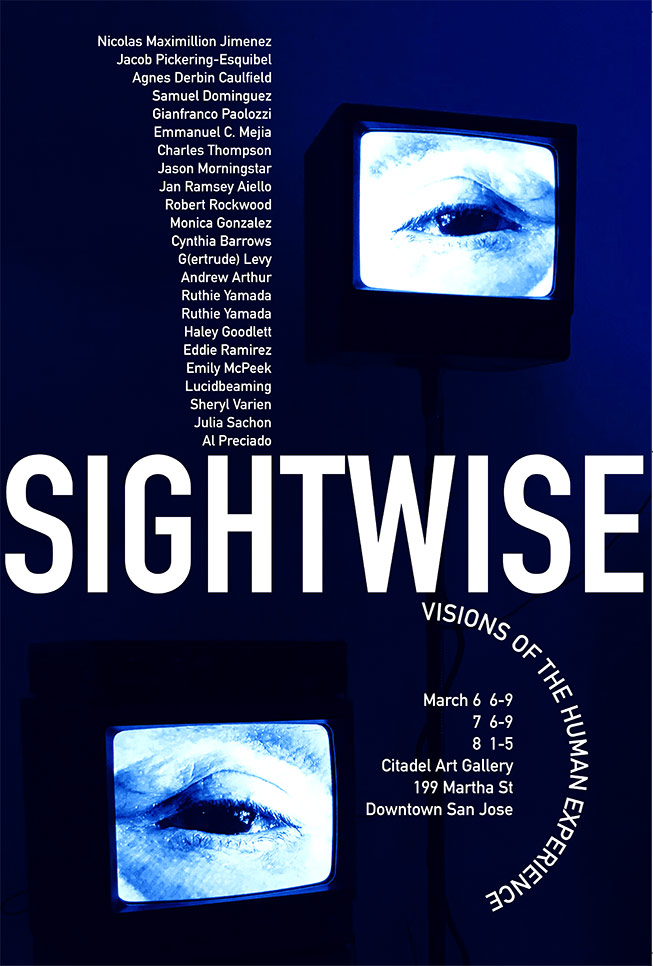

This was a last-minute art show that I organized and curated at HilbertRaum in Berlin. I got the offer just a few days after returning from Barcelona.

The news came on August 7, and the show was organized, hung, and opened by September 7. That’s very fast for all those logistics, especially with international participants. It was a minor miracle that it happened at all. I reached out to 10 people to be in the show, mostly through direct messages on Instagram and Telegram. I knew some of them personally and found the rest through contacts from the recent Experimental Photography Festival.

Eight artists ended up in the show: me, Samantha Tiussi, Hilde Maassen, Sofia Nercasseau, Gábor Ugray, Cecilia Pez, Hajnal Szolga, and Daniel Kannenberg.

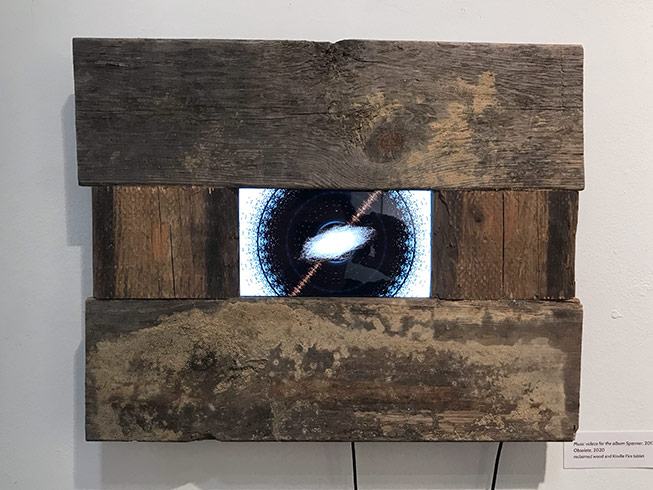

On opening night, I performed music with the new synths I built, and Samantha Tiussi performed with glass clothing fitted with microphones.

Here is the promotional website I made for it.

Overall, it was a huge success. I was exhausted afterward, but how often will I get the chance to do something like that? Life is short.

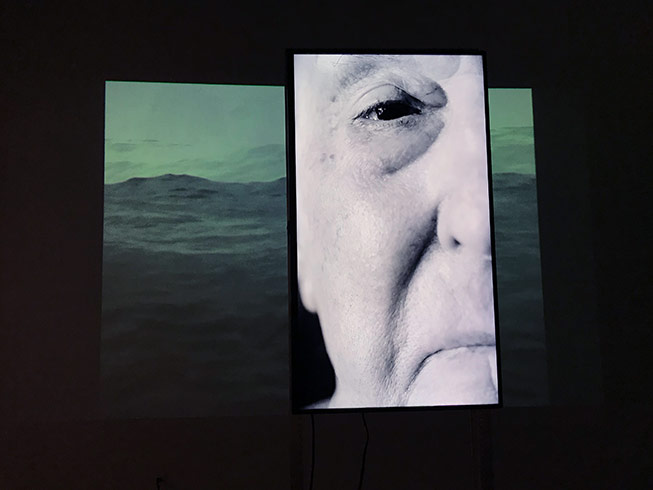

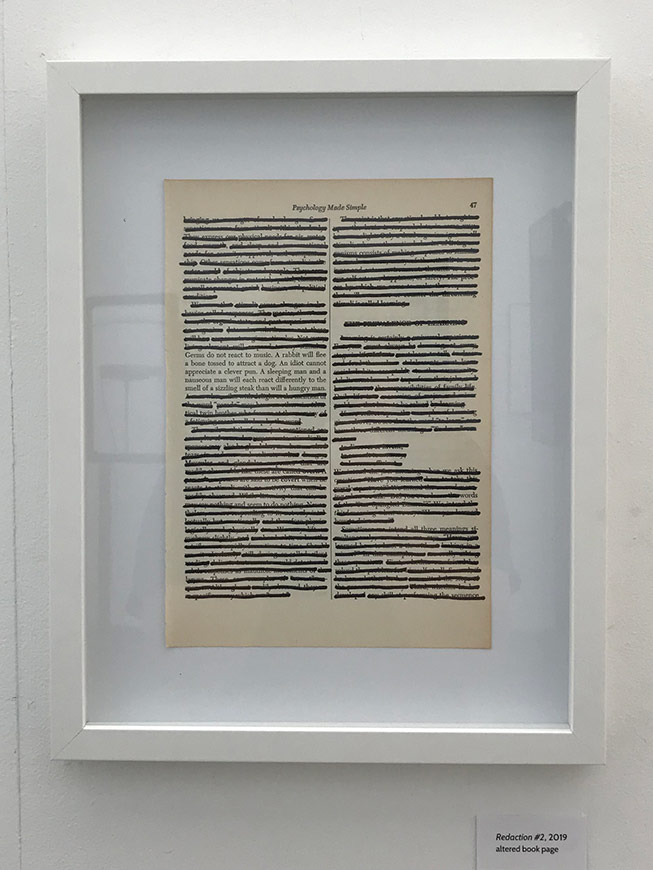

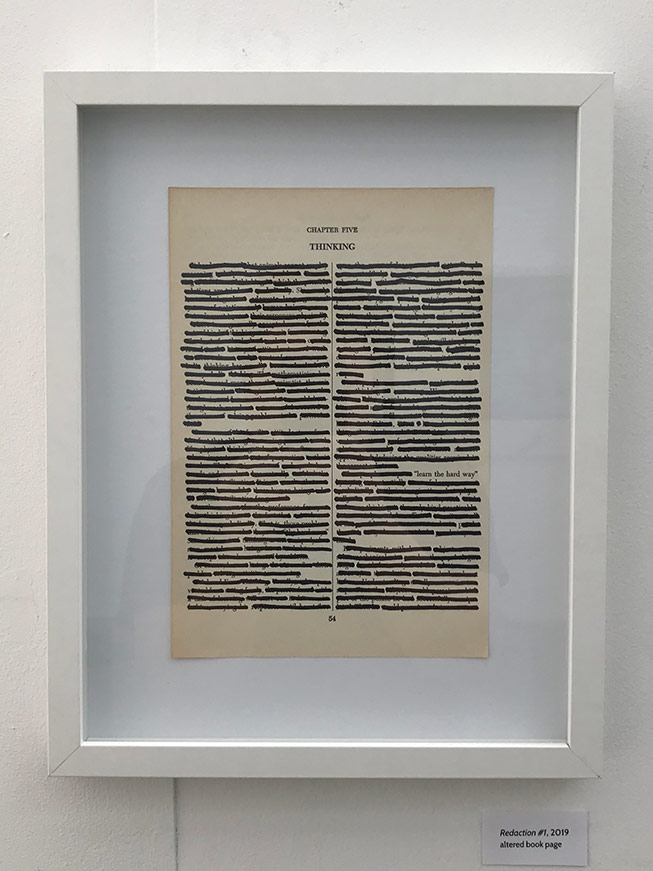

Phasenpunkte (“phase points”) refers to the points at which materials change phase, like water turning to steam. It is used as a metaphor for the transition points between organic human experience and virtual, synthetic spaces. The human phase points are our feelings, thoughts, and imagination. Our consciousness is the membrane between the virtual and the real. This show is an aesthetic response to that idea. It’s not about technology, it’s about being human.

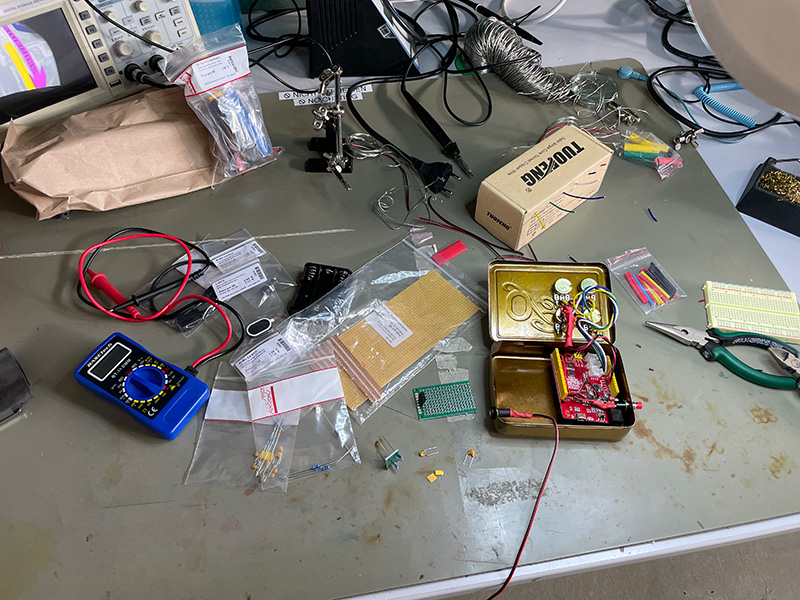

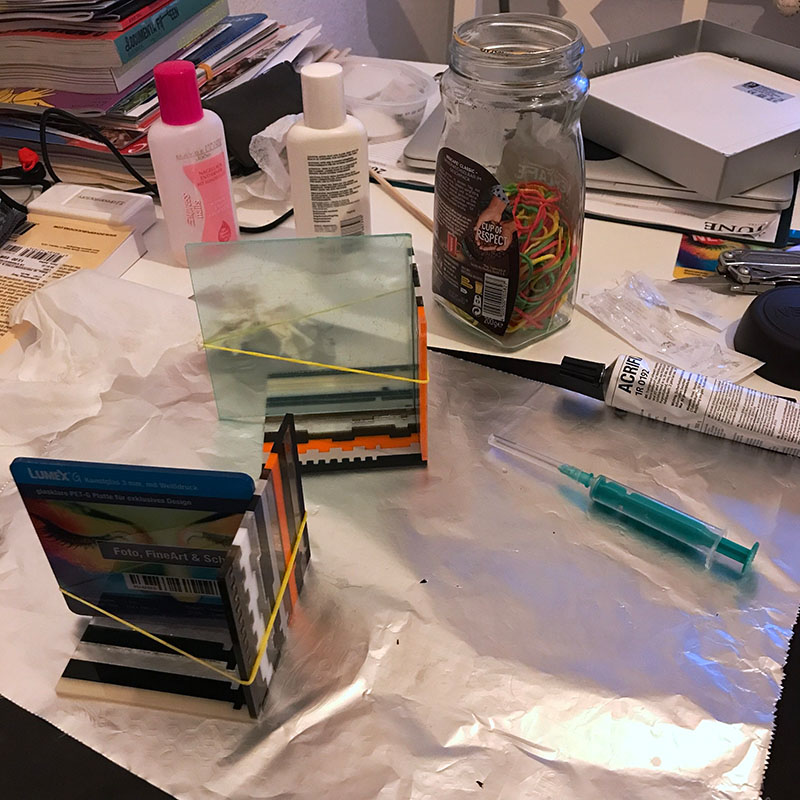

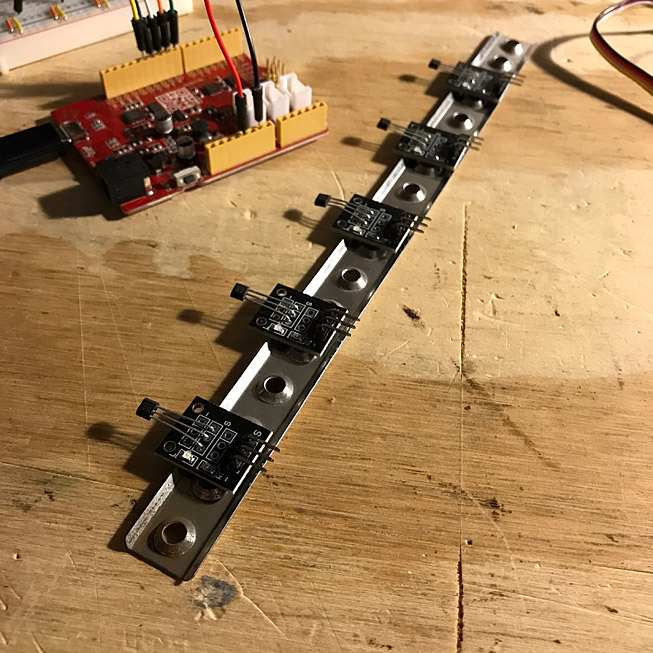

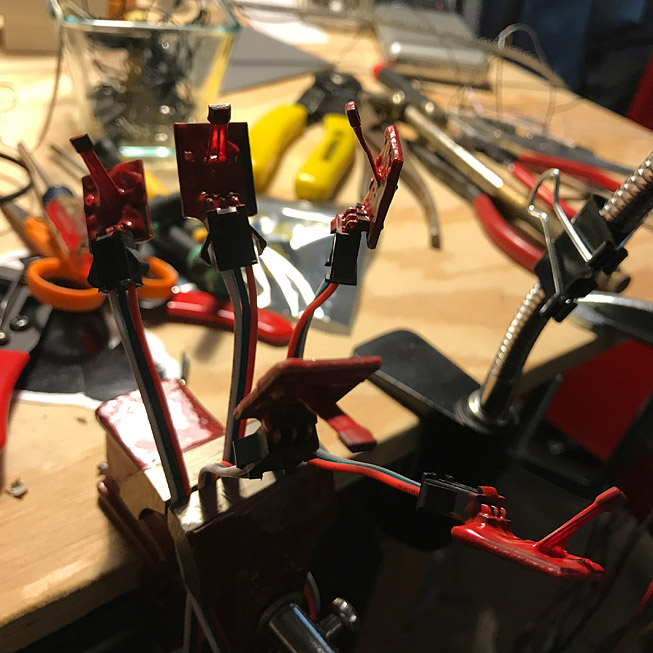

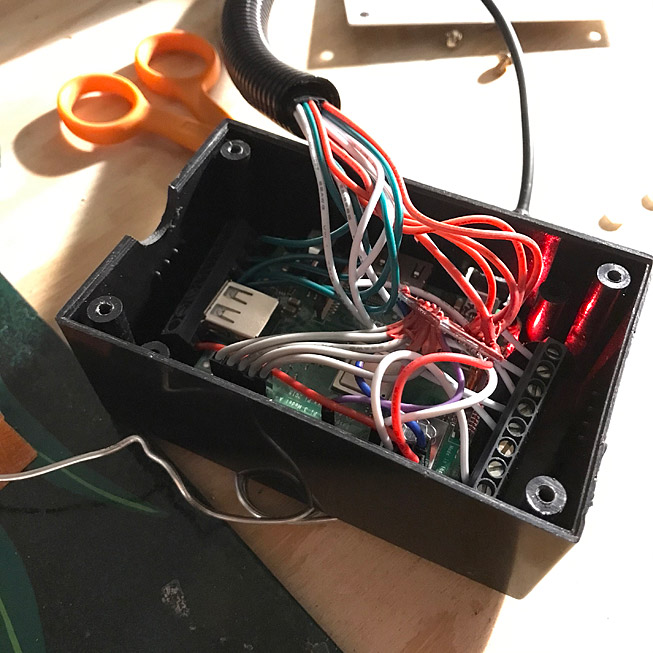

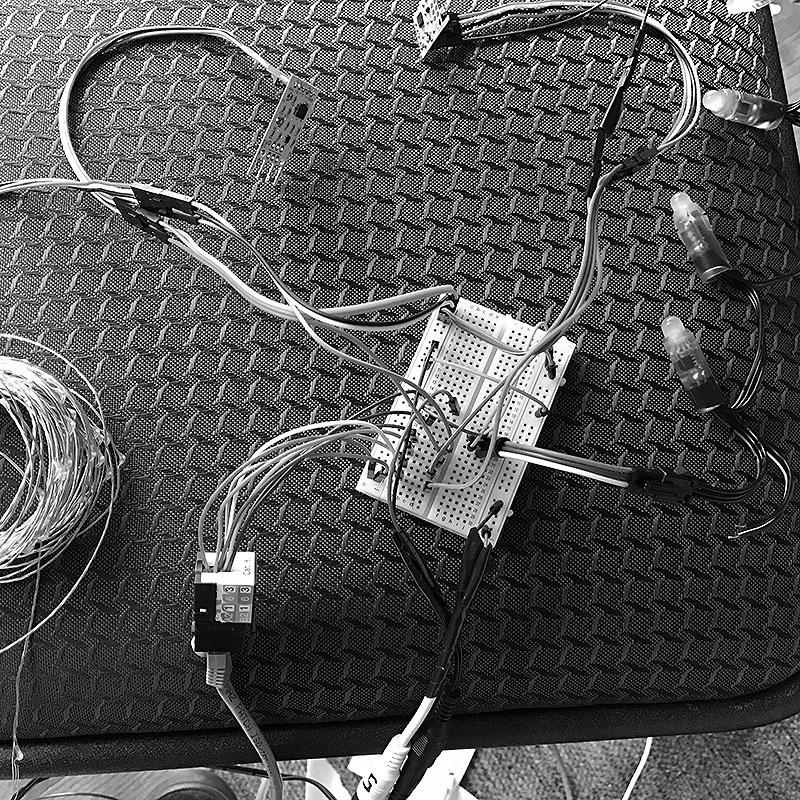

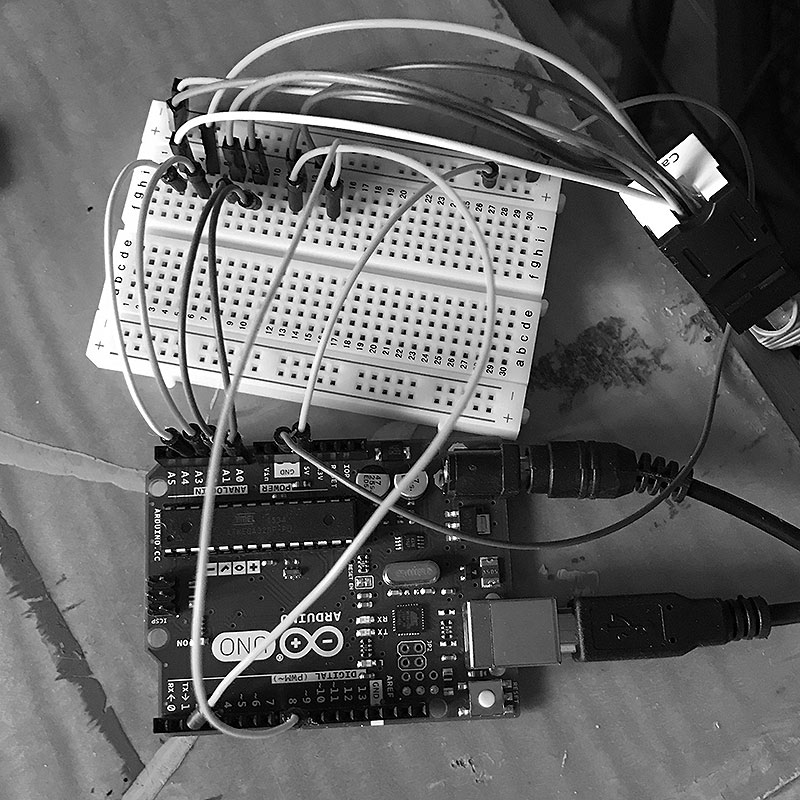

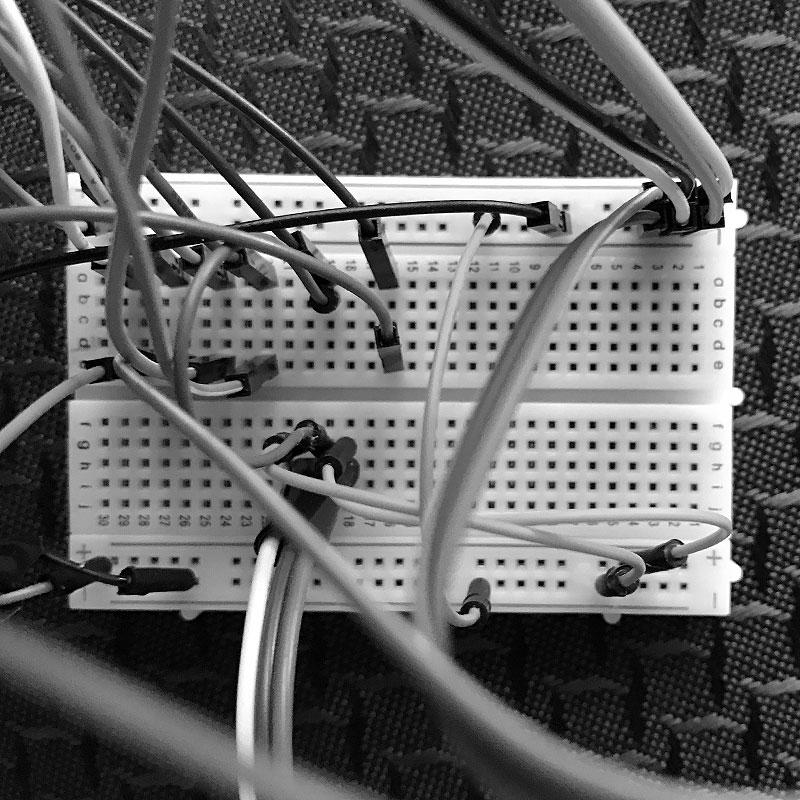

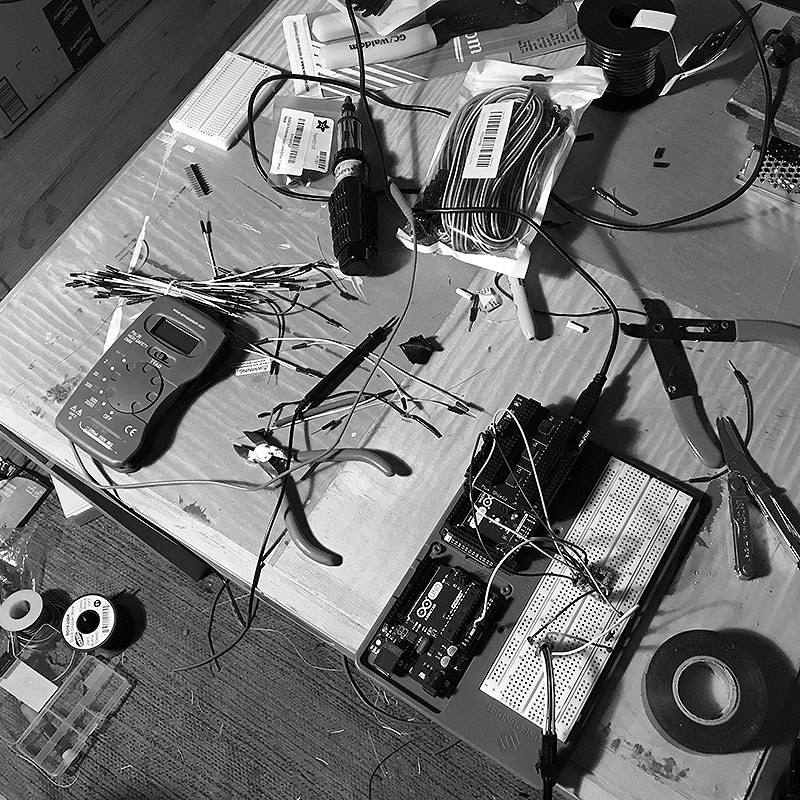

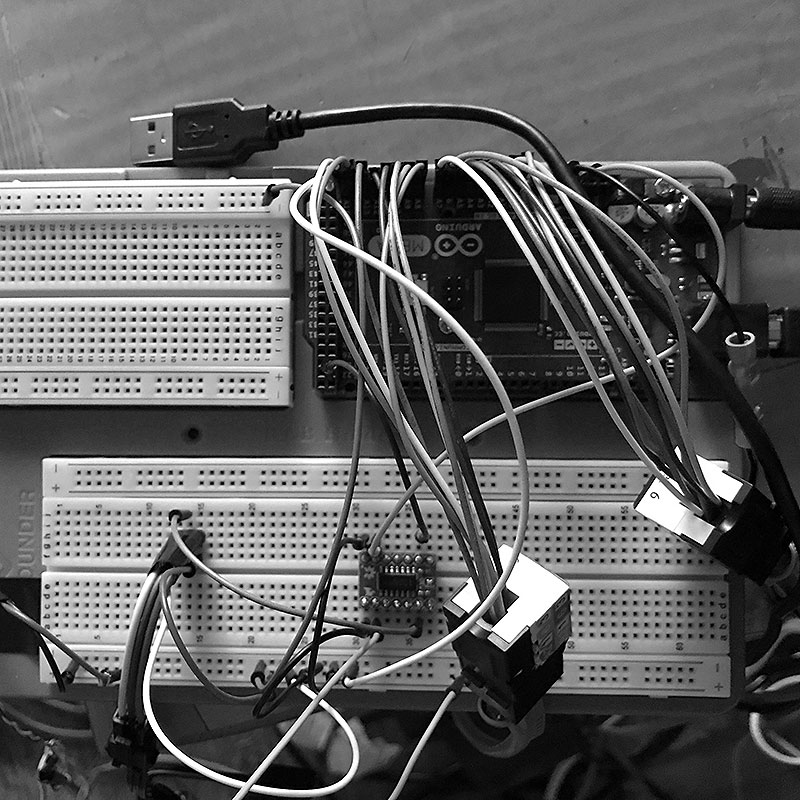

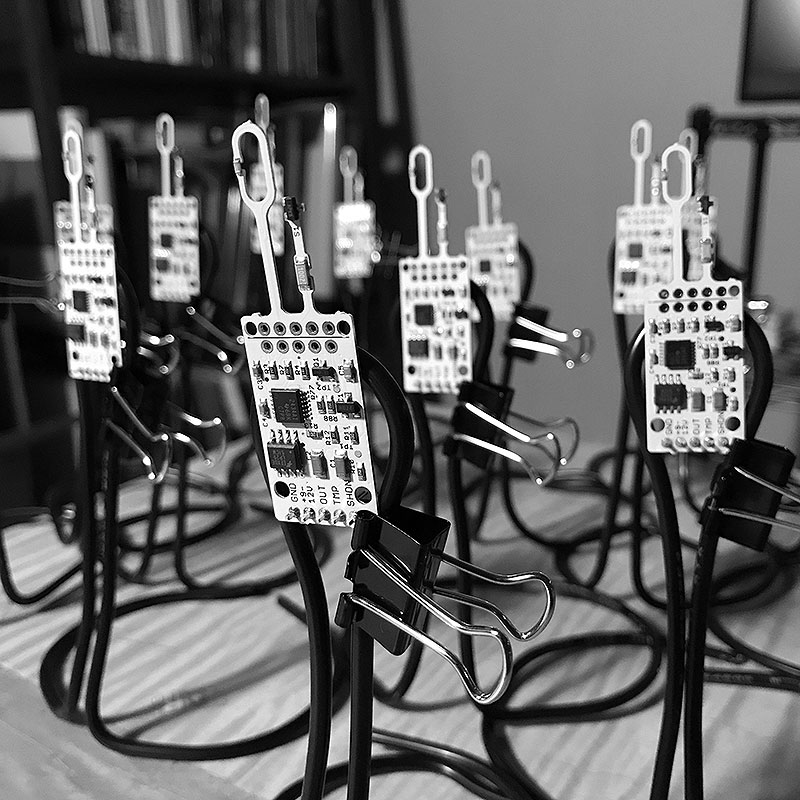

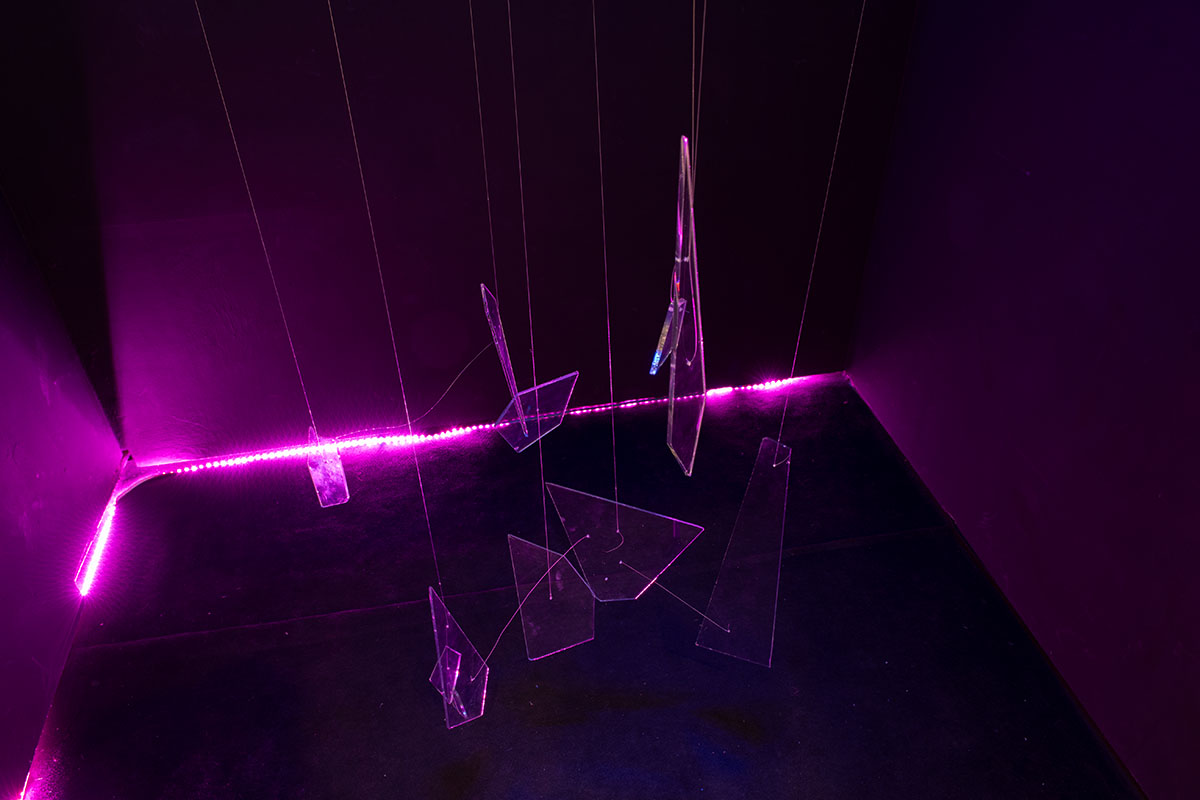

Programming glass robots

One of the artists in Phasenpunkte, Samantha Tiussi, asked for some help programming the controllers for her glass sculptures. She constructed stepper motor assemblies that hung from the ceiling and raised and lowered pieces of glass according to her instructions. The glass was arranged as human figures, and the movements conveyed emotions and a kind of slow dancing.

She used Arduino boards to send signals to the controllers and needed help with the code that ran on the boards. Although she has some technical background, she relied on ChatGPT to generate most of the code. While this initially worked, it proved difficult to modify when she wanted to make custom changes.

I agreed to help, and we had coding sessions at her studio in Berlin. Even though tools like ChatGPT can generate functioning code, that code can look like gibberish to a programmer trying to read it. I ended up rewriting large chunks of it to make the customizations she needed.

A big reward was getting to see her final show performed at the Acker Stadt Palast in central Berlin. It was a touching and interesting show. I was proud to use my coding skills for something like that.

These are all the changes I made to fix the code that ChatGPT had generated. It was a lot of work.

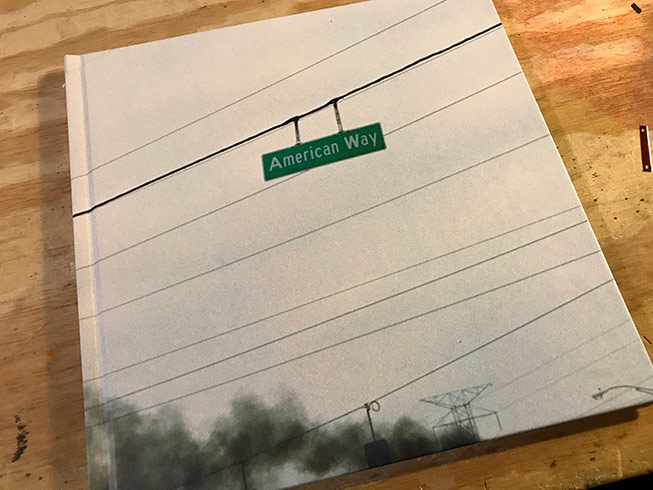

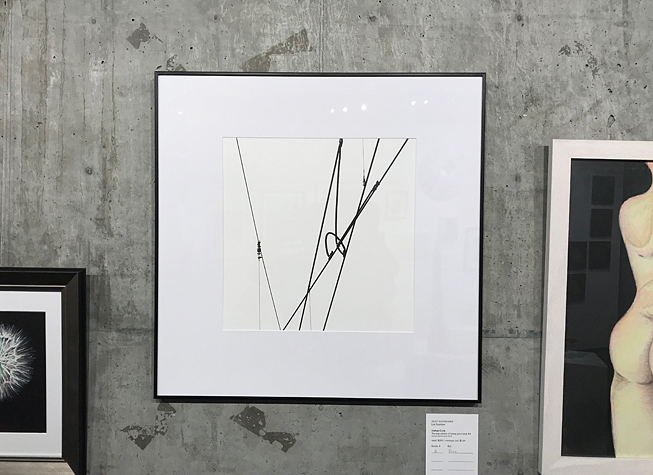

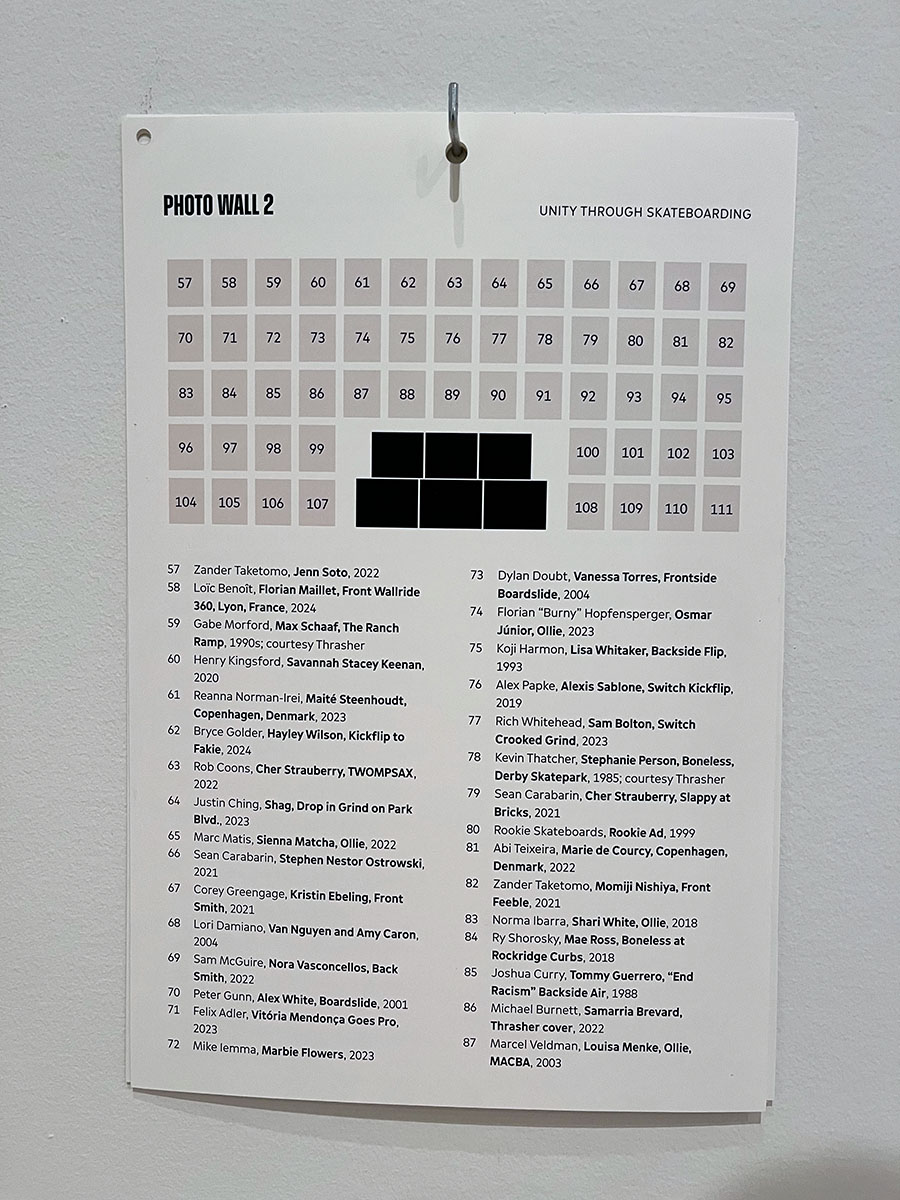

Skateboarding at SFMOMA

After all these years, what got me into the San Francisco Museum of Modern Art is a skateboarding photo I took when I was 17.

Jeffrey Chung contacted me about an upcoming show called Unity through Skateboarding. Tommy Guerrero had mentioned my photo of him riding a board with ‘End Racism’ written on the bottom. That photo has resurfaced online over the years and draws attention whenever Tommy shares it on social media.

I took that image at Bryce Kanights’ ramp in his San Francisco warehouse, on a trip there with my friend Tony Henry. I was only 17 at the time, using a camera I had just bought to replace one that had been stolen. I shot a lot of photos back then and worked to pay for film, equipment, and road trips. I was convinced I would have a career as a skateboard photographer.

Back then, I didn’t get much support for that kind of photography. Most adults thought it was frivolous, and my friends had no idea how much all of it cost. I did get published, though, and made a little money. Most importantly, I got an amazing life out of it that nobody in my high school could compare with.

Now, 35 years later, that photo is hanging at SFMOMA. I haven’t even seen it yet, as I’ve been in Berlin during the organization and opening. It’s funny how things work out.

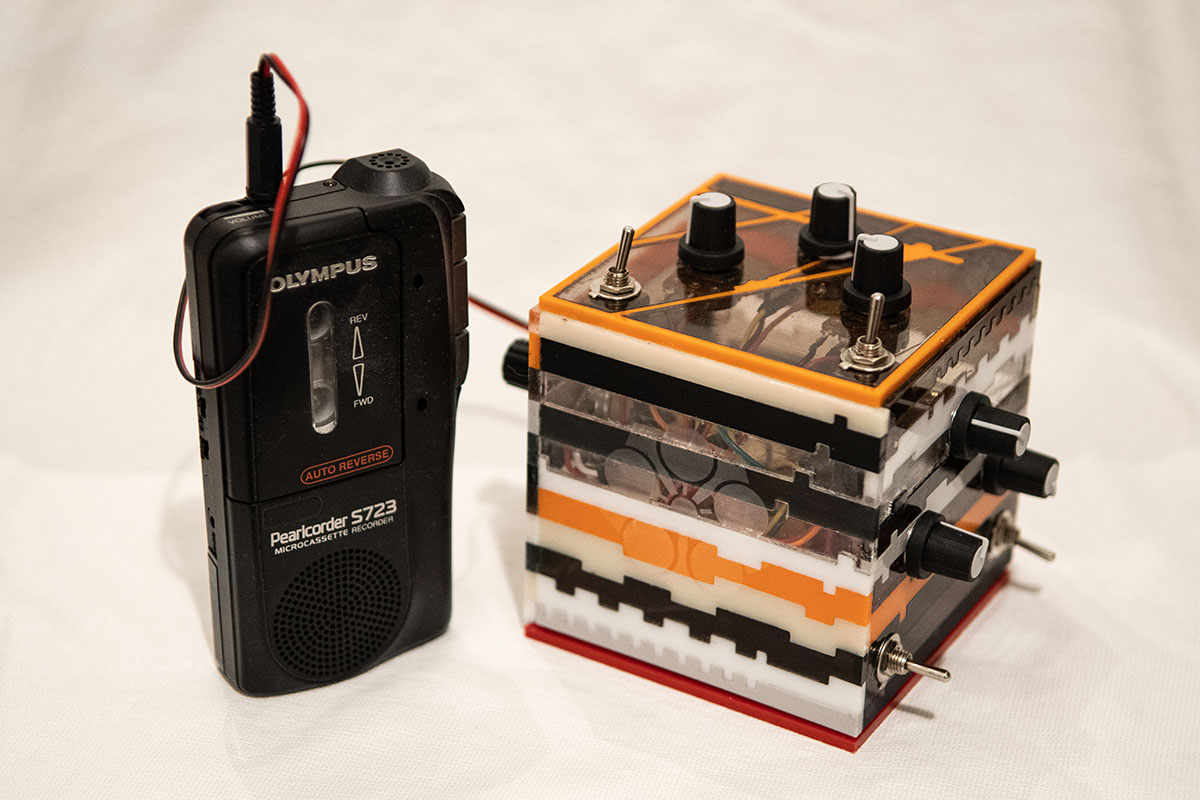

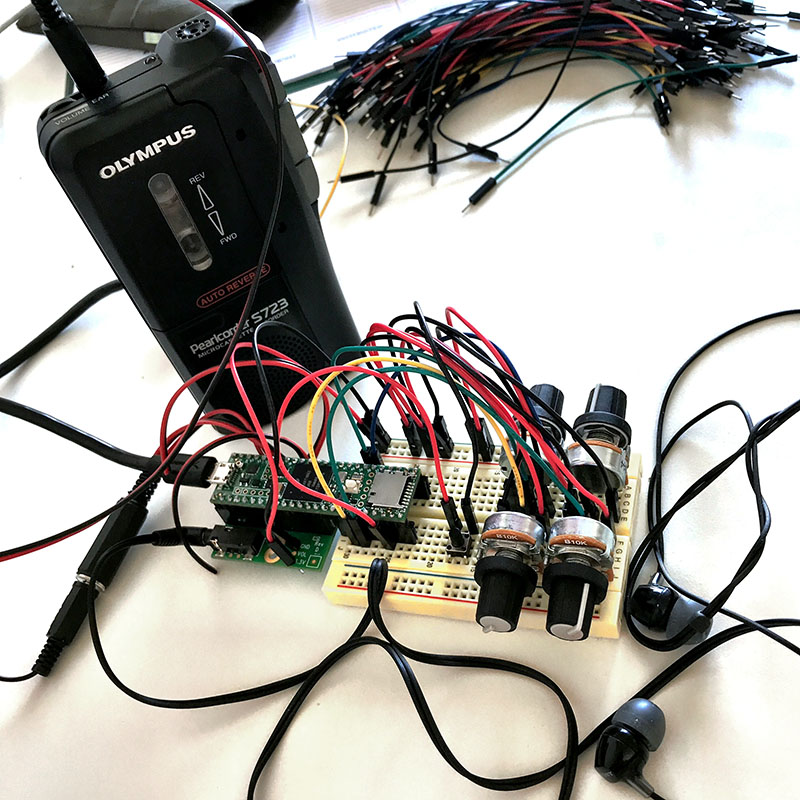

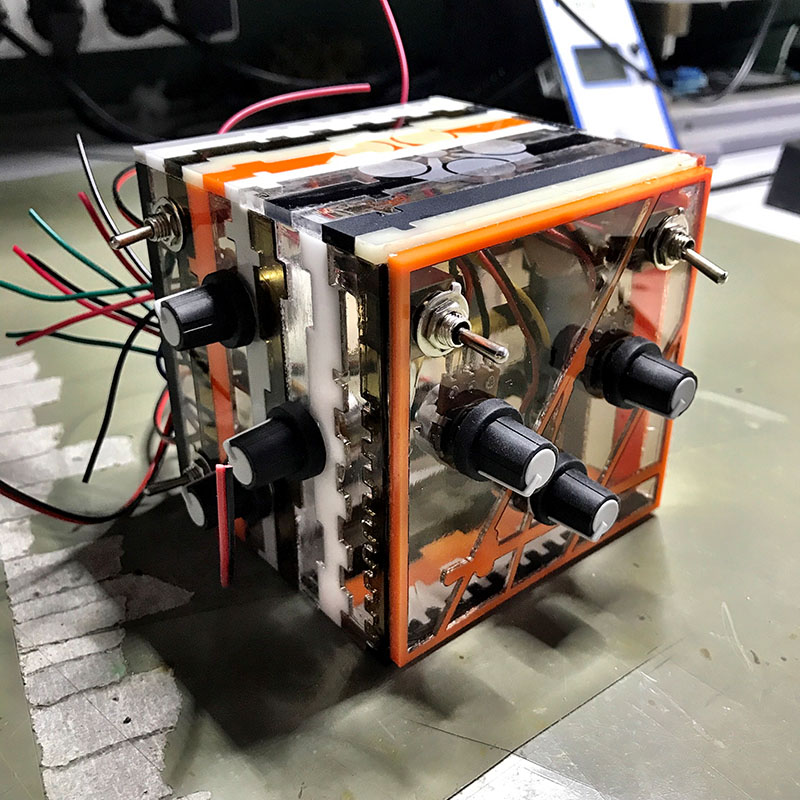

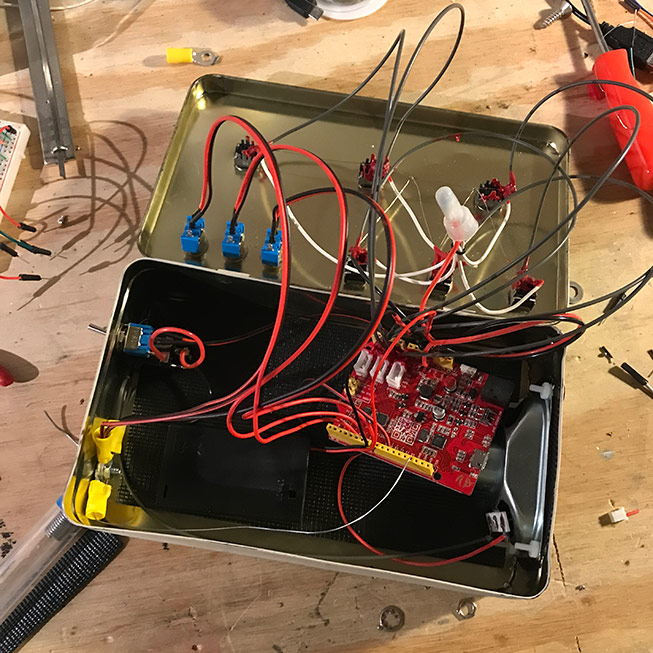

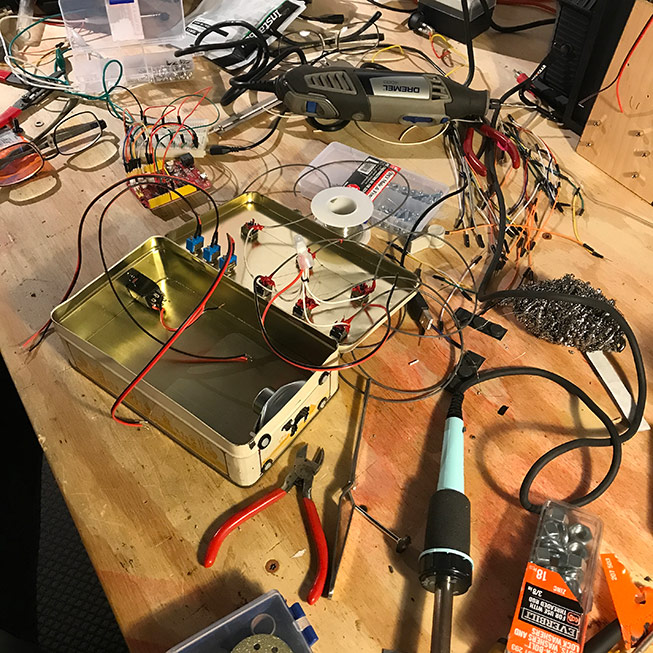

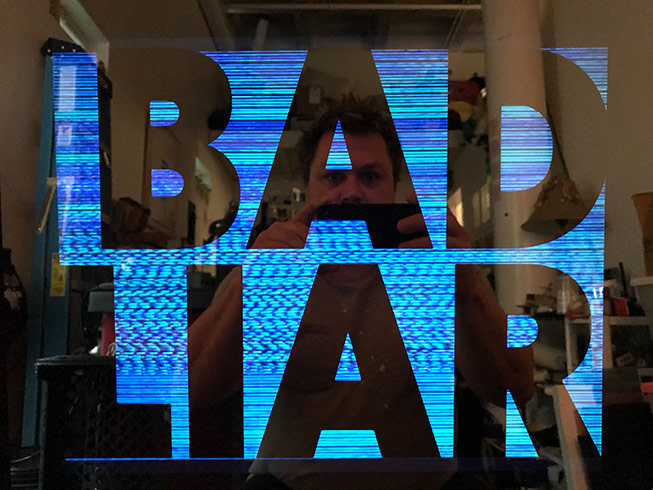

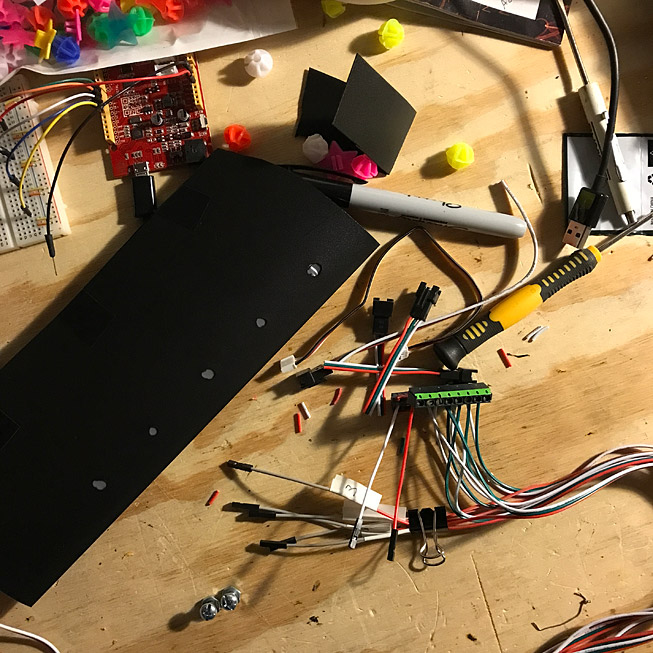

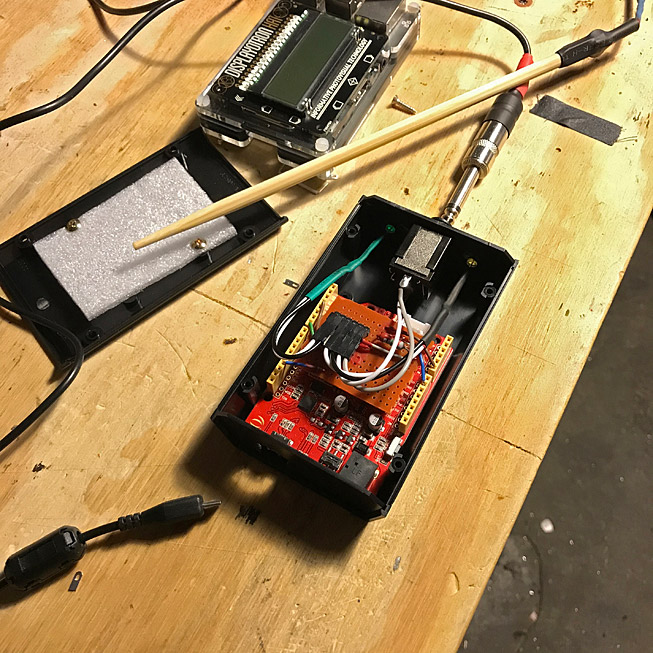

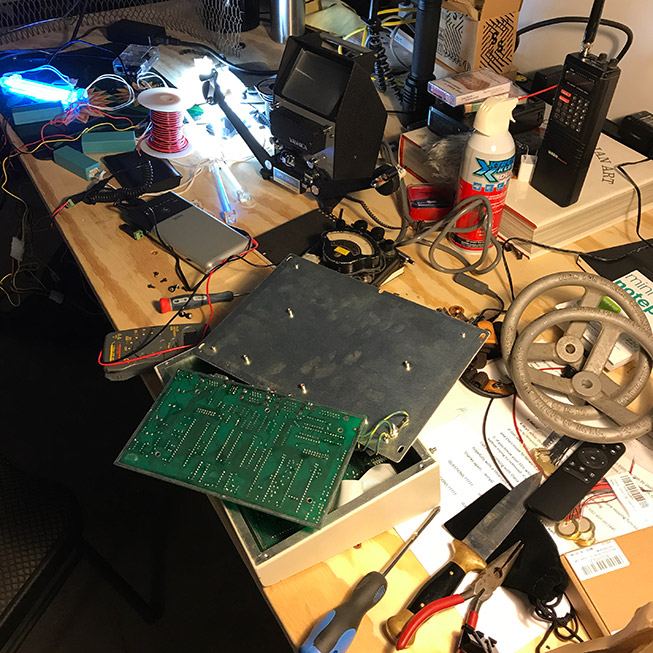

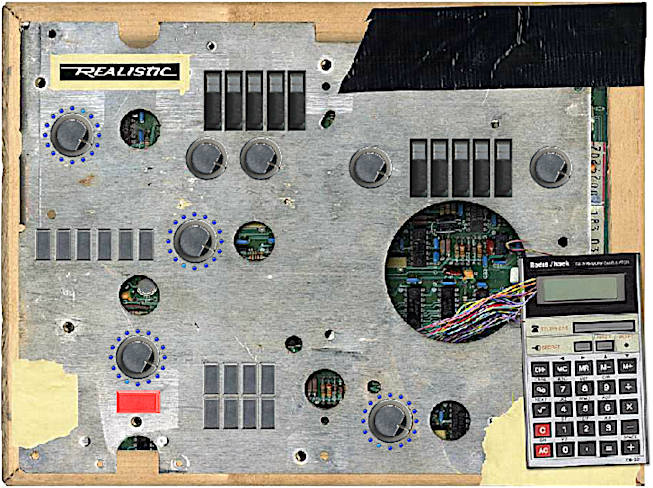

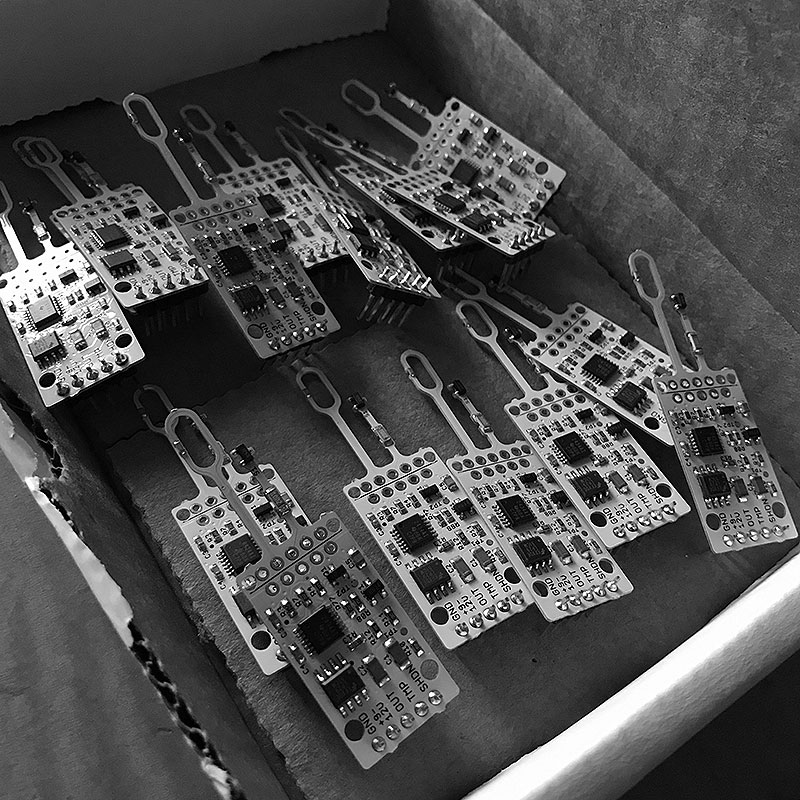

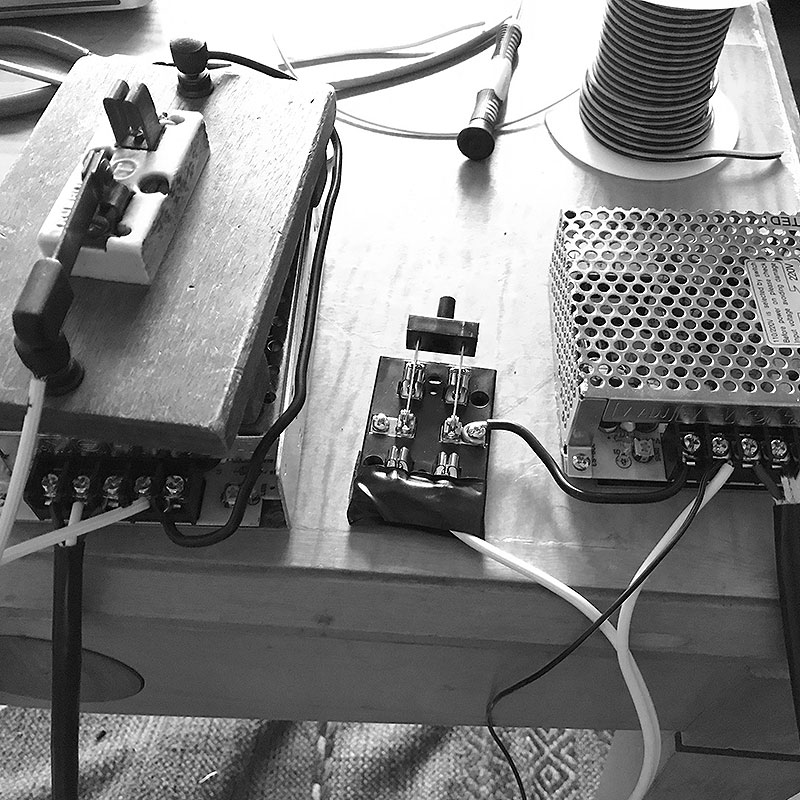

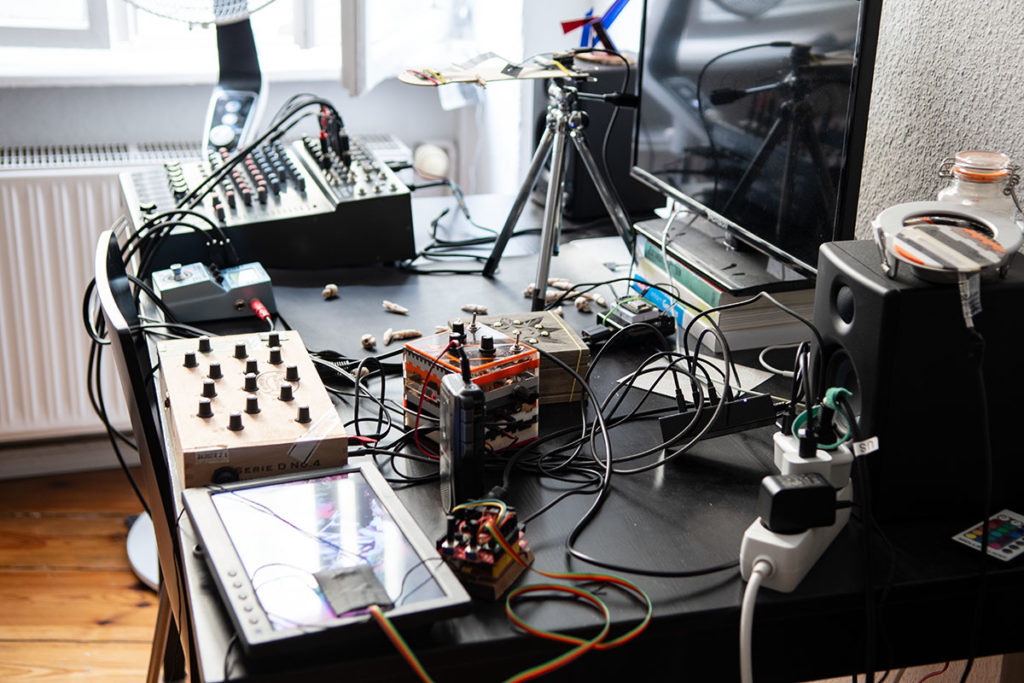

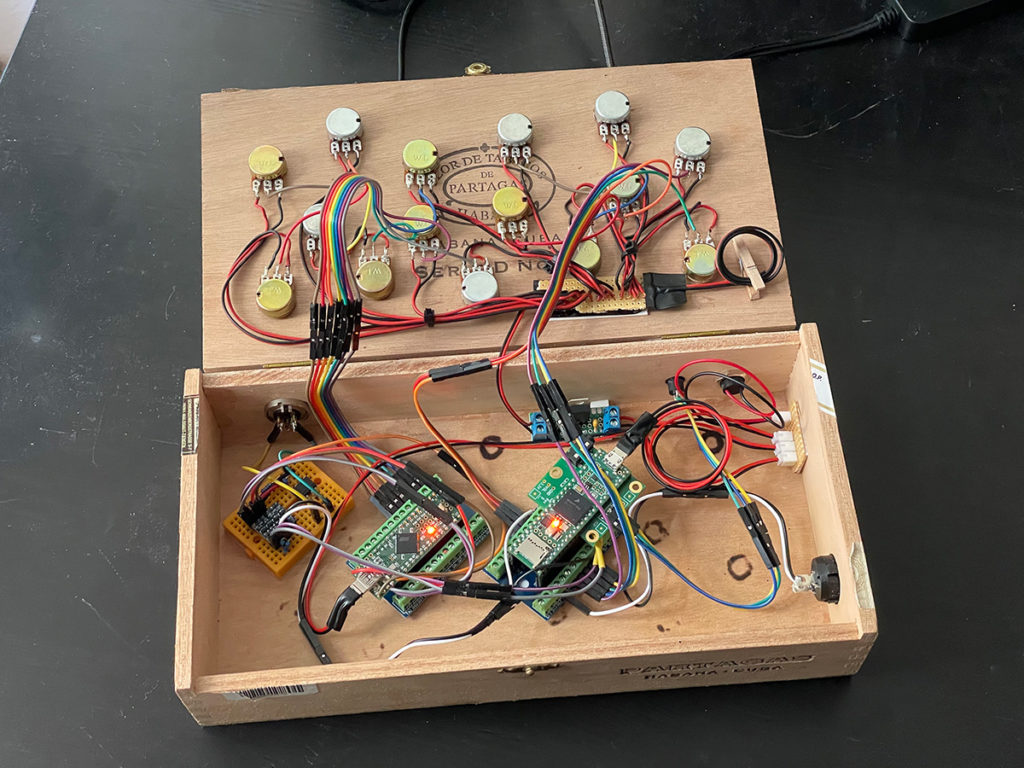

Synthesizer brain transplants

I rewrote the sound engines for some recent synths I built. They sound pretty cool now.

Berghainbox

Starbox

Habanos

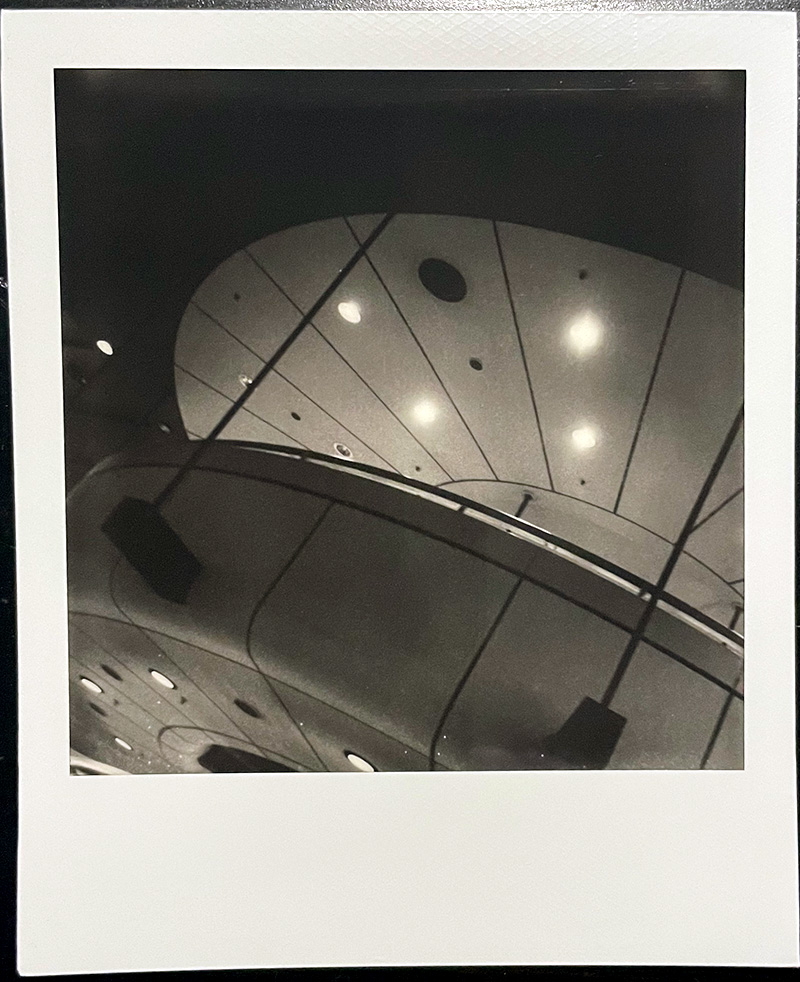

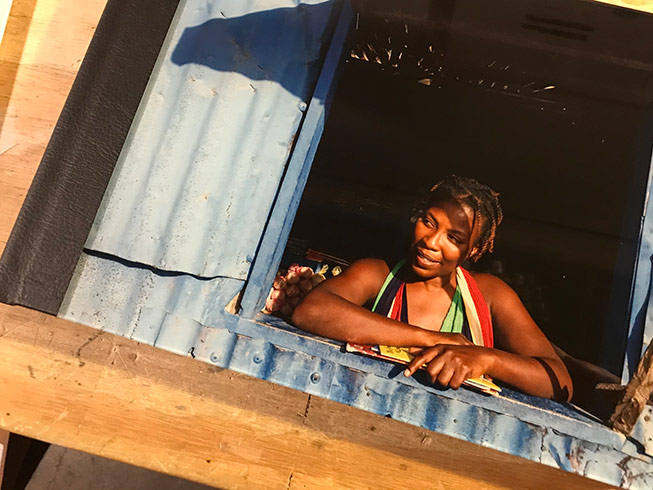

Modern Istanbul

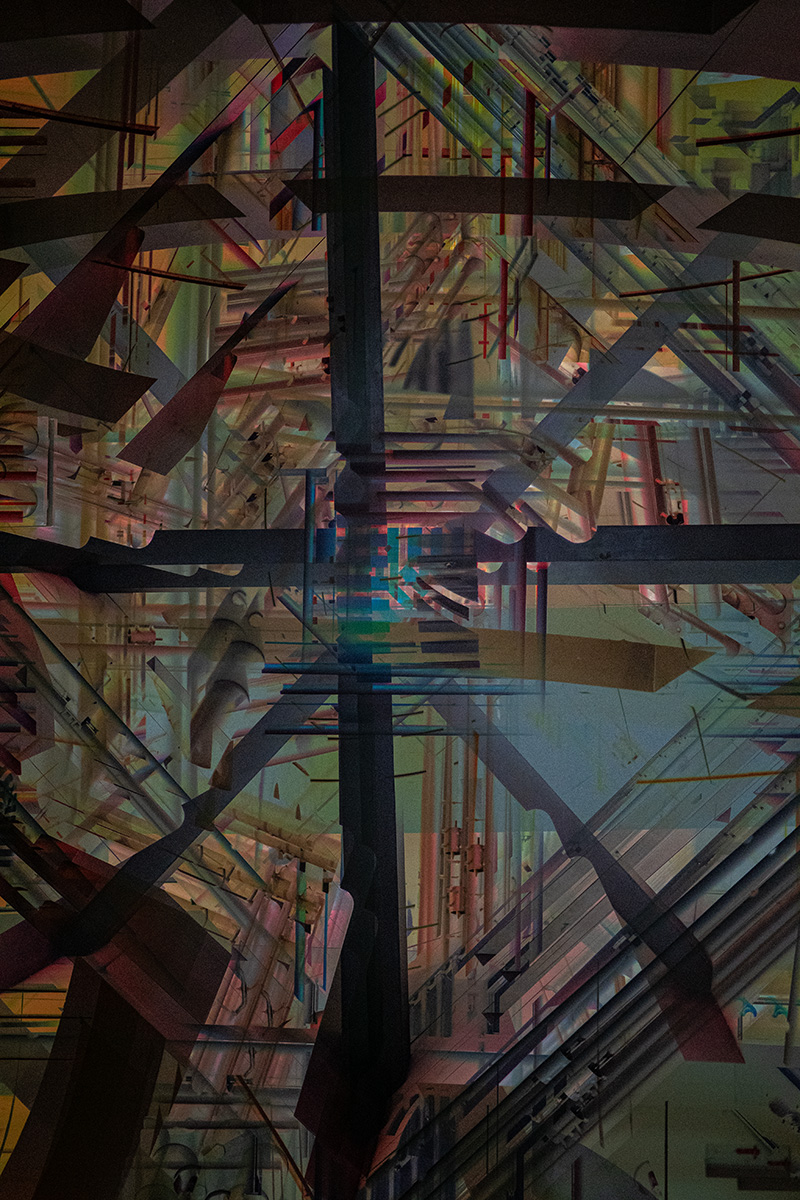

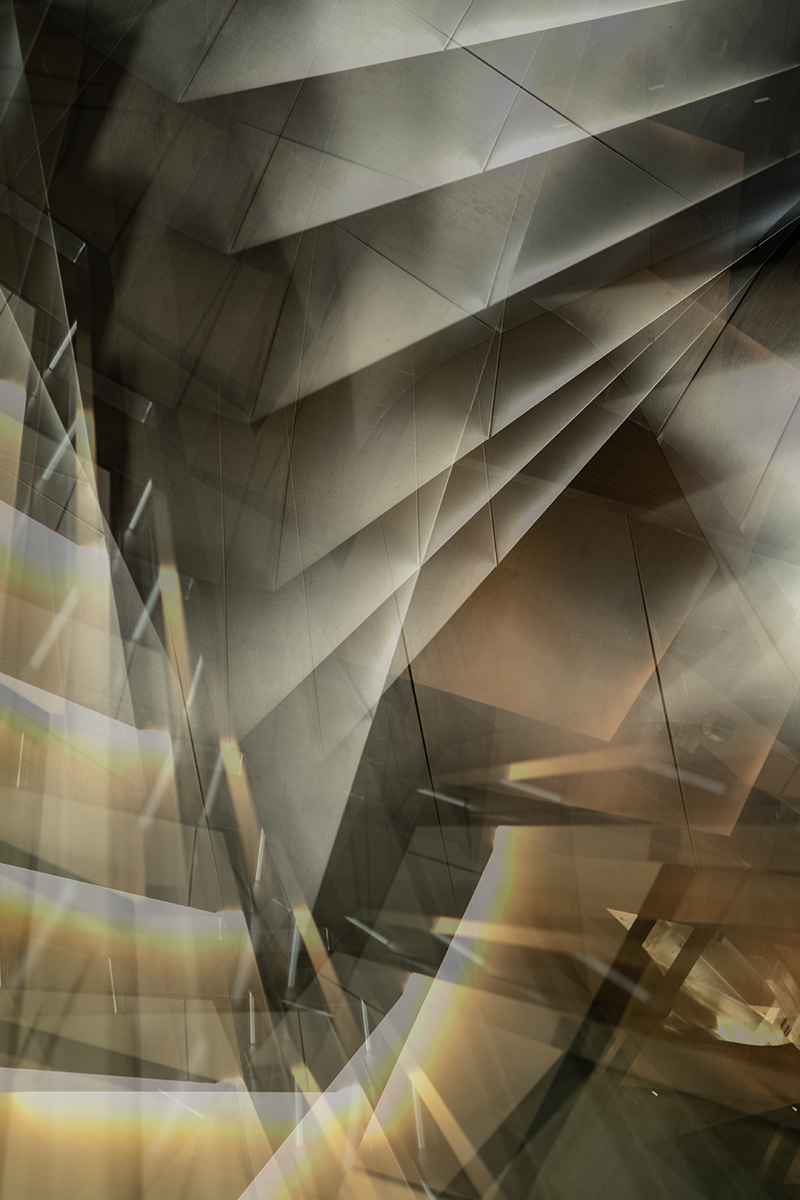

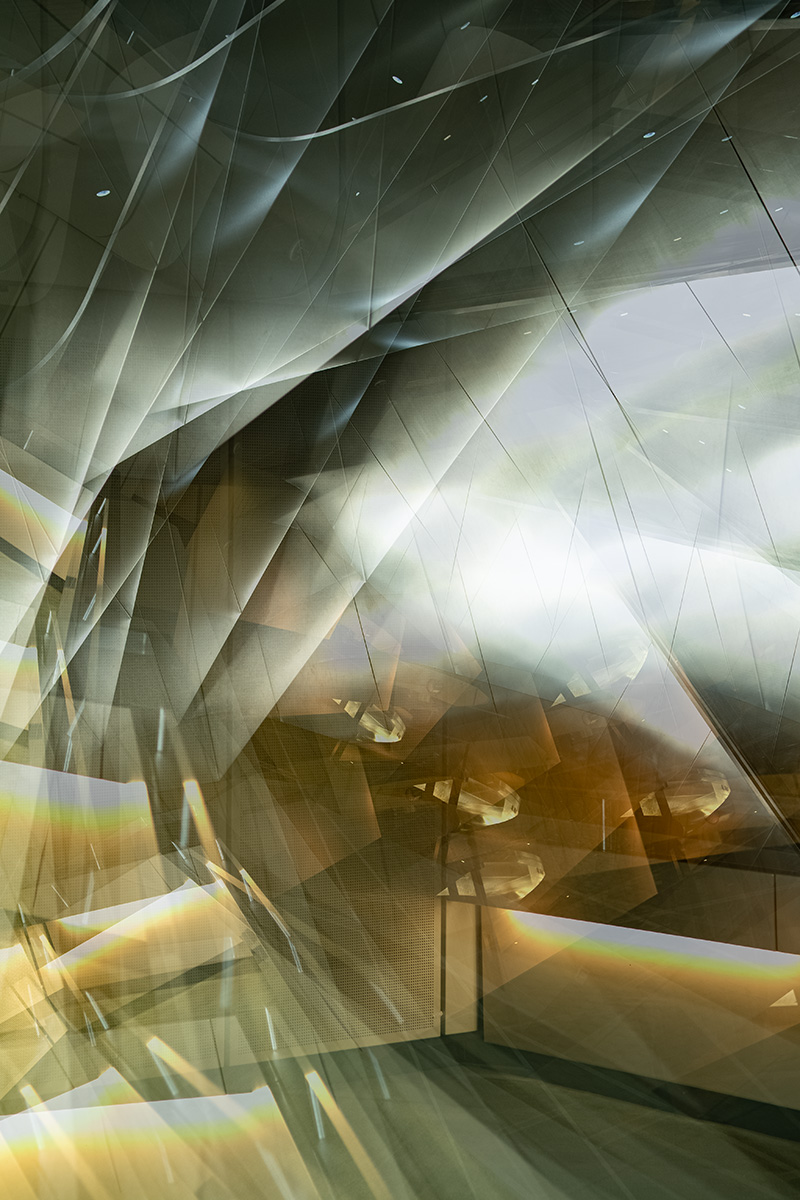

I’ve been following Olafur Eliasson’s work for many years, even before moving to Berlin. I’m particularly interested in his light installations using glass and reflections, as well as his career trajectory. He doesn’t create traditional art objects, and some of his installations are incredibly complex and likely expensive. That’s a scale and resource level I aspire to work at one day.

He has a large solo show at Istanbul Modern right now. I made plans to go see it so I could get a sense of how he staged it all.

Istanbul is relatively close to Berlin, but the flight is still about three hours. It was the beginning of winter, so the crowds weren’t nearly as large as usual.

The show was great, but Istanbul was interesting in itself. It was my first time in a Muslim-majority country. That wasn’t radically different, but I was definitely aware of it. The call to prayer sounds multiple times a day throughout the city.

Images I made on the streets of Istanbul

Olafur Eliasson at Istanbul Modern

Olafur Eliasson’s first solo exhibition in Türkiye, “Your unexpected encounter,” reflects the artist’s deep interest in light, color, perception, movement, geometry, and the environment. The artworks also reveal the network of relationships the artist forges between broad areas of research and his multidisciplinary practice. As well as following the personal journey of the artist, the exhibition addresses navigation and orientation on a wider scale, inspired by the site of the museum and its maritime location by the Bosphorus.

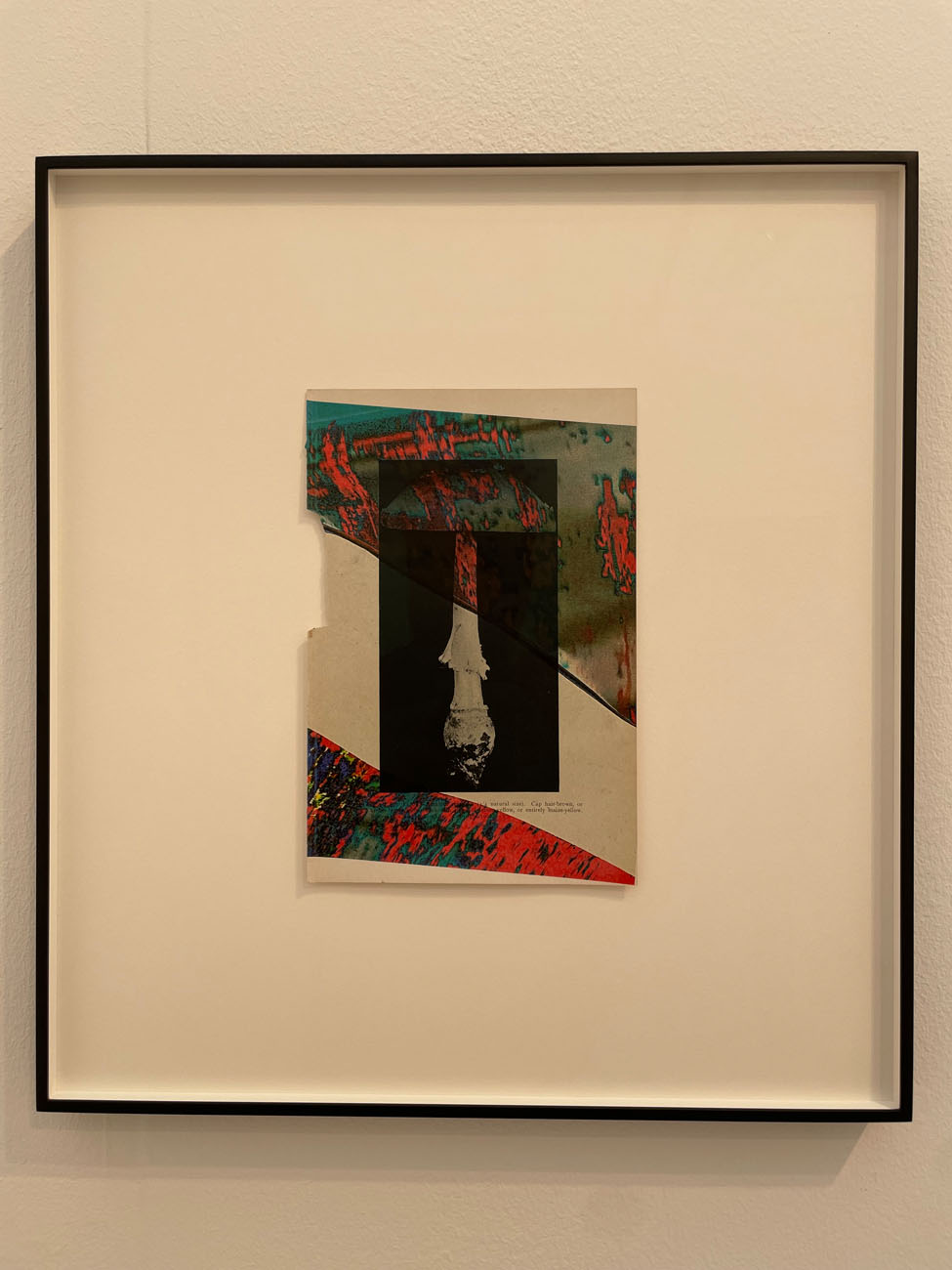

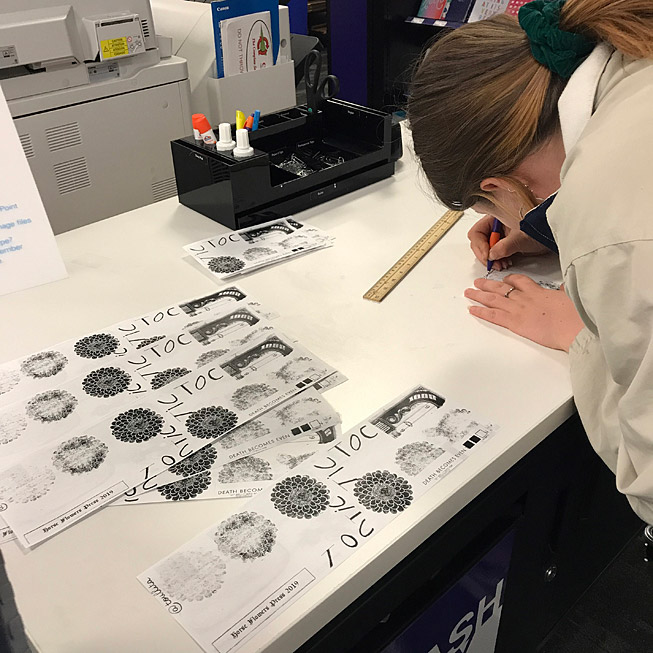

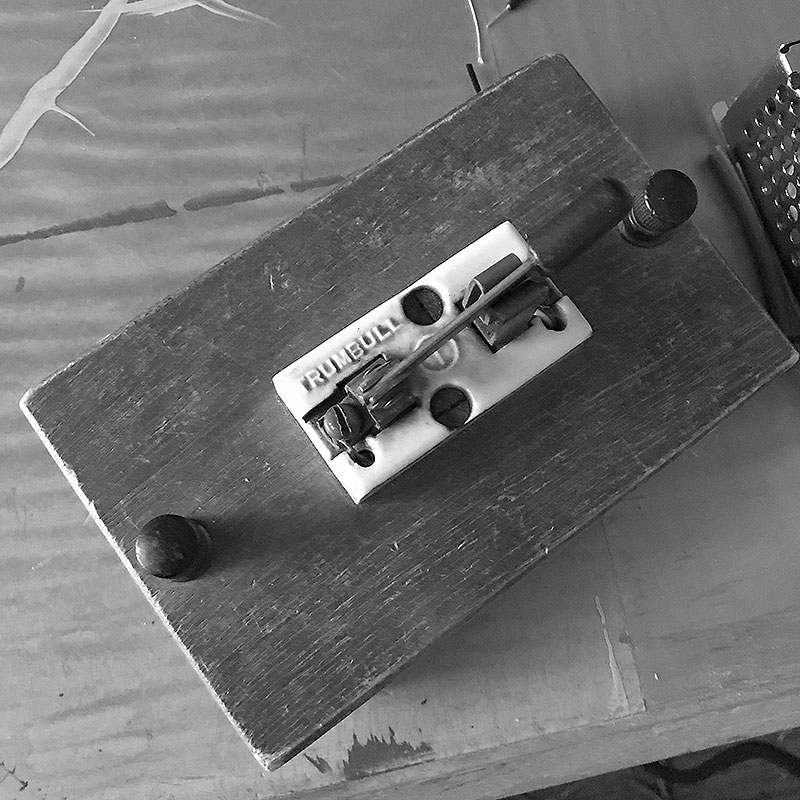

Making prints at Kunstquartier Bethanien

A long-planned appointment to work at Bethanien finally happened.

My kitchen printmaking studio had reached its limits. I just couldn’t get enough pressure on the lino plates to see consistent results. Also, shutting my kitchen down for printing means going without cooking for four or five days. That’s a pain.

I am a member of the B.B.K. artist union here in Berlin, and one of the perks is access to the Druckwerkstatt im Kunstquartier Bethanien. It is a well-maintained, world-class printmaking facility.

For my skill level it’s way over the top, but I did want to use their lino presses. Appointments are booked far in advance, so I made one, and my slot came up after Istanbul. The prints I got were far superior in every way. I also learned new approaches and got ideas for different work.

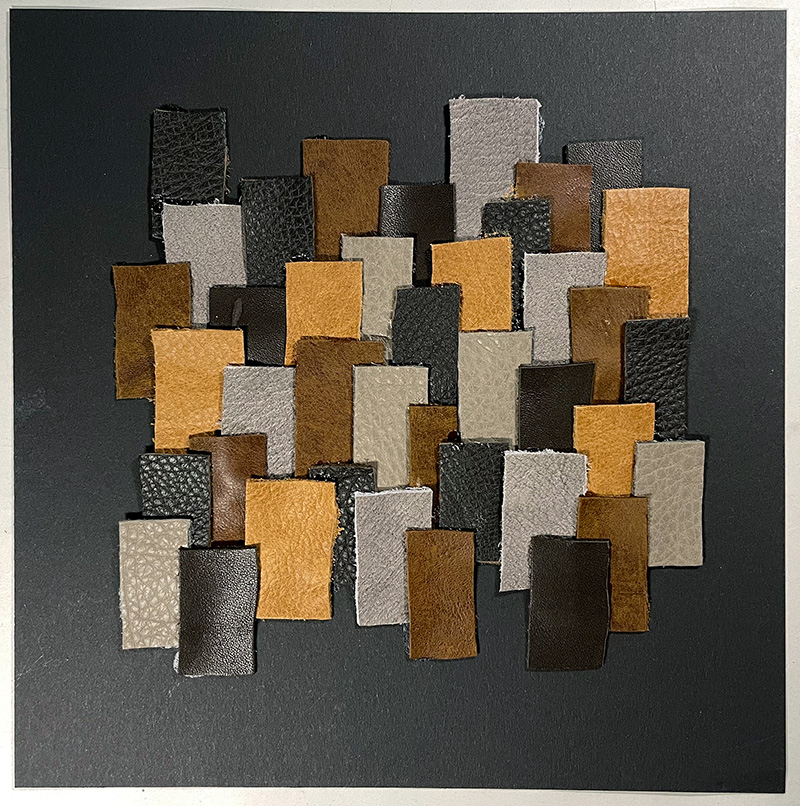

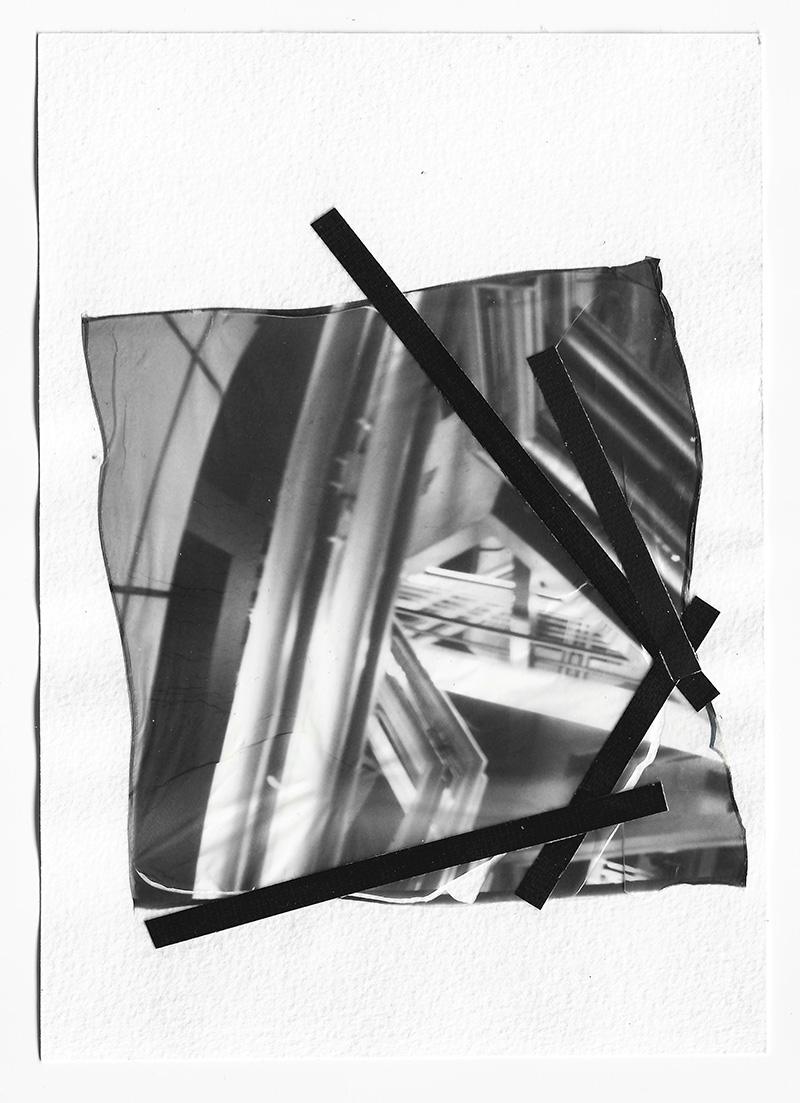

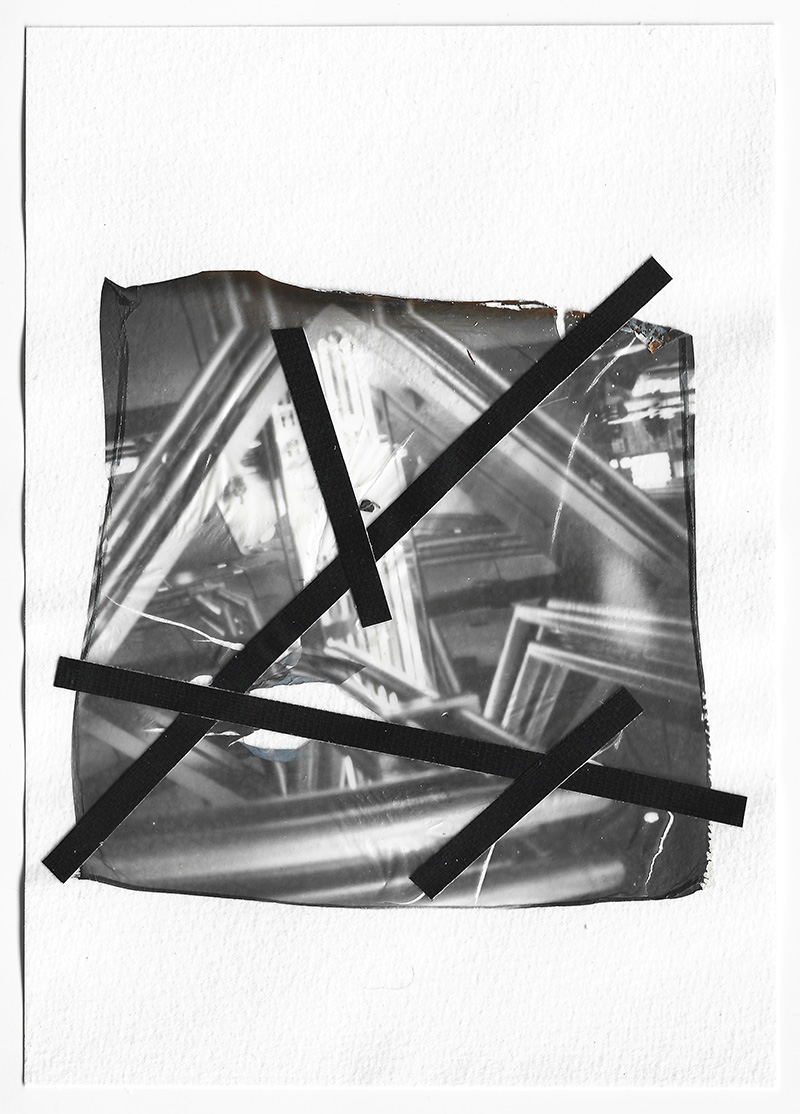

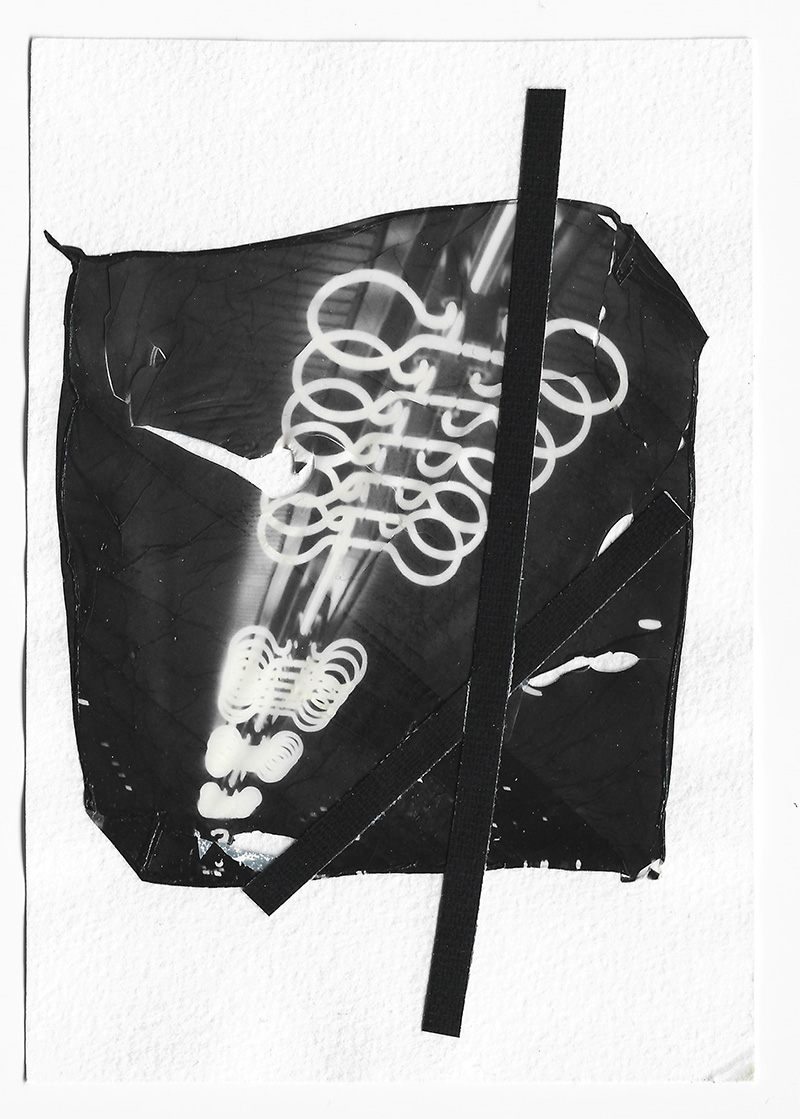

In addition to those lino prints, I started a new series of collages using the mistakes I made back in January. Instead of tossing them, I cut them up and used parts as graphic elements. I exhibited a group of those collages in Phasenpunkte and got some really positive feedback. More of those will come soon.

Glide path

2025 will be my last year in Berlin. It was always the plan to return after a few years. My vision for being here is complete, but I have an opportunity to work on my art full-time in the first half of the year. I’ll stay in Berlin to take full advantage of that work window. After that, I think it’s time to go back to California.